The AI wave hits software testing

2024 was a wild ride for anyone working in tech. AI seemed to explode overnight, and suddenly everyone was talking about how it would transform software development and testing. The Model Context Protocol (MCP) came out in late 2024, and that really accelerated things: it standardized how AI models connect to external tools and data sources, making AI a lot more practical for day-to-day work.

What struck me most was how different this felt from earlier waves of core Machine Learning and Deep Learning. Back then, you needed a strong background in statistics and mathematics to get something useful out of it. Now? Pretty much anyone can spin up an AI assistant and get real work done. I have seen product managers, junior engineers, and even folks from completely non-technical backgrounds successfully using AI to solve problems that would have required specialized skills just a couple of years ago.

2025 became the year where we all stopped playing around and started asking the hard questions: Is this actually production-ready? Can we trust these results? How do we validate AI outputs at scale? For me as an SDET, these questions became central to how I approached using AI in my own work.

The non-determinism problem

Here is something that took me a while to fully appreciate: AI models don't work like traditional software. When you run the same code with the same inputs, you get the same output every time. But AI? Not so much.

Large language models generate responses based on probability distributions. They are essentially predicting what comes next based on patterns in their training data. Even when you set parameters to make them more deterministic, such as setting temperature to 0, you can still get different results for the same prompt. Floating-point arithmetic variations, execution timing, and how requests are processed can all introduce variability.
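To make the sampling idea concrete, here is a minimal sketch of temperature-scaled sampling. It is a toy, not how any particular model or API is implemented: the candidate tokens and their scores are made up, and I use a temperature of 0.01 because dividing by exactly 0 is undefined in this simple formula. The last line shows the floating-point effect mentioned above: summing the same numbers in a different order can give a slightly different result.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidates for the next token, with made-up scores.
tokens = ["assert", "print", "return"]
logits = [2.0, 1.5, 0.5]

rng = random.Random()  # unseeded on purpose, so repeated runs can differ
warm = [sample_token(tokens, logits, 1.0, rng) for _ in range(10)]
cold = [sample_token(tokens, logits, 0.01, rng) for _ in range(10)]
print("temperature 1.0 :", warm)   # a mix that changes from run to run
print("temperature 0.01:", cold)   # collapses onto the highest-scoring token

# Floating-point addition is not associative, so summation order matters.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))  # False
```

In this toy the low-temperature case is effectively deterministic. In a real serving stack, tiny numeric differences like the one on the last line can still tip a near-tie between two tokens, which is one reason identical prompts can diverge.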

I learned this the hard way when I generated a test utility script one day, loved it, and then tried to regenerate something similar the next day with the exact same prompt. Got different code. Not wrong per se, just not the same. That is when it clicked for me: this is fundamentally probabilistic technology.

What does this mean practically? A few things:

First, you can't just take AI-generated code and drop it into production without review. Every output needs validation. For testing work, this means running the generated scripts, checking the logic, and making sure the results actually make sense.

Second, reproducibility is genuinely challenging. The same prompt can produce different code from one day to the next, and that should tell us something about how early we still are in figuring this out.

Third, if you're scaling AI use across a team or organization, you need proper quality gates. Code review processes, automated validation, continuous monitoring: all of it matters more with AI-generated artifacts than with human-written code because the variability is higher.

The probabilistic nature is not a bug but a feature (as we have heard some engineers say about software ;)). It is just how these models work. Understanding that helps you use them more effectively.

Why AI is actually good at generating code

Despite the non-determinism issue, code generation is one area where AI genuinely shines. I use it almost daily now, and there are solid technical reasons why it works so well.

Verifiable outcomes make all the difference. Code either compiles or it doesn't. Tests either pass or fail. You get immediate, objective feedback. This is huge because it means you can validate AI-generated code programmatically. Run it through a linter, execute your test suite, check if it handles edge cases. The feedback loop is fast and unambiguous.
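Here is a minimal sketch of what "validate programmatically" can look like, using only the standard library. The function name `validate_generated_code`, the sample `pass_rate` snippet, and the test cases are all hypothetical; in practice you would more likely write the generated code to a file and run a linter and your real test suite against it.

```python
def validate_generated_code(source, function_name, cases):
    """Return a list of problems found; an empty list means every check passed."""
    try:
        compiled = compile(source, "<generated>", "exec")  # syntax check only
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg} (line {exc.lineno})"]

    namespace = {}
    try:
        exec(compiled, namespace)  # only execute code you have already read
    except Exception as exc:
        return [f"failed while running module-level code: {exc!r}"]

    func = namespace.get(function_name)
    if not callable(func):
        return [f"{function_name} is not defined"]

    problems = []
    for args, expected in cases:
        try:
            actual = func(*args)
        except Exception as exc:
            problems.append(f"{function_name}{args} raised {exc!r}")
            continue
        if actual != expected:
            problems.append(
                f"{function_name}{args} returned {actual!r}, expected {expected!r}"
            )
    return problems

# Hypothetical AI-generated snippet with a subtle bug: it crashes on an empty list.
generated = '''
def pass_rate(results):
    passed = sum(1 for r in results if r == "pass")
    return passed / len(results)
'''

cases = [
    ((["pass", "fail"],), 0.5),
    ((["pass", "pass"],), 1.0),
    (([],), 0.0),  # edge case: no results at all
]
for problem in validate_generated_code(generated, "pass_rate", cases):
    print(problem)
```

The snippet compiles and handles the two ordinary cases, so a quick glance would pass it. Only the empty-list case exposes the division by zero, which is why the edge cases belong in the check.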

The training data is rich and structured. Programming languages follow strict rules. There are millions of open-source repositories, documentation sites, and Stack Overflow threads that AI models learn from. The patterns are clear because code needs to be precise to work.

Context boundaries are well-defined. When I ask an AI to generate a Python script to parse test logs, the constraints are clear: Python syntax, specific input format, expected output structure, maybe some library preferences. Compare that to asking “what is the best testing strategy for this feature?”, where the answers are far less clear-cut.

Modern coding agents have gotten really sophisticated. The Model Context Protocol lets them access your codebase, read documentation, and even execute code to verify it works. It's not just text generation anymore—these tools can iterate based on actual execution results.

I have been doing what people call "vibe coding" more and more. That is where you describe what you want in natural language and let the AI figure out the implementation details. It does not mean you need zero technical knowledge, but it dramatically lowers the barrier and speeds things up.

Python's dominance in test automation

Quick note on Python, since I mentioned it: it has become the go-to language for test automation, and that's not by accident.

The ecosystem is fantastic. pytest is probably the most popular framework right now, and for good reason. Clean syntax, over 1,300 plugins, powerful fixtures, great CI/CD integration. You can also use unittest if you want something built into Python's standard library.
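As a small illustration of the clean syntax, this is roughly what a pytest-style test looks like. The `pass_rate` helper is a made-up example, not something from a real project. The tests are plain functions with plain `assert` statements, so this file needs no imports; fixtures and parametrization would need `import pytest`.

```python
def pass_rate(results):
    """Fraction of results equal to "pass"; 0.0 when there are no results."""
    if not results:
        return 0.0
    return sum(1 for r in results if r == "pass") / len(results)

# pytest collects functions named test_* and reports failed asserts
# along with the values involved; no test class is required.
def test_pass_rate_mixed_results():
    assert pass_rate(["pass", "fail", "pass", "pass"]) == 0.75

def test_pass_rate_empty_run():
    assert pass_rate([]) == 0.0
```

Saved as something like `test_pass_rate.py`, this would be picked up by running `pytest` in the same directory.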

But beyond frameworks, Python just makes sense for testing work:

- It is readable. When you are reviewing test code as a team, clarity matters.

- It integrates naturally with ML tools, which is becoming more relevant as we use AI for test analysis.

- It is fast to prototype with. Need a quick script to parse some logs? Python gets you there quickly.

- Cross-platform support is seamless.

The community is massive too. If you hit a problem, someone's probably solved it and written about it already.

The shift to analysis and reporting in senior roles

Here is something I wish someone had told me earlier in my career: as you move into senior SDET roles, you spend less time writing tests and more time analyzing results.

When you are managing test runs across dozens of builds, multiple environments, and thousands of test cases, manual analysis stops being feasible. You are looking at trends, identifying patterns, figuring out what is flaky versus what's a real regression, and then communicating all of this to stakeholders who need different levels of detail to judge how risky the upcoming release is.
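One simple way to start separating flaky tests from real regressions is to look at how often a test's outcome flips across builds. The sketch below is a rough heuristic under my own assumptions (outcomes are just "pass" or "fail", ordered oldest build to newest, and the labels are invented for illustration), not a definitive classifier.

```python
def classify_history(outcomes):
    """Label one test from its per-build outcomes, ordered oldest to newest."""
    if not outcomes:
        return "no data"
    # Count how many times the outcome changes between consecutive builds.
    flips = sum(1 for a, b in zip(outcomes, outcomes[1:]) if a != b)
    if flips == 0:
        return "stable" if outcomes[0] == "pass" else "consistently failing"
    if flips == 1:
        # One clean transition: broke and stayed broken, or was fixed.
        return "likely regression" if outcomes[-1] == "fail" else "likely fixed"
    return "possibly flaky"

# Hypothetical histories across four builds.
history = {
    "test_login": ["pass", "pass", "pass", "pass"],
    "test_checkout": ["pass", "pass", "fail", "fail"],
    "test_search": ["pass", "fail", "pass", "fail"],
}
for name, outcomes in history.items():
    print(f"{name}: {classify_history(outcomes)}")
```

A test that fails only in the newest build also gets a single flip, so it shows up as a likely regression here even though it could be a one-off. Reruns on the same build are the usual way to tell those apart.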

Senior SDETs become the bridge between raw test data and strategic decisions. Engineering Product managers need to know if we are ready to ship. Engineering leads need to understand where quality risks are. Executives need high-level metrics. The ability to quickly generate clear, accurate reports directly impacts these decisions.

Here is where it gets real: modern development cycles are relentless. Continuous delivery means releases are happening constantly. When you are analyzing thousands of test runs to identify patterns, a tool that cuts analysis time from two days to four hours is genuinely transformative. That faster feedback loop means better decisions and fewer bugs reaching customers.

This is where AI has been most valuable to me personally. Not generating test cases (though that is useful too), but helping me build custom analysis tools that adapt as our needs change.

A real example: cross-build regression analysis

Let me walk through a concrete scenario I dealt with recently. We had about 500 test execution runs for each of roughly 10 to 20 builds, and I needed to identify regressions, track recurring failure patterns (we call them syndromes), and present findings to leadership.

The traditional approach would have been brutal. Parse all those logs manually? Days of work. Try to spot patterns across thousands of data points? Error-prone at best. Format everything into a presentation-ready report? More hours gone. And if requirements changed, like they always do, you are starting over. Yes, we could write code for all of this by hand, but getting that right takes time too.

Here's what I did instead. I wrote out clear requirements in plain English such as:

Analyze test results from builds X through Y. Compare pass/fail rates across all test suites. Group failures by error syndrome. Generate a summary report with overall build quality trends.

As part of the prompt, I also explained what a “regression” is, so that the generated script could flag failures that newly appeared between runs.

I fed this to Claude along with the sample data structures from our test results, and it generated a Python script that:

- Parsed our JSON test result files

- Aggregated data across builds

- Performed tabular comparisons

- Created a report highlighting regressions

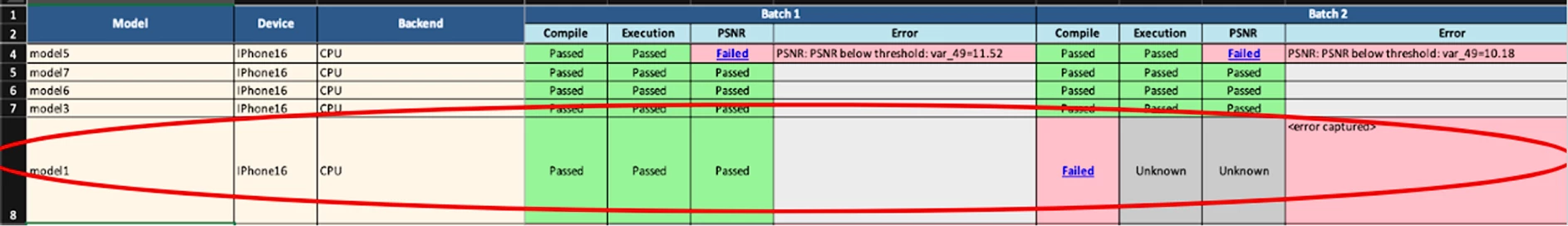

The screenshot above is a snippet from the larger report: the AI-generated code produced a table showing changes between builds and potential regressions.
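To give a feel for the shape of such a script, here is a heavily simplified sketch of the parse, aggregate, compare, and report steps. It is not the script from this project. The JSON layout (`build`, `results`, `test`, `status`), the function names, and the sample data are all assumptions made for illustration, and a regression is taken to mean a test that passed in the previous build and fails in the current one.

```python
import json

def load_build(raw_json):
    """Parse one build's results into (build_id, {test_name: status})."""
    data = json.loads(raw_json)
    statuses = {r["test"]: r["status"] for r in data["results"]}
    return data["build"], statuses

def find_regressions(previous, current):
    """Tests that passed in the previous build and fail in the current one."""
    return sorted(
        name for name, status in current.items()
        if status == "fail" and previous.get(name) == "pass"
    )

def pass_rate(statuses):
    """Fraction of tests with status "pass"; 0.0 for an empty build."""
    if not statuses:
        return 0.0
    return sum(1 for s in statuses.values() if s == "pass") / len(statuses)

def build_report(raw_builds):
    """Return report lines comparing each build with the one before it."""
    lines = [f"{'Build':<8}{'Pass rate':<12}Regressions"]
    previous = None
    for build_id, statuses in (load_build(raw) for raw in raw_builds):
        regressions = find_regressions(previous, statuses) if previous is not None else []
        lines.append(
            f"{build_id:<8}{pass_rate(statuses):<12.0%}{', '.join(regressions) or '-'}"
        )
        previous = statuses
    return lines

# Hypothetical results for two consecutive builds.
raw_builds = [
    json.dumps({"build": "101", "results": [
        {"test": "test_login", "status": "pass"},
        {"test": "test_checkout", "status": "pass"},
        {"test": "test_search", "status": "fail"},
    ]}),
    json.dumps({"build": "102", "results": [
        {"test": "test_login", "status": "pass"},
        {"test": "test_checkout", "status": "fail"},
        {"test": "test_search", "status": "fail"},
    ]}),
]
print("\n".join(build_report(raw_builds)))
```

Note that `test_search` fails in both builds, so it is not reported as a regression for build 102. Only `test_checkout`, which went from pass to fail, is.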

The time difference was significant. What would've taken me probably two full days to code from scratch took about three hours total—including time to review the code, fix a couple of edge cases, and run validation tests.

But here's the real win: when requirements changed a week later ("Can you add performance regression detection?"), I just provided the existing code as context and asked for that specific enhancement. The AI understood the current implementation and suggested targeted changes. Took maybe 30 minutes versus the several hours it would have taken to implement code from scratch.
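For a sense of what a performance regression check can look like, here is a minimal sketch. The approach (compare median durations per test and flag anything that grew by more than a threshold) and the 20% default are my assumptions for illustration, not a description of the enhancement the AI actually produced.

```python
from statistics import median

def find_slowdowns(baseline, current, threshold=0.20):
    """Flag tests whose median duration grew by more than `threshold`.

    baseline and current map test names to lists of durations in seconds.
    Returns {test_name: (old_median, new_median)} for flagged tests.
    """
    flagged = {}
    for name, durations in current.items():
        old = baseline.get(name)
        if not old or not durations:
            continue  # nothing to compare against
        old_median = median(old)
        new_median = median(durations)
        if old_median > 0 and (new_median - old_median) / old_median > threshold:
            flagged[name] = (old_median, new_median)
    return flagged

# Hypothetical timings from two builds.
baseline = {"test_login": [1.0, 1.1, 0.9], "test_search": [2.0, 2.1, 1.9]}
current = {"test_login": [1.0, 1.05, 0.95], "test_search": [3.0, 3.2, 2.9]}
for name, (old, new) in find_slowdowns(baseline, current).items():
    print(f"{name}: {old:.2f}s -> {new:.2f}s")
```

The median is used instead of the mean so that a single slow run does not trigger a false alarm. Whether 20% is the right threshold depends on how noisy your environment is.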

The generated code had good comments too, which meant when a teammate needed to understand how syndrome grouping worked, they could read through it without asking me a dozen questions.
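For readers wondering what syndrome grouping might involve, here is one possible sketch: normalize each error message by masking the parts that vary (numbers, hex addresses) and group tests by the resulting key. The normalization rules, function names, and sample failures are assumptions for illustration, not the logic from our actual script.

```python
import re
from collections import defaultdict

def syndrome_key(message):
    """Normalize an error message so that similar failures share one key."""
    key = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses first
    key = re.sub(r"\d+", "<n>", key)                    # then ids, ports, timings
    return key.strip().lower()

def group_by_syndrome(failures):
    """Map each normalized message to the tests that failed with it."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[syndrome_key(message)].append(test_name)
    return dict(groups)

# Hypothetical failures: (test name, error message).
failures = [
    ("test_login", "Timeout after 30s waiting for port 8080"),
    ("test_checkout", "Timeout after 45s waiting for port 9090"),
    ("test_search", "Null pointer at 0x7ffd2a10"),
]
for key, tests in group_by_syndrome(failures).items():
    print(f"{key}: {tests}")
```

The two timeout failures collapse into one syndrome even though their numbers differ, which is the point: one root cause, one row in the report.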

Important reality check: AI sped things up dramatically, but my expertise was still crucial. I had to validate the logic, ensure the statistical comparisons were appropriate, verify edge case handling, and make sure the visualizations actually communicated what stakeholders needed to see. AI is a productivity multiplier, not a replacement for understanding your domain.

Why code generation works better than decision-making

This is something I have thought about a lot, because there's a clear pattern in where AI helps me versus where it falls short.

Code generation has clear success criteria. The code runs or it doesn't. The tests pass or they fail. The script produces the expected output or it errors out. This objectivity makes validation straightforward. I can verify AI-generated code the same way I'd verify human-written code—run it, test it, check the outputs.

Testing decisions are fundamentally different. When I am deciding whether to automate a particular test, prioritize one test suite over another, or determine if we have adequate coverage for a feature, there's no simple pass/fail. These decisions involve:

- Risk assessment based on feature criticality and change frequency

- Resource constraints and timeline pressures

- Team expertise and maintenance burden

- Historical data about where bugs tend to appear

- Stakeholder priorities that might shift

AI cannot really help me make those judgment calls because they are contextual and subjective. Sure, it can summarize data or suggest frameworks for thinking through decisions, but the actual decision requires understanding organizational dynamics, technical debt, team capabilities, and business priorities in ways that aren't easily captured in a prompt.

This is why I lean heavily on AI for building tools and generating code, but keep human judgment central for strategic testing decisions.

Lessons learned along the way

A few practical takeaways from using AI in my SDET work over the past year:

Be specific with context. The more details you provide about your data structures, expected outputs, and constraints, the better the generated code. I've learned to include sample inputs and outputs in my prompts.
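One lightweight way to make this a habit is to keep a prompt template that forces you to fill in the sample input, expected output, and constraints every time. The sketch below uses only the standard library; the template wording, field names, and sample values are hypothetical.

```python
import json
from string import Template

PROMPT_TEMPLATE = Template("""\
Task: $task

Input format (one sample record):
$sample_input

Expected output (for that sample):
$sample_output

Constraints:
$constraints
""")

def build_prompt(task, sample_input, sample_output, constraints):
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return PROMPT_TEMPLATE.substitute(
        task=task,
        sample_input=json.dumps(sample_input, indent=2),
        sample_output=sample_output,
        constraints="\n".join(f"- {c}" for c in constraints),
    )

prompt = build_prompt(
    task="Write a Python function that summarizes test results per build.",
    sample_input={"build": "101", "test": "test_login", "status": "pass"},
    sample_output="101: 1 passed, 0 failed",
    constraints=["Standard library only", "Handle an empty result list"],
)
print(prompt)
```

Keeping the template in version control alongside the generated code also gives you a record of what you asked for.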

Iterate in small steps. Rather than asking for a complete, complex solution upfront, I often start with a basic version and then enhance it incrementally. This makes validation easier and helps me catch issues early.

Always validate outputs. This should go without saying, but run the generated code. Test edge cases. Check the logic. AI makes mistakes, sometimes subtle ones that only show up in specific scenarios.

Use AI-generated code as a starting point. I almost always modify generated code to fit our specific patterns, add better error handling, or optimize for our use case. That's fine—the AI still saved me tons of time.

Keep context when iterating. When asking for enhancements, provide the existing code. The AI can make targeted changes instead of rewriting everything, which preserves the parts that already work.

Document your prompts. When you find a prompt pattern that works well for your use case, save it. You'll use similar patterns again.

Know when to stop. Sometimes you'll spend more time trying to get AI to generate exactly what you want than it would take to just write it yourself. Recognize those moments and switch gears.

Final thoughts

AI-powered testing tools have proliferated over the past year. Playwright's AI agents can generate tests, heal broken tests automatically, and expand coverage with minimal manual effort. These tools have real value, and I am not dismissing them.

But for me, the biggest impact has been in building custom analysis and reporting tools that adapt to my team's evolving needs. Tools that scale with our data, integrate with our specific workflows, and free me up to focus on strategic questions rather than repetitive analysis tasks.

The ability to go from "I need a tool that does X" to having working code in a few hours instead of a few days has genuinely changed how I work. When I reduced regression analysis time from 8 hours to about 45 minutes through an AI-generated analysis script, that wasn't just a personal productivity win. It meant faster feedback to the team and quicker decisions about release readiness.

Here's my take: the most effective SDETs going forward won't be the ones who know how to use AI for everything, but the ones who understand where it adds value versus where human expertise is irreplaceable. Leverage AI for tasks with clear success criteria—code generation, data parsing, report formatting, visualization creation. Keep your judgment central for the strategic stuff—risk assessment, priority decisions, test strategy, stakeholder communication.