Humanity increasingly relies on AI to make decisions, from hiring to loan approvals.

But are these results truly impartial and fair? Read the new article by @anuvip to learn how to prevent bias.

Artificial Intelligence is revolutionizing the world — automating decisions in hiring, healthcare, finance, education, and even law enforcement. But while AI is often viewed as objective and impartial, that perception can be misleading. At its core, AI doesn’t understand fairness or ethics — it learns patterns from data. And if that data reflects human prejudice or systemic inequality, the AI will absorb and replicate those biases, often without detection. In other words, AI is not inherently neutral; it mirrors the values — and the flaws — of the society that creates and trains it. This is where testers come in: to ensure not only that AI systems work, but that they work fairly.

AI systems learn from historical data. If that data reflects discriminatory trends — like biased hiring decisions, unequal credit approvals, or underdiagnosed health conditions — AI models trained on it will repeat and even amplify those biases.

Real-world examples of AI bias include:

- Resume-screening tools penalizing female candidates or those from women’s colleges.
- Healthcare models that underdiagnose conditions in women due to male-dominated training data.
- Facial recognition tools that misidentify people with darker skin tones far more often than lighter-skinned individuals.

These aren’t software bugs. They are algorithmic injustices — and they demand attention from testers.

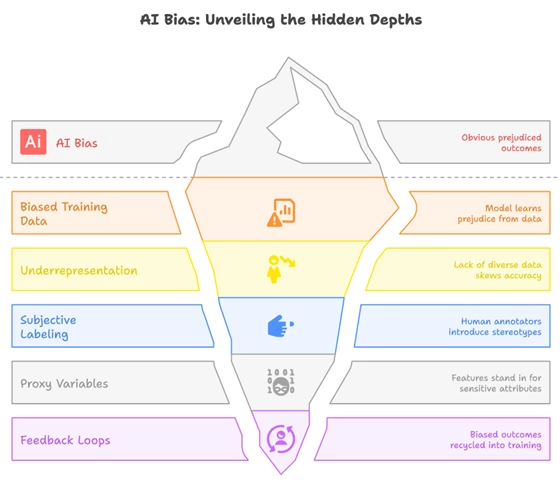

Where Does AI Bias Come From?

Understanding the roots of AI bias is the first step toward addressing it effectively. Bias can creep into a system in several ways:

- Biased Training Data: If the training data includes prejudice, the model learns it.
- Underrepresentation: A lack of diverse data (e.g., too few images of dark-skinned faces) skews accuracy.
- Subjective Labeling: Human annotators may introduce stereotypes unknowingly during data labeling.
- Proxy Variables: Innocent-looking features (like ZIP codes) can stand in for race, socioeconomic status, or gender.
- Feedback Loops: Biased models produce biased outcomes, which then get recycled into the training process.

As testers, knowing where bias originates helps us design smarter tests that catch these issues early.
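Proxy variables in particular lend themselves to a quick check. The following is a minimal sketch, not a standard audit procedure: it asks how well a single feature predicts a sensitive attribute compared with simply guessing the most common group. The function name `proxy_strength`, the field names `zip` and `group`, and the sample records are all hypothetical.

```python
from collections import Counter, defaultdict


def proxy_strength(records, feature, sensitive):
    """Return (baseline, accuracy) for guessing `sensitive` from `feature` alone.

    baseline: accuracy of always guessing the overall most common group.
    accuracy: accuracy of guessing the most common group per feature value.
    """
    by_value = defaultdict(Counter)
    overall = Counter()
    for record in records:
        by_value[record[feature]][record[sensitive]] += 1
        overall[record[sensitive]] += 1

    total = sum(overall.values())
    baseline = overall.most_common(1)[0][1] / total
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / total


# Made-up records for illustration only.
records = [
    {"zip": "10001", "group": "A"},
    {"zip": "10001", "group": "A"},
    {"zip": "10001", "group": "B"},
    {"zip": "20002", "group": "B"},
    {"zip": "20002", "group": "B"},
    {"zip": "20002", "group": "A"},
]

baseline, accuracy = proxy_strength(records, "zip", "group")
print(f"baseline={baseline:.2f}, accuracy from zip alone={accuracy:.2f}")
```

If the second number is well above the first, the feature carries information about the sensitive attribute and deserves a closer look, even when the sensitive attribute itself was removed from the training data.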

What Testers Can Do: From Observers to Ethical Advocates

In traditional software development, testers are seen as the last line of defense against bugs and breakdowns. They ensure systems meet requirements, function correctly, and deliver a smooth user experience. But with the rise of Artificial Intelligence, the role of a tester is evolving. Today’s testers are not just checking for broken features — they are being called upon to safeguard fairness, accountability, and ethical outcomes. In the context of AI, this means confronting the complex and often invisible threat of bias.

1. Validate AI Behavior Across Different Groups

Testers should begin by checking how AI systems perform across diverse demographic segments — such as gender, race, age, or disability. This process is called disaggregated testing. The goal is to ensure the model delivers consistent results, regardless of the user's identity.

For example, imagine a resume screening tool that is designed to shortlist job candidates. If the system favors male applicants over equally qualified female ones, that’s a sign of gender bias. By testing model behavior separately for different groups, testers can uncover disparities that overall accuracy metrics might hide.
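As a rough illustration of disaggregated testing, the sketch below computes accuracy and selection rate per group from a list of labelled predictions. The `disaggregate` helper and the toy data are assumptions made for this example; in practice the rows would come from your own evaluation set.

```python
from collections import defaultdict


def disaggregate(rows):
    """rows: iterable of (group, y_true, y_pred) with 0/1 labels."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for group, y_true, y_pred in rows:
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(y_true == y_pred)
        s["selected"] += int(y_pred == 1)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            "selection_rate": s["selected"] / s["n"],
        }
        for group, s in stats.items()
    }


# Toy shortlist results: (group, actually qualified, shortlisted by model).
rows = [
    ("male", 1, 1), ("male", 1, 1), ("male", 1, 1), ("male", 0, 0),
    ("female", 1, 0), ("female", 1, 1), ("female", 0, 0), ("female", 0, 0),
]

overall_accuracy = sum(t == p for _, t, p in rows) / len(rows)
per_group = disaggregate(rows)

print("overall accuracy:", overall_accuracy)
for group, metrics in per_group.items():
    print(group, metrics)
```

In this toy data the overall accuracy looks healthy, yet the per-group view shows that men are shortlisted three times as often as women. That gap is exactly what an aggregate number hides.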

2. Conduct Counterfactual Testing

Counterfactual testing is a powerful technique where testers change just one sensitive attribute (like gender, name, or age) while keeping the rest of the input exactly the same. This helps reveal if the AI model is relying unfairly on that attribute to make decisions.

Example:

- Resume A with the name "John" is accepted.
- Resume B, identical in content but with the name changed to "Ayesha", is rejected.

This discrepancy indicates potential bias, because the only difference is the perceived gender or ethnicity of the applicant. If the output shifts due to this single change, the AI is likely using that attribute inappropriately.
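A counterfactual check like this is easy to automate. The sketch below assumes the model can be called as a plain function on a dictionary of resume fields; `toy_screen` is a deliberately biased stand-in written for this example, not a real screening model, and the field names are hypothetical.

```python
def counterfactual_flips(predict, candidate, attribute, alternatives):
    """Swap one attribute at a time and report every value that changes the outcome."""
    baseline = predict(candidate)
    flips = []
    for value in alternatives:
        variant = {**candidate, attribute: value}  # everything else stays identical
        outcome = predict(variant)
        if outcome != baseline:
            flips.append((value, outcome))
    return baseline, flips


def toy_screen(resume):
    """Hypothetical, intentionally biased model used only to demonstrate the test."""
    score = resume["years_experience"]
    if resume["name"] == "John":
        score += 2  # unfair bonus tied to the name
    return "accept" if score >= 5 else "reject"


resume = {"name": "John", "years_experience": 4, "degree": "BSc"}
baseline, flips = counterfactual_flips(toy_screen, resume, "name", ["Ayesha", "Maria"])

print("baseline:", baseline)
print("flips:", flips)
```

An empty `flips` list is what a fair model should produce here. Any entry means the decision changed when only the name changed, which is worth raising as a defect.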

3. Use Fairness Metrics as Part of Testing

In traditional QA, we rely on metrics like test coverage, defect density, or response time. When testing AI systems, we need to adopt fairness metrics as well — quantitative ways to measure whether a model treats groups equitably.

Some common metrics include:

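- Disparate Impact: The ratio of favorable-outcome rates between groups, usually the less-favored group's rate divided by the more-favored group's rate. A ratio below 0.8 (the "four-fifths rule" from US employment guidance) is commonly treated as a warning sign of bias.
- Equal Opportunity: Measures whether true positive rates (e.g., the share of qualified applicants who are correctly selected) are equal across groups.
- Demographic Parity: Evaluates whether all groups have similar probabilities of receiving favorable outcomes.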

These metrics offer testers a structured way to measure fairness, just like performance or security benchmarks.
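To make these definitions concrete, here is a minimal sketch that computes all three for two groups using plain Python. The function names and the sample labels are assumptions for illustration, and the code assumes each group is non-empty and contains at least one truly positive case.

```python
def selection_rate(y_pred):
    """Share of cases that received the favorable outcome (1)."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true, y_pred):
    """Share of truly positive cases that the model selected."""
    hits = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(hits) / len(hits)


def fairness_report(y_true_a, y_pred_a, y_true_b, y_pred_b):
    """Compare group a (potentially disadvantaged) against reference group b."""
    rate_a, rate_b = selection_rate(y_pred_a), selection_rate(y_pred_b)
    tpr_a = true_positive_rate(y_true_a, y_pred_a)
    tpr_b = true_positive_rate(y_true_b, y_pred_b)
    return {
        "disparate_impact": rate_a / rate_b,          # 1.0 means parity
        "demographic_parity_diff": rate_a - rate_b,   # 0.0 means parity
        "equal_opportunity_diff": tpr_a - tpr_b,      # 0.0 means parity
    }


# Made-up labels: 1 = qualified / selected, 0 = not.
report = fairness_report(
    y_true_a=[1, 1, 0, 0, 1], y_pred_a=[1, 0, 0, 0, 1],
    y_true_b=[1, 1, 0, 0, 1], y_pred_b=[1, 1, 1, 0, 1],
)

for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

With this toy data the disparate impact ratio comes out at 0.5, well below the 0.8 threshold, so a test asserting `report["disparate_impact"] >= 0.8` would fail and flag the model for review.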

4. Run Adversarial and Edge Case Tests

Testers have always been skilled at finding boundary conditions — scenarios that push systems to the limit. This instinct is incredibly valuable in detecting AI bias. By introducing adversarial examples, testers can probe how models behave in unusual or less common situations that may not have been well-represented in training data.

Examples include:

- Uploading profile images with varying lighting and skin tones to test facial recognition tools.
- Using resumes with gender-neutral or culturally diverse names.
- Entering queries in regional languages or dialects to test NLP models.

This process can reveal blind spots where the AI fails to generalize or behaves unpredictably.

Definition:

Adversarial testing in the bias context refers to deliberately crafting inputs that challenge the model’s assumptions and reveal discriminatory behavior.
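One way to organize such tests is to group edge-case inputs into named slices and compare pass rates per slice. The sketch below assumes a text model that can be called as a function; `toy_intent` is a hypothetical keyword matcher standing in for a real NLP model, and the sample queries and the 0.9 threshold are invented for the example.

```python
def slice_pass_rates(predict, slices):
    """slices: {slice_name: [(input, expected_output), ...]} -> pass rate per slice."""
    return {
        name: sum(predict(text) == expected for text, expected in cases) / len(cases)
        for name, cases in slices.items()
    }


def toy_intent(text):
    """Hypothetical stand-in model that only recognizes the standard spelling."""
    return "balance" if "balance" in text.lower() else "unknown"


slices = {
    "standard_english": [
        ("What is my balance?", "balance"),
        ("Show balance", "balance"),
    ],
    "informal_or_dialect": [
        ("wots me balans", "balance"),
        ("How much I got left?", "balance"),
    ],
}

rates = slice_pass_rates(toy_intent, slices)
weak_slices = [name for name, rate in rates.items() if rate < 0.9]

print(rates)
print("needs attention:", weak_slices)
```

Keeping the slices as data makes it cheap to add new edge cases, such as more dialects or unusual name formats, whenever a blind spot is reported.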

5. Automate Bias Regression Testing

AI models evolve. They’re retrained on new data, optimized with fresh parameters, or fine-tuned with user feedback. With each update, fairness can shift — sometimes for the worse. That’s why testers need to treat fairness like any other regression risk.

Just as you re-run smoke tests after a code change, you should re-run bias checks after a model update. Compare fairness metrics, re-run counterfactuals, and track whether your model is getting more or less equitable over time.

Example:

A model update improves performance but introduces a new disparity where younger users now get rejected for loans more often. Bias regression testing would catch this unintended side effect.
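A bias regression check can be as simple as comparing stored fairness metrics from the previous model against those of the new one. The sketch below is one possible shape for that comparison, under the assumption that each metric is a selection-rate ratio (lowest group rate divided by highest group rate), so values lie between 0 and 1 and higher means fairer. The metric names, values, and tolerance are all made up.

```python
def fairness_regressions(baseline, candidate, tolerance=0.02):
    """Return metrics that dropped by more than `tolerance` between model versions.

    Both arguments map metric name -> value, where a higher value means fairer.
    """
    return {
        name: (baseline[name], candidate[name])
        for name in baseline
        if candidate[name] < baseline[name] - tolerance
    }


# Illustrative numbers only: previous model vs. retrained model.
baseline = {"selection_rate_ratio_age": 0.92, "selection_rate_ratio_gender": 0.95}
candidate = {"selection_rate_ratio_age": 0.71, "selection_rate_ratio_gender": 0.96}

regressions = fairness_regressions(baseline, candidate)

for name, (before, after) in regressions.items():
    print(f"REGRESSION {name}: {before:.2f} -> {after:.2f}")
```

Wired into a CI pipeline, a non-empty result would block the release in the same way a failing functional regression test does.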

6. Ask Ethical Questions, Not Just Technical Ones

Being a modern tester means engaging in the ethical conversation. If an AI system impacts human lives — deciding who gets hired, who qualifies for insurance, or who is flagged by law enforcement — testers have the responsibility to ask hard questions like:

- Should this decision be automated at all?
- Who might be negatively affected by this model?
- Are there humans in the loop where they need to be?

Testers must champion transparency, especially when dealing with "black-box" models. If decisions can’t be explained, they can’t be trusted.

A Real-World Example: Amazon’s Hiring AI

Amazon once experimented with an AI tool to automate resume screening. The model was trained on resumes submitted to the company over a 10-year period, most of which came from men.

Predictably, the model learned to penalize resumes that included the word “women’s,” as in “women’s chess club captain.” It began favoring male candidates even when resumes from equally qualified women were submitted.

Amazon ultimately shut down the project. But the damage was done — and the lesson was clear: if you don’t test for bias, you build it in.

Testers could have caught this through counterfactual tests, subgroup analysis, and fairness metrics before the model ever reached production.

Tools That Help Testers Test Fairness

You don’t need to build fairness audits from scratch. Several open-source tools can help testers detect and mitigate AI bias.

Incorporating these into your QA stack can make fairness testing part of your day-to-day workflow.

The Ethical Responsibility of Testers

Bias testing isn’t just about technical excellence — it’s about ethical accountability. Testers are in a unique position to advocate for fairness and transparency. We can push back when models aren’t explainable, flag when outcomes are inequitable, and refuse to rubber-stamp biased behavior.

Ask tough questions like:

- Should this decision even be automated?
- Who could be harmed by this model?
- Are we empowering or excluding users?

If your QA checklist doesn’t include fairness, now is the time to update it.

Final Thoughts

In the age of AI, software quality isn’t just about being fast or functional. It’s about being fair.

As testers, we have the opportunity — and responsibility — to ensure that the algorithms we help deliver are as inclusive, ethical, and unbiased as possible.

Because when AI makes decisions about humans, human-centered testing is non-negotiable.

“AI may be a black box, but testing should shine a light into it.”