Testing has always lived under pressure. New features arrive before you finish reading the last requirements. Stakeholders want speed, yet expect zero mistakes. Team members leave, and new members join. New tools and technological breakthroughs emerge continuously. Through it all, testers carry the responsibility of understanding the product, interrogating the system, generating data, designing scenarios, executing checks, analyzing failures, and communicating the much-awaited “truth” about the product status. And they are expected to do all of it quickly, clearly, and consistently.

A lot of this work is repetitive. A lot of it requires intense attention. Testers want speed but cannot compromise on judgment. They want tools that extend their capability. However, many testing tools end up creating yet another task to manage.

AI agents in testing offer a shift. They automate the predictable and static problems. They accelerate the variable and dynamic problems. They can pivot to your context, remember patterns, leverage tools, and help you operate with more clarity and much less cognitive load.

In this article, we will explore AI agents in testing through a simple yet multi-dimensional framework, 5W1H: What they are, How they work, Why they matter, Where they fit, When to use them, and Who provides them.

By the end of this article, you will be able to:

- Understand the difference between Generative AI and Agentic AI

- List the various components that make up an AI Agent

- Distinguish between the different levels of AI Agents in testing

- Choose the right levels of AI Agents for your work context

- Construct a plan for implementing AI Agents at your workplace

What Are AI Agents in Testing?

The software industry loves buzzwords: “AI assistants”, “AI chatbots”, “Copilot”, “Agent”, “Industry 5.0”, and so on. This creates confusion because the differences among these terms are rarely explained. To understand agents, you must begin with one important distinction: Generative AI versus Agentic AI. Let’s look at each in turn.

Generative AI

Generative AI was a technological breakthrough that gained popularity in 2022. It can read long documents and condense them into crisp pointers. It can generate ideas, test scenarios, code, and documentation. It can even explain logs, rewrite steps, point out requirement gaps, and analyze patterns.

But it has a limitation. It cannot act on its advice. It cannot call the tools that it suggests. It cannot execute a plan that it gives. It cannot even prevent its mistakes from happening again.

You ask. It answers. The conversation ends. How you use those answers is left to your own agency and skill as a tester. Now, let’s understand how Agentic AI changes this.

Agentic AI

Agentic AI is a natural extension of Generative AI. It has the agency to act. It takes the reasoning ability of generative AI and connects it to an action engine. This allows it to:

- Plan task steps

- Call tools (if required)

- Execute actions (by using tools)

- Inspect results (and store them)

- Decide what task to do next (and then do it)

- Retry when things fail (and escalate when they require human judgment)

An AI agent is the actual system that uses these Agentic AI capabilities. It acts like an assistant who can understand your task goals, follow a plan, course-correct when necessary, work until the job is done or blocked, and notify you in either case.
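The capabilities listed above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular agent framework works: `StepResult`, `run_step`, `run_plan`, and the flaky demo tool are all hypothetical names, and the "plan" is simply a fixed list of steps rather than something an LLM produced.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StepResult:
    step: str
    status: str        # "done" or "escalated"
    attempts: int
    output: str = ""


def run_step(step: str, tool: Callable[[str], str], max_retries: int = 2) -> StepResult:
    """Execute one planned step with a tool, retrying when the tool fails."""
    attempts = 0
    while attempts <= max_retries:
        attempts += 1
        try:
            output = tool(step)            # call the tool to execute the action
        except RuntimeError:
            continue                       # retry when things fail
        return StepResult(step, "done", attempts, output)
    # Retries exhausted: hand the step over to a human.
    return StepResult(step, "escalated", attempts)


def run_plan(steps: List[str], tool: Callable[[str], str]) -> List[StepResult]:
    """Work through the plan, storing each result, until done or blocked."""
    results = []
    for step in steps:
        result = run_step(step, tool)
        results.append(result)             # inspect and store the result
        if result.status == "escalated":
            break                          # blocked: stop and notify the tester
    return results


def make_flaky_tool() -> Callable[[str], str]:
    """Hypothetical tool: one step fails once, another fails every time."""
    calls = {}

    def tool(step: str) -> str:
        calls[step] = calls.get(step, 0) + 1
        if step == "run smoke tests" and calls[step] == 1:
            raise RuntimeError("transient failure")
        if step == "check payment sandbox":
            raise RuntimeError("sandbox unavailable")
        return f"ok: {step}"

    return tool


plan = ["collect logs", "run smoke tests", "check payment sandbox", "file report"]
results = run_plan(plan, make_flaky_tool())
for r in results:
    print(r.step, r.status, r.attempts)
```

In this run the second step succeeds on its retry, the third is escalated after its retries are exhausted, and the fourth is never started because the agent is blocked.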

Types of AI Agents

Just like other software tools, AI agents appear in various types and patterns. These patterns determine their behavior and the problems they’re suited to solve. Here are some of the common types of AI agents:

1. Solo Micro-Task Agent

These are tiny, specialized agents designed to cater to small testing tasks and micro activities. The narrow focus makes them powerful and more targeted to specific, well-defined problems. Think of these agents as personal assistants to handle repetitive micro-tasks. Here are some examples of such micro testing task agents:

- Bug Logger Agent: To capture essential bug details by recording steps, collecting logs, screenshots, console output, network traces, and environmental metadata. It can also format draft bug reports with structured fields, along with business impact and advocacy.

- Test Data Generator: To create structured data sets, random sequences, boundary values, negative values, domain-specific values, and combinations at scale. It can simplify the challenging task of crafting good test-specific datasets.

- Requirement Reviewer: To scan requirement documents for ambiguous words (“should,” “may,” “undefined”), contradictory statements, missing acceptance criteria, and unclear areas, and highlight them for a tester. It can help testers gain better clarity on the requirements themselves.
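To make the idea of a micro-task agent concrete, here is a minimal sketch of the simplest part of a Requirement Reviewer: flagging ambiguous words. It assumes plain-text requirements and uses only a keyword scan; a real agent would likely pair this with an LLM to catch contradictions and missing criteria. The function name `flag_ambiguous` and the sample text are made up for illustration.

```python
import re
from typing import List, Tuple

# Ambiguous terms taken from the examples above; extend the list for your own context.
AMBIGUOUS_TERMS = ("should", "may", "undefined")


def flag_ambiguous(requirements: str) -> List[Tuple[int, str, str]]:
    """Return (line number, term, line text) for every ambiguous term found."""
    pattern = re.compile(r"\b(" + "|".join(AMBIGUOUS_TERMS) + r")\b", re.IGNORECASE)
    findings = []
    for number, line in enumerate(requirements.splitlines(), start=1):
        for match in pattern.finditer(line):
            findings.append((number, match.group(1).lower(), line.strip()))
    return findings


sample = """The system shall lock the account after 5 failed logins.
The report should load quickly.
Admins may export data; behaviour for large exports is undefined."""

findings = flag_ambiguous(sample)
for number, term, text in findings:
    print(f"line {number}: '{term}' in: {text}")
```

The scan only highlights candidates. Deciding whether a flagged “may” is a real gap or an intentional option remains the tester’s call.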

2. Orchestrated Multi-Agent Systems

Some testing tasks are too broad for a single agent because they span multiple dimensions. Multi-agent systems split the responsibility by breaking the task into smaller sub-tasks, assigning each to a specialized agent, and combining the results.

To understand these, let’s take the example of a test strategy drafter agent. Test strategy work draws on several aspects of the product, such as product elements, quality criteria, project environment, and testing techniques.

A test strategy drafter can have multiple agents working together, such as:

- Product Element Analyzer: To list key flows, features, entry points, dependencies, and user interaction areas. It can model the product from its source code or HTML code, or your wireframe diagrams.

- Quality Attributes Analyzer: To list key quality attributes such as performance expectations, security obligations, reliability risks, and accessibility concerns that might be written explicitly in the requirements. It may also give a list of potential quality attributes based on the user persona of your application.

- Project Environment Analyzer: To make a realistic strategy and plan considering your project’s delivery timelines, risk appetite, team skills, historical data, and budget constraints. It can align the ideal strategy to your business realities.

- Testing Techniques Analyzer: To suggest techniques, methods (e.g., pairwise, model-based testing, risk-based prioritization), and approaches that fit the context and constraints.

Together, these agents can orchestrate their results to produce a base draft for a quick and comprehensive test strategy from a scattered collection of disconnected data.

The agentic chain can be configured for either automatic handoff or manual handoff, in case the team wants a review loop and better control.

3. Hybrid Human + AI Agent Model

This is the most practical and safest pattern for most testing teams as of today. Here, the responsibilities are split between a human testing expert and an AI agent. Human testing experts handle areas such as quality checks, ambiguity, judgment, prioritization, and risk analysis. On the other hand, the agent is designed to handle repetitive loops, pattern-heavy work, and structured execution.

A good example is test automation and scripting work. Here is how this model works for such tasks:

- Script Generator AI Agent: To draft script methods, actions, libraries, utilities, and test data. Most of these activities are repetitive in nature, and an AI agent can handle them very well.

- Test Expert (Human) for Script Review and Guidance: To evaluate logic, simplify complex flows, add deeper assertions, remove flakiness, and align scripts with project standards. These tasks require expert judgement and are best carried out by an expert tester.

The benefit of this model is that it allows speed without losing control and quality.

How Do AI Agents Work Internally?

AI agents are often sold as magical entities to non-technical customers. However, as a tester, it is crucial to understand the building blocks of AI Agents. This fundamental understanding is essential to working effectively with these tools. It will expose you to the various possibilities as well as limitations of these tools.

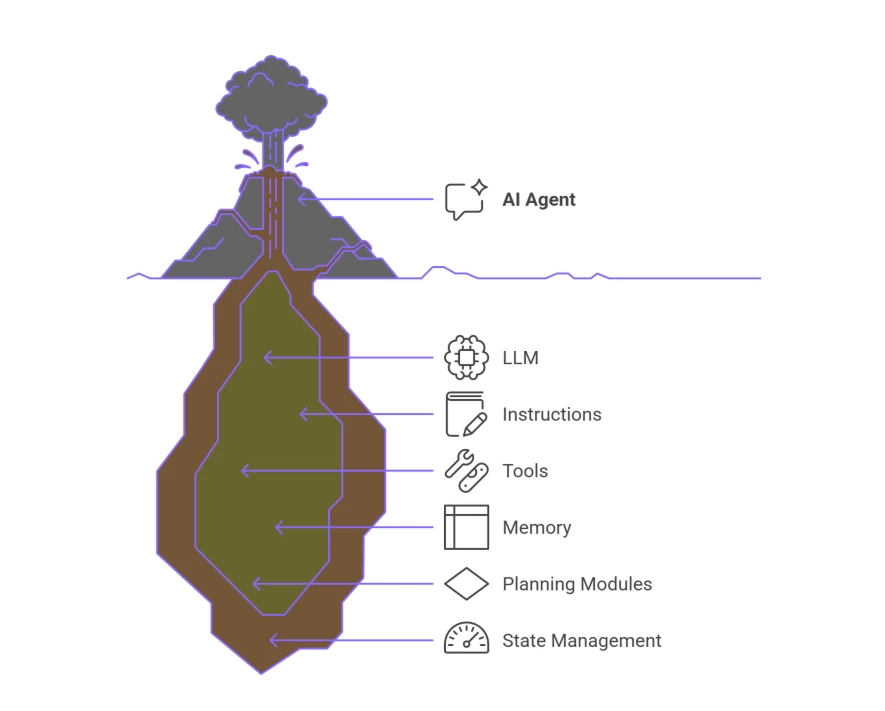

Agents are built using key components. Let’s understand each of them one by one.

- LLM (Large Language Model): This is the core part of most AI Agents. Most Agentic AI tools leverage LLMs internally. These models are used to interpret instructions, analyze context, process tokens, and generate outputs.

- Instructions: This carries the holistic definition of the task to be done, including key aspects such as constraints, rules, style, required formats, allowed tools, examples, exit criteria, escalation triggers, and acceptance criteria.

- Tools: Modern AI agents can use external, specialised tools for specific task needs, much like humans do. AI Agents can call these tools as needed. Tools include APIs, test runners, browsers, code interpreters, file processors, log analyzers, CLI commands, or custom functions. Tools give wings to AI agents.

- Memory: LLMs are stateless by default. This means that each query is processed independently. Memory stores context and key details so that the agent can recall past information and interactions. This lets the agent retain context across multi-step tasks. Memory can also be used to store your preferences, style, past decisions, downvoted outcomes, etc.

- Planning Modules: These break down tasks into a finite set of steps, identify fallback actions in case the primary step fails, and reorganize the instruction chain if an unexpected event occurs. They are designed to handle various decision-making sequences.

- State Management: This module tracks state information, including progress, errors, retries, and execution limits. It prevents loops from running endlessly and keeps tasks structured.

Figure: Various components that bring an AI agent to life

Here is a typical workflow of how an AI agent works persistently using the above components:

- Observe (the current task, environment, and state) →

- Interpret (the input, the instructions, and the surrounding context) →

- Plan (using internal planning modules to break the goal into steps) →

- Act (through LLM reasoning and tool calls that carry out the work) →

- Evaluate (checking tool outputs, constraints, and success criteria) →

- Store (updating memory and state with what was learned) →

- Repeat (until the objective is complete)


The final Repeat step closes the loop, allowing agents to work through a task iteratively until the objective is complete.
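The workflow can be sketched as a small loop. This is a deliberately simplified illustration under stated assumptions: simple rules stand in for the LLM’s interpreting and planning, tasks are strings of the form `tool:detail`, and `AgentState`, `run_agent`, and the two demo tools are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AgentState:
    """State management plus memory: progress, limits, and what was learned."""
    steps_taken: int = 0
    max_steps: int = 10
    memory: List[Tuple[str, str]] = field(default_factory=list)


def run_agent(tasks: List[str], tools: Dict[str, Callable[[str], bool]],
              state: AgentState) -> AgentState:
    queue = list(tasks)
    # Repeat until the objective is complete or the execution limit is hit.
    while queue and state.steps_taken < state.max_steps:
        task = queue.pop(0)                          # Observe the current task
        tool_name, _, detail = task.partition(":")   # Interpret the input
        tool = tools.get(tool_name)                  # Plan: choose a tool for the step
        if tool is None:
            outcome = "escalated: no tool"           # needs human judgment
        else:
            passed = tool(detail)                    # Act through a tool call
            outcome = "passed" if passed else "failed"   # Evaluate the tool output
        state.memory.append((task, outcome))         # Store what was learned
        state.steps_taken += 1
    return state


# Hypothetical tools: each takes the task detail and reports pass/fail.
tools = {
    "api": lambda detail: True,
    "ui": lambda detail: False,
}

tasks = ["api:GET /health", "ui:login page", "db:orders count"]
state = run_agent(tasks, tools, AgentState())
for task, outcome in state.memory:
    print(task, "->", outcome)
```

The `max_steps` limit plays the role of state management here: even if the task queue never emptied, the loop would stop instead of running endlessly.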

Why Use AI Agents?

Testing is an engineering loop. It runs in continuous cycles until you are satisfied with the state of your product. Below are some activities that typically occur within a testing loop.

Reviewing. Designing. Experimenting. Scripting. Executing. Triaging. Reporting. Repeat.

All these activities consume time, and many of them are repetitive by nature. Agents can accelerate the sub-activities within each cycle without replacing the tester’s role.

They allow testers to:

- Think more clearly about their testing goals and activities

- Script and execute tests faster

- Focus on risk and early exploration

- Offload repetitive work to machines

Agents aren’t about replacing testers. They are there to amplify your role as a tester.

Check out Part 2, where I will delve into When to use which level of agent, Where to add AI agents first, and Who provides agentic capabilities.