Exploratory testing can feel like a software adventure – you wander through an app, poke at features, and see what surprises pop up. This week’s tip shows how AI can be your friendly sidekick on that adventure. We’ll explain what exploratory testing is, then explore how AI tools (like ChatGPT) can help you generate clever test ideas, write test charters, and even document your findings. Let’s dive in!

What is Exploratory Testing?

Exploratory testing is a flexible, dynamic approach to software testing. Instead of following a strict script of predefined test cases, you explore the software in real time, learning and adjusting as you go. Testers use their intuition and creativity to try various flows and inputs, finding bugs that scripted tests might miss. In essence, it's the art of designing and executing tests at the same time, using what each result teaches you to decide what to try next.

Think of exploratory testing like freestyle jazz: you have a general theme (the feature or area you want to test), but you improvise and adapt based on what you observe. This approach thrives on curiosity and adaptability – you might start testing one thing and, based on an interesting behavior, veer off to investigate something unexpected. It’s a fun and insightful way to test, but it also means keeping track of your insights and ideas as you bounce around the app.

How AI Can Help Exploratory Testing

AI won’t replace the human creativity and insight that exploratory testing needs. However, it can supercharge your efforts by acting as a brainstorming partner and assistant. Here are a few ways AI tools (like ChatGPT) can help:

- Brainstorm Creative Test Ideas (Including Edge Cases): Exploratory testing thrives on creativity, and generative AI can fuel your imagination by suggesting test scenarios you might not think of yourself. For example, if you're stuck for ideas, you could ask an AI, "What are some unusual or extreme cases to test for our login page?" The AI might suggest passwords full of special characters, system clocks set far into the past or future, or simultaneous logins. This helps you cover quirky edge cases that would otherwise go unnoticed. (One tester shared that after asking ChatGPT about a file upload feature, it suggested simulating network interruptions mid-upload and using files with unusual characters in their names. The tester hadn't initially considered either idea.)

- Generate Test Charters and Missions: In exploratory testing, a test charter is a short mission statement for a testing session. It keeps you focused on an objective (for example, "Explore the checkout process for security and usability issues"). AI can help you draft charters tailored to specific quality attributes. Give ChatGPT a brief feature description and ask for a charter focused on security, performance, or another attribute. The result is a clear starting point, or "roadmap," for your session, and you don't have to brainstorm it all alone.

- Summarize Findings and Write Reports: After an exploratory testing session, you may be left with scattered notes: things you tried, bugs you found, and odd behaviors you observed. This is where AI can act as your test scribe. Feed your notes or raw observations to an AI tool and ask it to summarize your findings, highlight key points, and draft a concise report of what you uncovered. This helps turn your exploratory "treasure map" into a clear story for your team. Testers report that ChatGPT can quickly draft bug reports and session summaries that are easy for others to understand. Writing up results at the end of the day becomes much less of a chore: the AI produces a first draft, you check it, and you can move on to the next adventure!
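All three uses depend on writing a clear prompt. The following minimal Python sketch assembles two reusable prompts. One asks for a test charter and the other asks for a summary of session notes. It assumes you paste the resulting text into whatever AI chat tool you use. The function names (`charter_prompt`, `summary_prompt`) and the template wording are illustrative assumptions, not taken from any particular tool. The code does not call an AI service.

```python
# Hypothetical prompt templates: the wording is an illustration, not a required format.
CHARTER_TEMPLATE = (
    "Here is a feature description:\n{feature}\n\n"
    "Write a one-sentence exploratory test charter for a {minutes}-minute session "
    "that focuses on {attribute}. Then list three risks worth investigating."
)

SUMMARY_TEMPLATE = (
    "Summarize these exploratory test notes for my team. "
    "List each bug with steps to reproduce, then any open questions.\n\n{notes}"
)


def charter_prompt(feature: str, attribute: str, minutes: int = 60) -> str:
    """Build a prompt asking an AI assistant to draft a time-boxed test charter."""
    return CHARTER_TEMPLATE.format(feature=feature.strip(), attribute=attribute, minutes=minutes)


def summary_prompt(notes: list[str]) -> str:
    """Turn raw session notes into a prompt asking for a summary.

    Blank notes are dropped; the rest become a bullet list.
    """
    bullets = "\n".join(f"- {note.strip()}" for note in notes if note.strip())
    return SUMMARY_TEMPLATE.format(notes=bullets)


if __name__ == "__main__":
    print(charter_prompt("Checkout: users pay by card or gift card.", "security and usability"))
    print()
    # Invented sample notes, mirroring the profile-update example used later in this tip.
    print(summary_prompt([
        "Normal names save correctly",
        "300-character name -> error on save",
        "",
        "Name with special characters -> error on save",
    ]))
```

Keeping the wording in templates makes it easy to reuse the same prompt across sessions and refine it over time.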

Tip: Remember that AI’s suggestions are based on patterns and data it has seen. Always review AI-generated ideas or summaries with a critical eye. They’re there to inspire and assist you, but your judgment as a tester is final. If something the AI suggests doesn’t make sense for your app, you can skip it. If the summary misses an important detail, be sure to add it. Think of AI as a brainstorming buddy and note-taking helper, not as an all-knowing oracle.

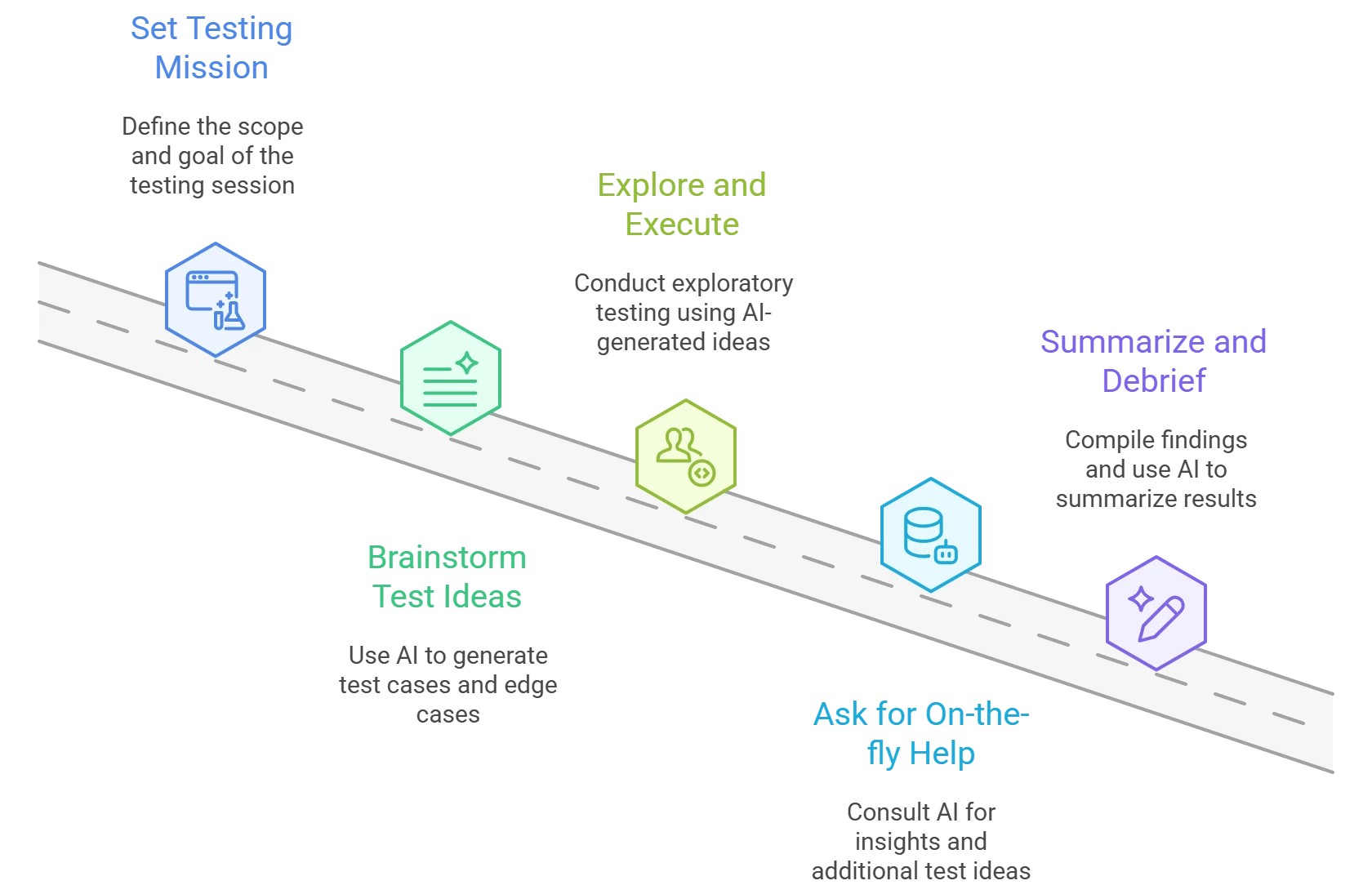

A Simple Workflow for AI-Assisted Exploratory Testing

Ready to give it a try? Here’s a simple step-by-step workflow to incorporate AI into your exploratory testing session:

1. Set Your Testing Mission: Pick a feature or area of the application to explore, and define a goal for your session. For example, you might decide, "I will explore the new profile update feature to see how it handles various inputs." This goal (your test charter) sets the stage. If you're not sure how to frame it, ask the AI to help craft a test charter for that feature. It can outline objectives or risks to focus on. Keep the mission focused and time-boxed (e.g., a one-hour session) so it stays manageable.

2. Brainstorm Test Ideas with AI: Before (or even during) your exploratory testing, use AI to gather test ideas and edge cases. Provide a brief description of the feature or scenario to an AI chatbot, and ask for interesting test cases or "what-if" scenarios. For instance: "I'm exploring the profile update feature. What are some unusual inputs or edge cases I should try?" The AI might suggest things like very long names, emoji characters, SQL injection strings, simultaneous updates from two devices, etc. Jot down the suggestions that seem useful. These AI-generated ideas can expand your testing coverage and spark your own creativity.

3. Explore and Execute: Now perform the exploratory testing for real. Use the ideas from Step 2 as guidance, but feel free to improvise as you interact with the application. Click through the profile update feature (in our example), try the scenarios the AI gave you (e.g., enter a 300-character name or a name containing an emoji), and observe how the software behaves. Note anything unusual: errors, odd responses, UI glitches, performance slowdowns. This is where your tester instincts shine. Follow up on strange behavior, try related variations, and probe the app to see where it might break. You're the explorer here; the AI's ideas are just a map. If one path uncovers something interesting, chase it down! (And if an AI-suggested test doesn't reveal anything, no problem: at least you checked that corner.)

4. Ask AI for On-the-fly Help (if needed): As you explore, you might hit a question or want to dig deeper into an unexpected area. This is a great time to pull in AI for a quick consult. Suppose you encounter an error message or an unusual behavior that you don't fully understand. You could ask something like, "What are common causes of this error?" or "How should a system ideally handle scenario X?" The AI can offer insight or even additional cases to try. For example, if you notice the profile update fails for very long names, you might ask, "What other extreme inputs could I test for a name field?" Think of it like having a testing buddy on call. The AI can suggest further angles (maybe "What about pasting an image file into the text field?"). This on-the-fly brainstorming can lead you to related issues. (Of course, if you're in the zone and don't need extra help, that's fine too. This step is optional.)

5. Summarize and Debrief with AI: After your testing session, it's time to make sense of your findings. Compile your notes (steps you tried, results, any bugs or oddities), then let AI help summarize them. You can give your AI assistant a list of your observations and say, "Summarize these test findings," or "Draft a bug report for the most critical issue I found." The AI can turn your exploratory notes into a coherent summary. For example, it might note that "the profile update feature succeeded with normal inputs but showed errors with extremely long names and special characters, suggesting inadequate input validation." It can list the bugs you found with details, or produce a tidy bullet list of outcomes. Use this as a starting point for your report. Double-check it against your notes, because AI can miss nuances or state things your notes don't support. In the end, you'll have a clear report of your exploratory session ready to share with the team, with much less writing effort on your part!
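To make Steps 2 and 3 more concrete, here is a small Python sketch. It collects the name-field ideas mentioned above (a 300-character name, emoji, special characters, an SQL injection string) into a checklist you can work through by hand. The function name `name_field_edge_cases` is hypothetical. The empty, whitespace-only, and markup entries are extra assumptions added for illustration. The code only prints the inputs and does not test any real application.

```python
# A checklist of candidate inputs for a profile "name" field.
# The 300-character length matches the example in the text; nothing here
# reflects any real application's validation rules.


def name_field_edge_cases() -> dict[str, str]:
    """Return labelled edge-case inputs to try by hand during a session."""
    return {
        "typical": "Ada Lovelace",
        "empty": "",                                # assumption: extra idea, not from the text
        "whitespace_only": "   ",                   # assumption: extra idea, not from the text
        "very_long_300": "A" * 300,                 # the 300-character name from Step 3
        "emoji": "Ada \U0001F680",                  # name containing an emoji
        "special_characters": "Zoë Ångström-O'Neil",
        "sql_injection_string": "Robert'); DROP TABLE users;--",
        "html_markup": "<b>Ada</b>",                # assumption: extra idea, not from the text
    }


if __name__ == "__main__":
    for label, value in name_field_edge_cases().items():
        # repr() wraps values in quotes, so empty and whitespace-only inputs stay visible.
        preview = repr(value) if len(value) <= 40 else repr(value[:40]) + "..."
        print(f"{label:22} len={len(value):3} {preview}")
```

A plain dictionary keeps the list easy to extend when the AI (or your own curiosity) suggests new cases mid-session. You can also paste your results next to each label when you reach the summary step.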

If you follow this workflow, you still do the important thinking and observing, while the AI handles much of the grunt work of idea generation and documentation. It's a collaborative approach: you and the AI, each contributing what they do best.

Final Thoughts

Exploratory testing is a human-driven activity at its core. Your curiosity, experience, and intuition are what find the really interesting bugs. AI is there to assist that human element, not replace it. Think of AI as a high-tech tool in your backpack. It works like a metal detector that pings you about areas worth looking at, but you decide where to dig and how to interpret what you find. Used wisely, AI can boost your creativity, catch those "oh, I didn't think of that!" cases, and take on tedious documentation so you can focus on sleuthing out quality issues.

In short, pairing AI with exploratory testing can make your testing sessions more productive and more fun. You get the best of both worlds – human insight and creativity supported by machine speed and breadth of knowledge. So on your next exploratory testing adventure, bring along an AI sidekick. Happy exploring, and may your bugs be few and your discoveries plenty!

The insights above are drawn from experienced voices in testing and AI, including Xray’s guide on using generative AI for exploratory testing, HeadSpin’s and PractiTest’s tips on AI-driven test idea generation, and real-world examples of testers using ChatGPT to brainstorm edge cases and improve documentation. Exploratory testing concepts are based on established definitions and practices in the testing community. Enjoy experimenting with these ideas in your own workflow!