Editor’s note: This article was originally published in TechWell Agile Connection.

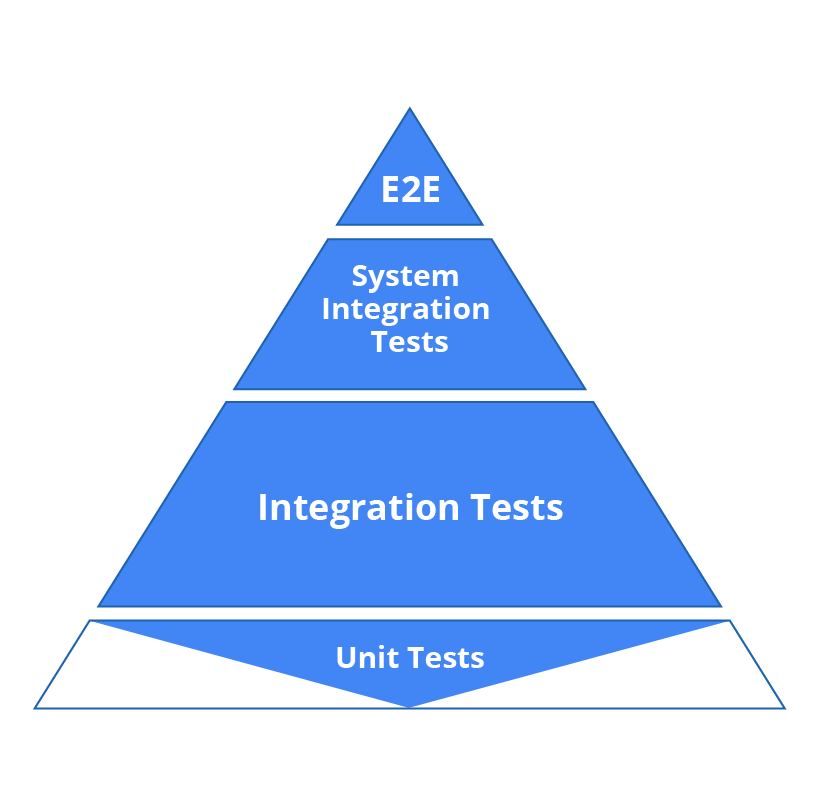

It’s hard to dispute that the test pyramid is the ideal model for Agile teams. Unit tests form a solid foundation for understanding whether new code is working correctly:

- They achieve high code coverage: The developer who wrote the code is uniquely qualified to cover that code as efficiently as possible. It’s easy for the responsible developer to understand what’s not yet covered and create test methods that fill the gaps.

- They are fast and “cheap”: Unit tests can be written quickly, execute in seconds, and require only simple test harnesses (versus the more extensive test environments needed for system tests).

- They are deterministic: When a unit test fails, it’s relatively easy to identify what code must be reviewed and fixed. It’s like looking for a needle in a handful of hay versus trying to find a needle in a heaping haystack.
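To make these properties concrete, here is a minimal sketch of a unit test written with Python's built-in `unittest` module. The function `apply_discount` and its tests are hypothetical and serve only as an illustration. Each test method checks one behavior of one small function and needs nothing beyond the interpreter, so the suite runs in milliseconds and a failure points straight at the code under test.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Each test exercises one behavior of one function, so a failure
    # narrows the search to apply_discount instead of a whole system.

    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(100.0, 10), 90.0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

If the block is saved as, say, `test_discount.py` (a hypothetical file name), `python -m unittest test_discount` runs all three tests.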

However, there’s a problem with this model: the bottom falls out when you shift from progression to regression testing. Your test pyramid becomes a diamond.

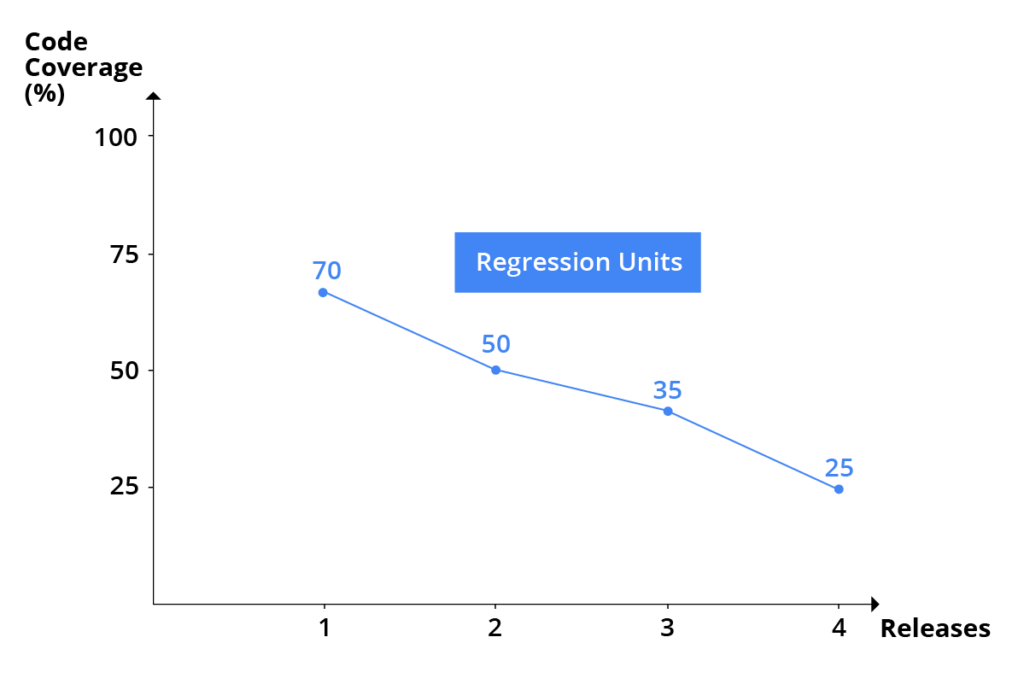

At least, that’s what surfaced in the data we recently collected when monitoring unit testing practices across mature Agile teams. In each sprint, developers are religious about writing the tests required to validate each user story. Typically, they have no choice: passing unit tests are a key part of the “definition of done.” By the end of most sprints, there’s a solid base of new unit tests that are critical for determining whether the new code is implemented correctly and meets expectations. These tests cover approximately 70% of the new code.

From the next sprint on, these tests become regression tests. Little by little, they start failing, eroding both the number of working unit tests at the base of the test pyramid and the level of risk coverage that the test suite once provided. After a few iterations, the same unit tests that once achieved 70% risk coverage provide only 50% coverage of that original functionality. This drops to 35% after several more iterations and typically degrades to 25% by the time six months have elapsed, which is barely more than a third of the coverage the tests originally provided.

This subtle erosion can be extremely dangerous if you’re fearlessly changing code, expecting those unit tests to serve as your safety net.

Why Unit Tests Erode

Unit tests erode for a number of reasons. Even though unit tests are theoretically more stable than other types of tests (e.g., UI tests), they too will inevitably start failing over time. Code gets extended, refactored, and repaired as the application evolves. In many cases, the implementation changes are significant enough to warrant unit test updates. In other cases, the code changes expose the fact that the original test methods and test harness were too tightly coupled to the technical implementation, which again requires unit test updates.

However, those updates aren’t always made. After developers check in the tests for a new user story, they’re under pressure to pick up and complete another user story. And another. And another. Each of those new user stories needs passing unit tests to be considered done, but what happens if the “old” user stories start failing? Usually, nothing. The developer who wrote that code has moved on, so he or she would need to get reacquainted with the long-forgotten code, diagnose why the test is failing, and figure out how to fix it. This isn’t trivial, and it can disrupt progress on the current sprint.

Frankly, unit test maintenance is a burden that many developers truly resent. Just scan Stack Overflow and similar communities and you’ll find countless developer frustrations related to unit test maintenance.

How to Stabilize the Erosion

I know that some exceptional organizations require (and allocate appropriate resources for) unit test upkeep. However, these tend to be organizations with the luxury of SDETs (software development engineers in test) and other development resources dedicated to testing. Many enterprises are already struggling to deliver the volume and scope of software that the business expects, and they simply can’t afford to shift development resources to additional testing.

If your organization lacks the development resources required for continuous unit test maintenance, what can you do? One option is to have testers compensate for the lost risk coverage through resilient tests that they can create and control. Professional testers recognize that designing and maintaining tests is their primary job, and that they are ultimately evaluated by the success and effectiveness of the test suite. Let’s be honest. Who’s more likely to keep tests current: the developers who are pressured to deliver more code faster, or the testers who are rewarded for finding major issues (or blamed for overlooking them)?

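In the most successful organizations we studied, testers offset the risk coverage lost to eroding unit tests by adding integration-level tests, primarily at the API level when feasible. Because API tests exercise the application through its interfaces rather than its internal implementation, they are less prone to the tight coupling that breaks unit tests. This enables testers to restore the eroding “change-detection safety net” without disrupting developers’ progress on the current sprint.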
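As a rough illustration of what an API-level test might look like, the sketch below uses only the Python standard library. To keep it self-contained, it starts a tiny stand-in HTTP service in a background thread; the `/orders/<id>` endpoint, the order data, and the `OrderHandler` class are all hypothetical. In practice, the tests would target the application's real API in a test environment, and many teams use dedicated API testing tools rather than hand-rolled `urllib` calls. What matters is that the tests assert only on the contract (status codes and JSON fields), so refactoring behind that interface does not break them.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class OrderHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the application's API."""

    ORDERS = {"42": {"id": "42", "status": "shipped", "total": 19.99}}

    def do_GET(self):
        order_id = self.path.rsplit("/", 1)[-1]
        order = self.ORDERS.get(order_id)
        body = json.dumps(order if order else {"error": "not found"}).encode()
        self.send_response(200 if order else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


class OrderApiTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Port 0 asks the OS for any free port.
        cls.server = HTTPServer(("127.0.0.1", 0), OrderHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_port}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def get(self, path):
        # Return (status code, parsed JSON body); urllib raises HTTPError
        # for 4xx/5xx responses, so unpack those too.
        try:
            with urllib.request.urlopen(self.base_url + path, timeout=5) as resp:
                return resp.status, json.loads(resp.read())
        except urllib.error.HTTPError as err:
            return err.code, json.loads(err.read())

    def test_existing_order_is_returned(self):
        status, body = self.get("/orders/42")
        self.assertEqual(status, 200)
        self.assertEqual(body["status"], "shipped")

    def test_unknown_order_returns_404(self):
        status, _ = self.get("/orders/999")
        self.assertEqual(status, 404)
```

Because these tests only know about URLs, status codes, and response fields, the code behind the endpoint can be restructured freely without touching them, which is what lets testers own and maintain them independently of the current sprint's development work.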