Below is a recap of our webinar with

Let's dive into the topic of continuous delivery and its impact on software development. You've likely come across this term before, perhaps through the book "Continuous Delivery," which Dave Farley co-authored with Jez Humble, or through the State of DevOps reports, which have extensively studied software development practices and found continuous delivery to be highly effective.

The book "Accelerate: The Science of Lean Software and DevOps," whose research was led by Nicole Forsgren, offers a rigorous analysis of software development practices. Forsgren, drawing on her academic background, applied robust statistical modeling techniques to uncover profound insights into software development.

One of the key findings of this research is the effectiveness of continuous delivery in improving software development processes.

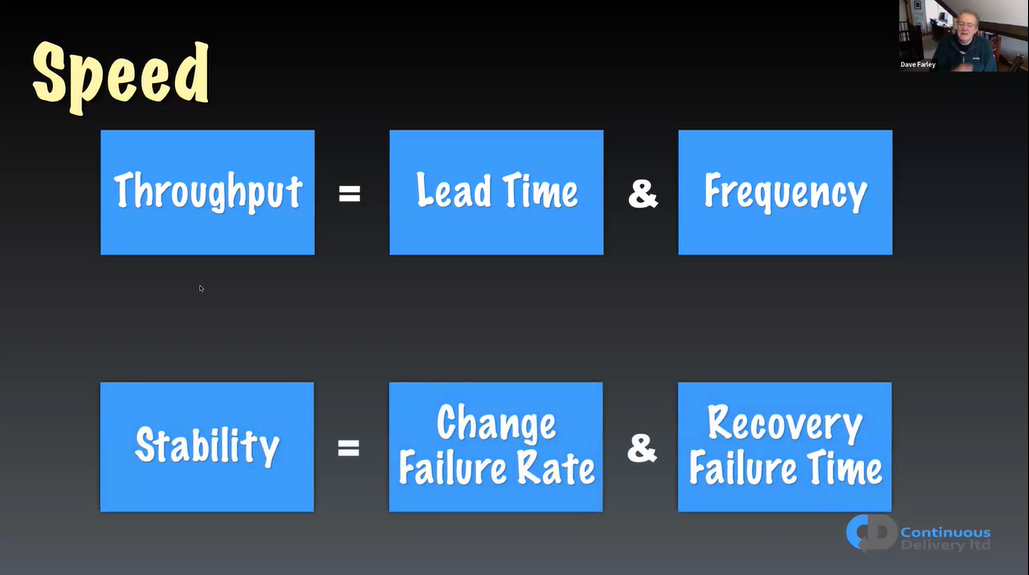

By choosing generic measures like throughput and stability, Forsgren and her team were able to provide valuable insights that apply across various industries and technologies.

Throughput is measured by lead time for changes and deployment frequency, which show how efficiently a team delivers. Stability is evaluated through the change failure rate and the time to restore service. These metrics serve as indicators of software quality, revealing the resilience and robustness of the development process.
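To make these measures concrete, here is a minimal sketch that computes them from a list of production deployments. It assumes the four measures named above: deployment frequency and lead time for changes (throughput), plus change failure rate and time to restore service (stability). The `Deployment` record, its fields, and the function names are hypothetical; real data would come from your version control, pipeline, and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record of one change reaching production.
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def four_key_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Summarize throughput and stability over a reporting period."""
    if not deploys:
        raise ValueError("need at least one deployment")
    n = len(deploys)
    # Throughput: how often we deploy, and how long a commit takes to reach users.
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deploys]
    # Stability: how often a change fails, and how quickly we recover.
    failures = [d for d in deploys if d.failed]
    restores = [hours(d.restored_at - d.deployed_at)
                for d in failures if d.restored_at is not None]
    return {
        "deploys_per_day": n / period_days,
        "mean_lead_time_hours": sum(lead_times) / n,
        "change_failure_rate": len(failures) / n,
        "mean_time_to_restore_hours": sum(restores) / len(restores) if restores else 0.0,
    }


# Usage: two deployments in one day, one of which failed and was fixed an hour later.
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0, t0 + timedelta(hours=4), failed=True, restored_at=t0 + timedelta(hours=5)),
]
print(four_key_metrics(deploys, period_days=1))
# {'deploys_per_day': 2.0, 'mean_lead_time_hours': 3.0, 'change_failure_rate': 0.5, 'mean_time_to_restore_hours': 1.0}
```

Note that none of these numbers mention a language, framework, or business domain, which is exactly why they can be compared across organizations.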

By examining efficiency and quality, we gain a comprehensive understanding of software development practices. These insights transcend specific technologies or business domains, offering universally applicable principles for development.

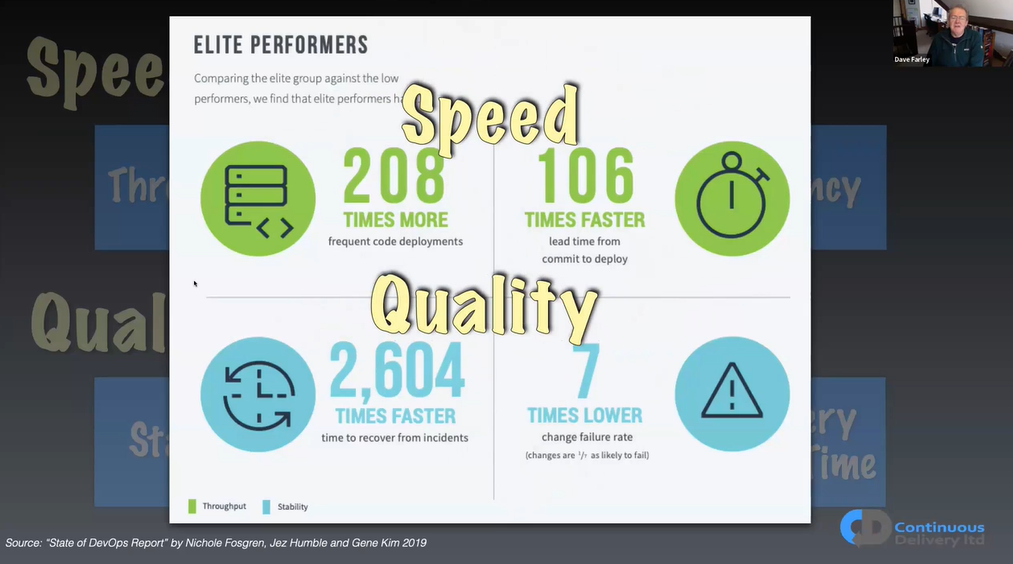

Among the findings is the identification of distinct performance tiers, with elite performers showcasing remarkable speed and quality. The gap between elite performers and low-performing counterparts is staggering, highlighting the profound impact of optimized development practices on overall outcomes.

What we're talking about here is how fast we work and how good our products turn out. The research shows that to work fast, we need our work to be of high quality, and vice versa: the two go hand in hand. That may be surprising, because many people assume you have to choose between working quickly and doing good work. The data says otherwise.

Think about it like this: if I rush through my work today and make mistakes, tomorrow's work will suffer because of those mistakes. So, if we want to work faster, we have to make sure our work is top-notch.

In continuous delivery, this means we make progress bit by bit, always checking to see if we're on the right track.

In simple words, speed and quality work together and reinforce each other as we build great software.

So there is no trade-off between speed and quality.

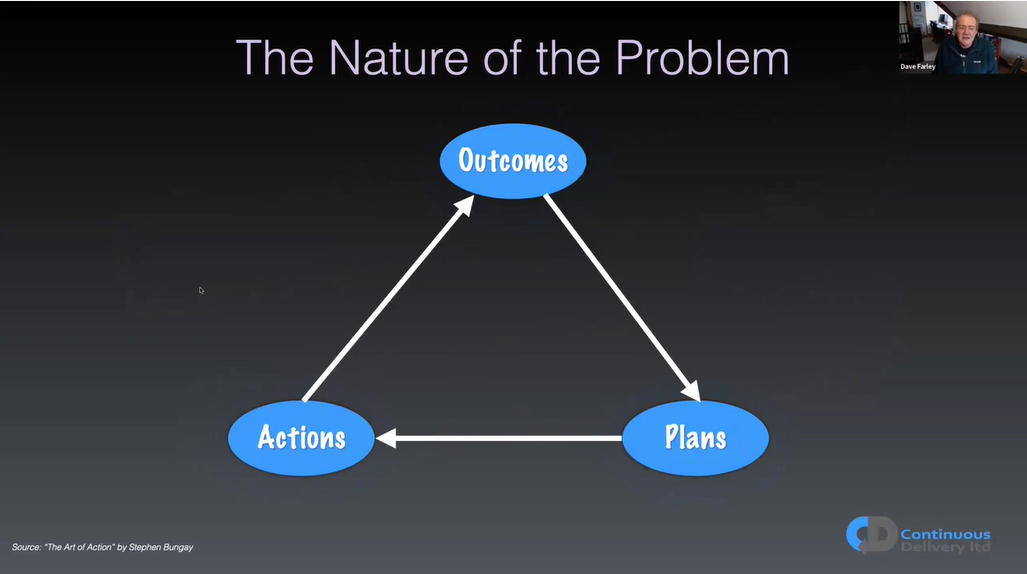

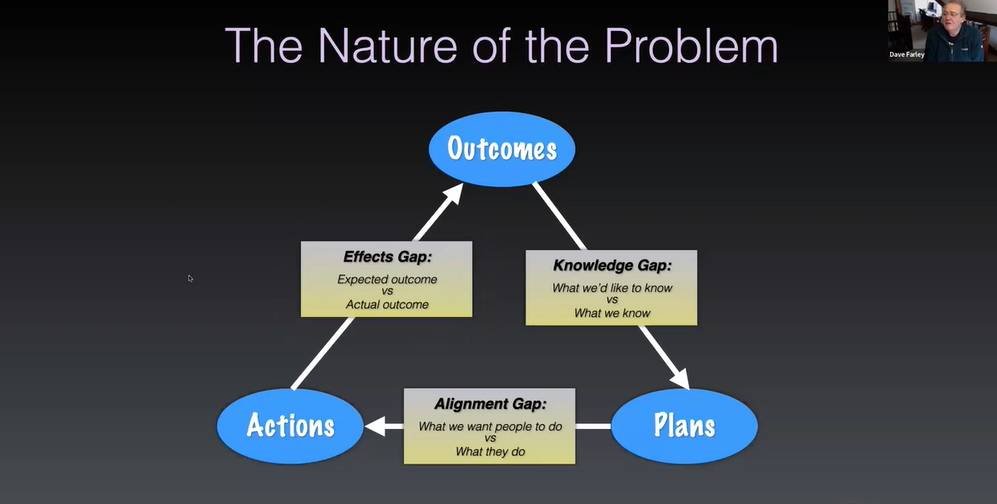

Another perspective on this matter stems from Stephen Bungay's book, "The Art of Action." Bungay posits that to attain a desired outcome, one must devise a plan.

This plan will entail a sequence of actions, ultimately aimed at realizing the desired outcome. However, inherent in this process are several challenges or "gaps."

The first is the knowledge gap: the difference between the information we would ideally need to formulate a robust plan and the information actually available. It's crucial to acknowledge that a perfect plan is unattainable, because our knowledge and foresight are inherently limited.

Then there's the gap between plans and actions, known as the alignment gap. It's about what we expect people to do compared to what they actually do. Sometimes, despite our plans, things change, and people take different actions.

Lastly, there's the effects gap, which is the gap between what we expected to happen and what actually happened. Since we can't predict the future perfectly, this gap is inevitable.

The traditional response to the knowledge gap is more planning, analysis, and detailed requirements. But this can slow things down and reduce quality, creating a cycle of slower progress.

Similarly, efforts to address the alignment gap often involve micromanaging people or implementing stricter processes, which also tend to slow things down and reduce quality.

As for the effects gap, some strategies involve managing expectations or increasing project management rigor. However, these approaches can also lead to slower progress.

So, what's the alternative? Instead of seeing speed and quality as trade-offs, we should view them as mutually reinforcing. To move quickly, we need high-quality systems, and to achieve higher quality, we should work in smaller, more frequent, and more incremental steps.

So, we can't completely get rid of these gaps, but we can work to make them smaller.

One way to make these gaps smaller is by speeding up the process. We do this by making the loop—the cycle of planning, acting, and seeing results—go faster. That's why working in small steps is important.

Continuous delivery is all about this idea of making progress in small steps. By taking small steps with good quality, we can learn and improve at each stage. This gives us more control over the process.

But to do this well, we need to be able to change our software safely and easily. Being able to change the software is a key measure of its quality. Without this ability, it doesn't matter how secure or resilient our software is—we won't be able to fix it or improve it easily.

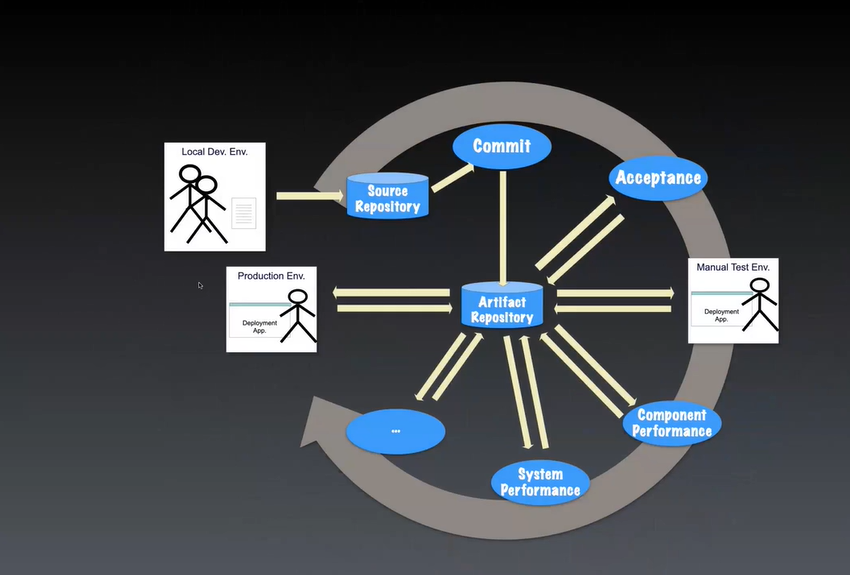

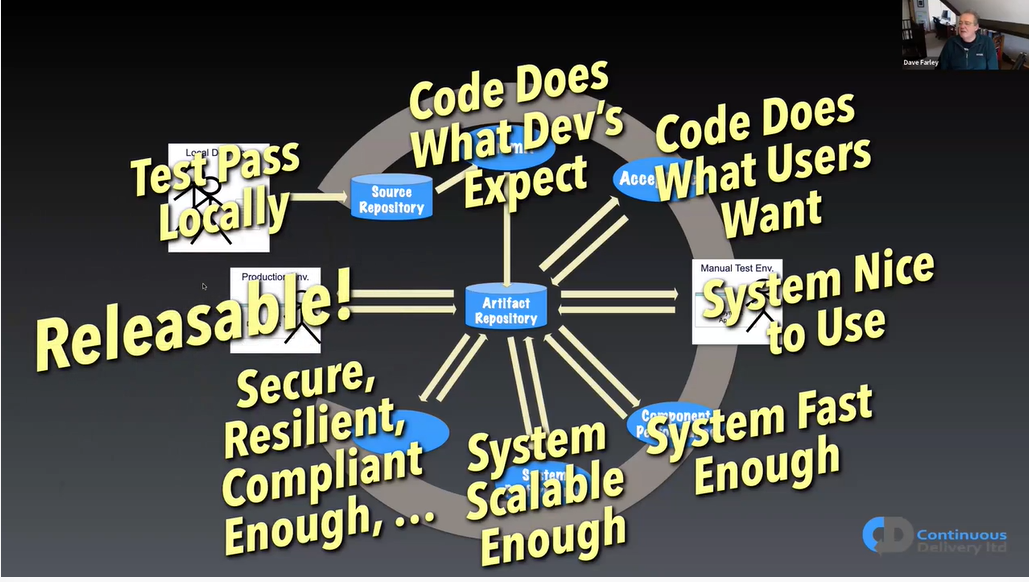

Continuous delivery is about making sure our software is always in a state where we could release it if we wanted to. This means it's good enough to put into production at any time. We use automation, like deployment pipelines, to help us know when our software is ready to release. These tools give us quick feedback on the readiness of our system for release.

To achieve that, development teams need quick feedback on their small changes. They use tests to make sure everything works as expected. First, they check if their changes pass tests on their own computers. Then, they do a more thorough check by running all the tests to catch any unexpected problems and ensure the code meets coding standards and security requirements.

But that's not all. They also need to make sure the code does what users want. So, they add more tests, like acceptance tests, to simulate how users interact with the software in real-life situations.

Finally, they want to make sure the software performs well and meets user needs, like being fast, secure, stable, and scalable.

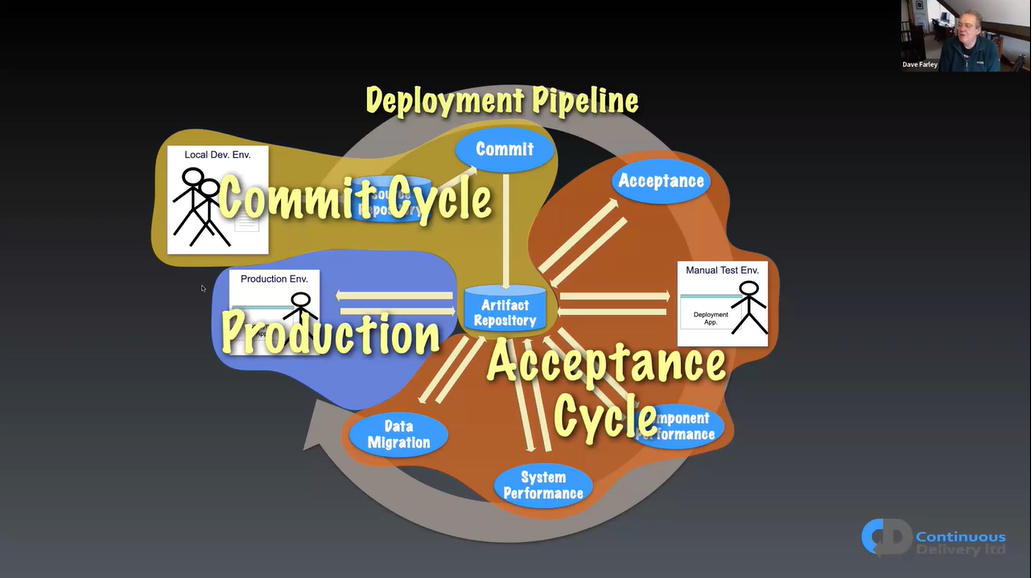

The deployment pipeline is like a machine that decides if our software is ready to release. We make changes, create a release candidate, and then check if it's good to go. If the pipeline says it's ready, that means we don't need any more work or approvals.
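Here is a minimal sketch of that decision logic, assuming a pipeline is simply an ordered list of stages, each of which either passes or rejects a release candidate. The stage names, the `ReleaseCandidate` type, and the `check` helper are hypothetical: here each check just looks up a pre-recorded result, whereas a real pipeline would actually run unit tests, static analysis, acceptance tests, and performance or security checks.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReleaseCandidate:
    # Hypothetical: a versioned build plus the result each check reported.
    version: str
    results: dict[str, bool] = field(default_factory=dict)


Stage = tuple[str, Callable[[ReleaseCandidate], bool]]


def check(name: str) -> Callable[[ReleaseCandidate], bool]:
    # Stand-in for a real check: a missing result counts as a failure.
    return lambda rc: rc.results.get(name, False)


# Fast, cheap checks first; slower, more thorough ones later.
PIPELINE: list[Stage] = [
    ("commit: unit tests", check("unit")),
    ("commit: static analysis", check("lint")),
    ("acceptance tests", check("acceptance")),
    ("performance & security", check("perf")),
]


def evaluate(rc: ReleaseCandidate, stages: list[Stage]) -> tuple[bool, str]:
    """Run stages in order; the first failing stage rejects the candidate."""
    for name, passes in stages:
        if not passes(rc):
            return False, f"{rc.version} rejected at stage '{name}'"
    # Passing every stage means no further work or approval is needed to release.
    return True, f"{rc.version} is releasable"


good = ReleaseCandidate("1.4.2", {"unit": True, "lint": True, "acceptance": True, "perf": True})
bad = ReleaseCandidate("1.4.3", {"unit": True, "lint": True, "acceptance": False})
print(evaluate(good, PIPELINE))  # (True, '1.4.2 is releasable')
print(evaluate(bad, PIPELINE))   # (False, "1.4.3 rejected at stage 'acceptance tests'")
```

Ordering the stages from fastest to slowest means most mistakes are reported within minutes, while a candidate that survives every stage can go to production without a separate sign-off.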

This process gives us confidence in our software because we test it thoroughly. Here, testing means automated tests, which act like blueprints for what our software should do. We write these tests before we write the code, a practice known as test-driven development, which is a smart way to build software.
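As a small illustration of writing the test first, here is a sketch using Python's built-in `unittest` module. The `apply_discount` function and its rules are hypothetical, invented only to show the order of work: the test class states the expected behavior, and the function is then written to satisfy it.

```python
import unittest


# Step 1: the tests, written first, act as the specification.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(200.0, percent=10), 180.0)

    def test_rejects_negative_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, percent=-5)


# Step 2: the simplest code that makes those tests pass.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100
```

Saved as a module, this runs with `python -m unittest <module>`. In a deployment pipeline, tests like these would typically run in the first, fastest stage.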

By doing this, we can learn and improve quickly. We use tools like deployment pipelines and automation to make changes, test them, and see if they work as expected. This lets us try out new ideas in a safe way.

Continuous delivery means always having software that's ready to release. This allows us to experiment and see what works on a small scale before releasing it to everyone.

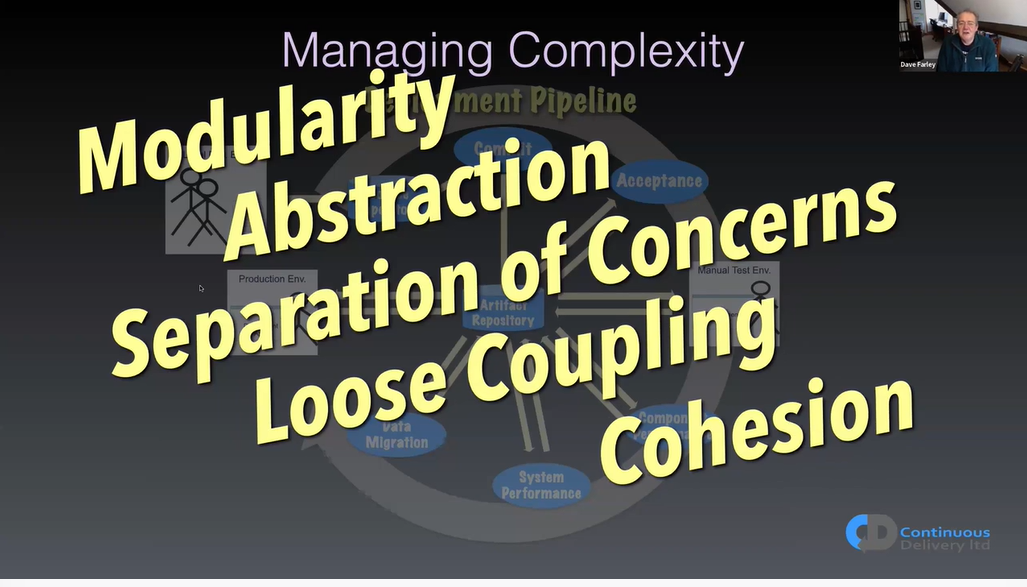

Engineering software is like solving a puzzle. It's complicated, so we need to be good at learning new things and managing this complexity. One way to manage complexity is by building modular systems—pieces of software that can be changed without affecting other parts.

To make our software easier to work with, we split it into smaller parts. These parts talk to each other without needing to know all the details of how the other parts work.

We make sure each part of the system focuses on doing one thing really well. This helps keep things organized.

We also manage how tightly these parts are connected. We prefer them to be loosely connected when it makes sense. And we keep related stuff in the code close together.
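Here is a small Python sketch of these ideas. The `PaymentGateway` protocol, `CheckoutService`, and `FakeGateway` are hypothetical names. Checkout focuses on one job and depends only on a narrow interface, so the payment part can be swapped (for example, for a fake in fast tests) without touching the checkout code.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    # All checkout needs to know about payments: this one method.
    def charge(self, customer_id: str, amount_cents: int) -> bool: ...


class CheckoutService:
    """Does one thing: turns a cart into a charged order."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self._gateway = gateway  # depends on the interface, not a concrete class

    def checkout(self, customer_id: str, item_prices_cents: list[int]) -> str:
        total = sum(item_prices_cents)
        if total <= 0:
            return "empty cart"
        return "paid" if self._gateway.charge(customer_id, total) else "declined"


class FakeGateway:
    # Matches PaymentGateway structurally, without inheriting from it.
    def __init__(self, approve: bool) -> None:
        self.approve = approve
        self.charges: list[tuple[str, int]] = []

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        self.charges.append((customer_id, amount_cents))
        return self.approve


gw = FakeGateway(approve=True)
print(CheckoutService(gw).checkout("c-42", [1999, 500]))  # paid
print(gw.charges)  # [('c-42', 2499)]
```

Because `CheckoutService` never sees how charging works, a real payment module can change its internals freely, which is what keeps coupling loose.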

The deployment pipeline follows these same principles, which helps us manage our software better.

Here's how it works: First, we make a change to our software, whether it's adding a new feature, fixing a bug, or making an improvement. Then, we quickly check to see if the change looks okay—this is like a quick test to make sure nothing obvious is broken.

But that quick check isn't enough to make sure our software is ready to go live. So, we move on to the next step.

In this next step, we put our change through more thorough tests. These tests are like a safety net, making sure our software works well, performs smoothly, and is secure enough to be released to users. We might check how fast it runs, how well it can handle lots of users at once, and if it's safe from hackers.

If all these tests pass, it means our change is ready to go live. We can choose when to release it into the real world, whether it's right away or at a later time that's more convenient. When we do release it, we might use different techniques to make sure everything goes smoothly—for example, we might release it gradually to different groups of users or keep a close eye on it once it's out there.
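A gradual release is often implemented as a percentage rollout. One common technique, shown here as an illustrative assumption rather than any specific product's mechanism, hashes each user ID into one of 100 stable buckets and shows the new version only to users whose bucket is below the current rollout percentage. The `bucket` and `sees_new_version` functions and the feature name are hypothetical.

```python
import hashlib


def bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in 0..99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def sees_new_version(user_id: str, feature: str, rollout_percent: int) -> bool:
    # Same user and feature always give the same answer, so nobody flips
    # back and forth between versions while the rollout is in progress.
    return bucket(user_id, feature) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
exposed = sum(sees_new_version(u, "new-checkout", 5) for u in users)
print(exposed)  # roughly 500, i.e. about 5% of users
```

Because buckets are stable, raising the percentage from 5 to 20 keeps the original group and only adds users, so problems can be caught while few people are affected.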

Each step in this process—making changes, testing them, and releasing them—has its own cycle. The first cycle is about making and checking changes. The second is about testing for release readiness. And the last one is about getting the software into production and supporting it there.

Continuous delivery is about making this whole process better and faster. It's about improving our software and getting it to users quickly so they can benefit from the latest updates and improvements.

Continuous delivery at Amazon & Tesla

Let's take a closer look at how Amazon and Tesla embrace continuous delivery practices to enhance their operations.

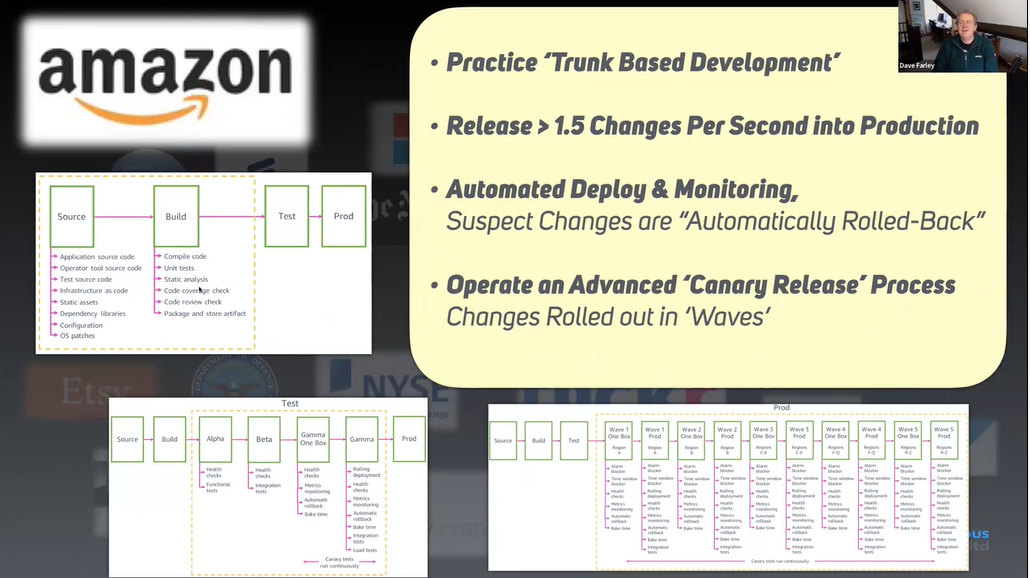

Amazon is renowned for its commitment to continuous delivery.

They follow a method called trunk-based development and impressively release about 1.5 changes per second into production. Their approach includes automated deployment and monitoring for every change made to production. If anything seems amiss, they automatically roll back the change to ensure smooth operations. Amazon also incorporates experimentation into their deployment process. They include a description of the experiment they're conducting and how they measure its success. If the experiment doesn't meet their success criteria, such as generating more revenue or increasing sign-ups, they roll back the change.
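The webinar doesn't describe Amazon's tooling in detail, so the following is only a hypothetical sketch of the kind of decision described: a health guardrail that triggers an automatic rollback, followed by a check against the experiment's stated success criterion. The `Experiment` type, the metric names, and the thresholds are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    # Hypothetical description shipped alongside a deployment.
    description: str
    metric: str        # e.g. "signup_rate"
    min_uplift: float  # required relative improvement, e.g. 0.02 for +2%


def decide(baseline: dict[str, float], candidate: dict[str, float],
           exp: Experiment, max_error_rate: float = 0.01) -> str:
    # 1. Health guardrail: roll back at once if the change looks broken.
    if candidate["error_rate"] > max_error_rate:
        return "rollback: error rate above threshold"
    # 2. Experiment check: keep the change only if it met its success criterion.
    before, after = baseline[exp.metric], candidate[exp.metric]
    uplift = (after - before) / before
    if uplift < exp.min_uplift:
        return f"rollback: {exp.metric} uplift {uplift:.1%} below target {exp.min_uplift:.1%}"
    return "keep"


exp = Experiment("Shorter sign-up form", metric="signup_rate", min_uplift=0.02)
base = {"error_rate": 0.002, "signup_rate": 0.050}
print(decide(base, {"error_rate": 0.003, "signup_rate": 0.053}, exp))   # keep
print(decide(base, {"error_rate": 0.003, "signup_rate": 0.0505}, exp))  # rollback: signup_rate uplift 1.0% below target 2.0%
print(decide(base, {"error_rate": 0.050, "signup_rate": 0.060}, exp))   # rollback: error rate above threshold
```

Checking health before business metrics means a broken change is rolled back even if it happens to look good on the experiment's metric.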

Similarly, Tesla adopts a continuous delivery approach throughout its operations.

Picture the Tesla factory as a colossal robot controlled by software, operating under continuous delivery principles. From the software in the cars to the hardware and the factory itself, all aspects are developed using continuous delivery practices.

One intriguing story from Tesla involves Joe Justice, who worked there at the time. Joe and his team embarked on a mission to increase the maximum charge rate of a Tesla Model 3 from 200 to 250 kilowatts. They conducted experiments, initially testing various manual adjustments. Eventually, they identified the necessary changes to the car's charging circuit, including increasing the diameter of some copper rods and rerouting the circuit design. After implementing these changes in the car's software and conducting thorough testing, they achieved success. Within a single day, including manual experimentation and software updates, the production line was producing new cars with the upgraded maximum charge rate.

This story underscores the power of applying engineering principles in software development. By prioritizing high-quality work and maintaining the flexibility to adapt software easily, continuous delivery enables remarkable achievements like producing better software faster.

Check out the webinar recording to explore more examples of companies implementing continuous delivery approaches.

Continuous delivery simply builds better software faster. That's what it does.