Below is a recap of our webinar with industry expert Scott Moore.

Load testing is pivotal for integrating performance considerations early in the software development process. Unlike functional testing, load testing focuses on efficiency, resource utilization, and error prevention when many users are active at the same time.

Load testing requires a unique mindset, centered on understanding technology stacks and protocols rather than graphical user interfaces.

Process Model Overview

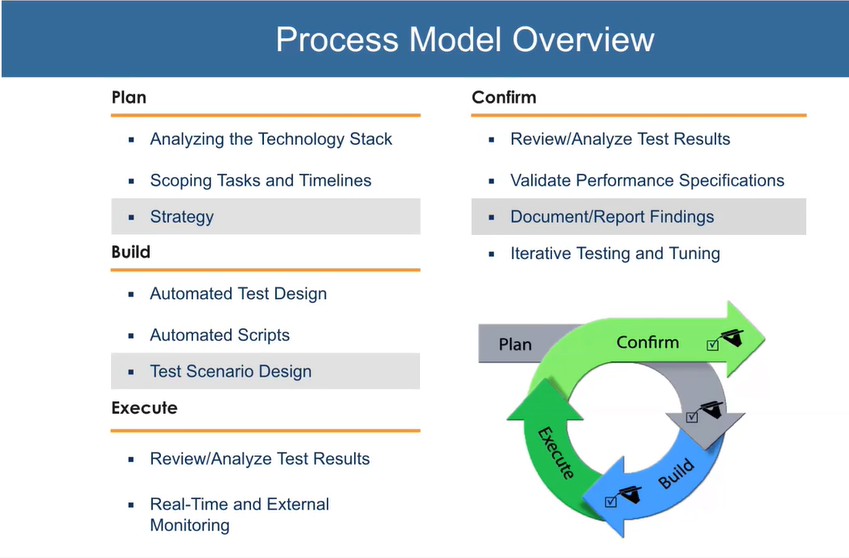

The load testing process is simplified into a four-step model: planning, building, executing, and confirming.

- Planning: In this stage, the focus is on determining the worst-case scenario under realistic conditions. The goal is to identify the perfect storm that could occur in real-life situations. Research on the application's makeup, scope, and time constraints is essential. The 80-20 rule is important here: aim to test the most impactful processes.

- Building: Once the plan is set, the next step involves getting sign-off and building automated tests for the identified business processes. This includes setting up test scenarios to simulate the identified perfect storm. The build stage encompasses all preparations leading up to initiating the load test, including the setup of monitoring.

- Executing: The execution stage involves running the load test and analyzing the results. Real-time monitors and external tools are used to assess whether the application meets its operational and performance requirements.

- Confirming: The final step is confirming whether the load test results align with the business goals. Documentation is crucial, ensuring that the results tell a coherent story rather than being presented as raw charts and graphs. The entire process is cyclical, encouraging repetition for continuous improvement.

The model is applicable not only to large-scale integrated load tests but also at a granular level, such as for developers conducting quick load tests for specific functions.

Making Goals Measurable

A common challenge in many companies is the lack of agreement on a load test's goals. Don’t attempt to address multiple objectives in a single load test; focus on specific aspects instead. A key decision is whether to prioritize the application software or the hardware and infrastructure.

The main metrics to measure during a load test include:

- System Capacity: Determining the number of concurrent users the system can handle.

- User/System Experience: Assessing user experience or system operations, monitoring transaction numbers or error rates.

- Response Time: Evaluating the end-to-end response time from an end user's perspective, including browser rendering times.

- Infrastructure Costs: Monitoring CPU, memory, disk, and network resources to ensure they remain plentiful under maximum load.

- Error and Defect Thresholds: Establishing acceptable error thresholds, tailored to the context of the application and its regulatory requirements.

Scott encourages setting one or, at most, two objectives for a load test, as achieving those may naturally address additional considerations.

Good Performance Requirements

Effective requirements make it possible to report clearly whether a test passed or failed. Avoid vague requirements like "we want it to be fast" and specify measurable criteria instead. Examples include response times under certain load conditions, resource utilization metrics (e.g., CPU utilization or queuing), and failure rates.
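One way to make requirements like these testable is to encode each one as a named threshold and compare it with the measured result. The sketch below is illustrative only: the metric names, limits, and measurements are assumptions made up for the example, not figures from the webinar.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """A measurable requirement: the metric must not exceed `limit`."""
    metric: str
    limit: float
    unit: str


def evaluate(requirements, measured):
    """Return a list of (requirement, measured value, passed) tuples.

    A metric missing from `measured` counts as a failure, because a
    requirement that was never measured cannot be reported as a pass.
    """
    results = []
    for req in requirements:
        value = measured.get(req.metric)
        passed = value is not None and value <= req.limit
        results.append((req, value, passed))
    return results


# Hypothetical requirements and measurements, for illustration only.
requirements = [
    Requirement("p95_response_time", 2.0, "s"),
    Requirement("cpu_utilization", 75.0, "%"),
    Requirement("error_rate", 1.0, "%"),
]
measured = {"p95_response_time": 1.6, "cpu_utilization": 82.0, "error_rate": 0.4}

results = evaluate(requirements, measured)
for req, value, passed in results:
    status = "PASS" if passed else "FAIL"
    print(f"{status}  {req.metric}: {value} {req.unit} (limit {req.limit} {req.unit})")

overall_pass = all(passed for _, _, passed in results)
print("Overall:", "PASS" if overall_pass else "FAIL")
```

With requirements written this way, the report can state a pass or fail per criterion instead of leaving the reader to interpret raw charts.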

What to Test

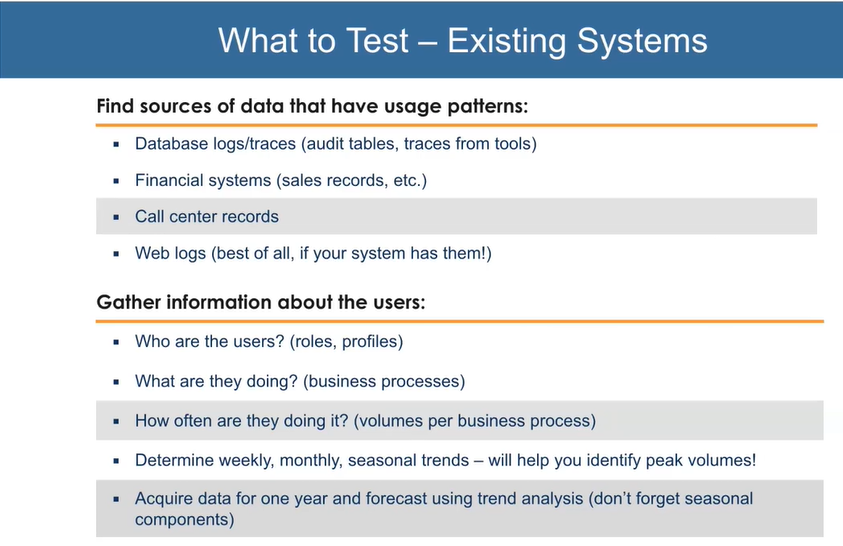

When deciding what to load test, avoid blindly inheriting scripts from other testing teams. Inherited scripts may not reflect actual user behavior, which lets issues slip through to production.

What to Test - New Systems

To determine what to test, obtain information from various sources, including web server logs, call center interactions, and discussions with functional QA teams. Key questions include identifying user roles, understanding user actions, determining frequency, and recognizing any patterns or trends.
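As a rough illustration of mining web server logs for user actions and their frequency, the sketch below counts requests per method and path. It assumes logs in Common Log Format; the sample lines and paths are hypothetical, and a real log may need a different pattern.

```python
import re
from collections import Counter

# Matches the request part of a Common Log Format line, e.g. "GET /cart HTTP/1.1".
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+"')


def count_requests(log_lines):
    """Count requests per (method, path), ignoring query strings."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match is None:
            continue  # skip lines that are not in the expected format
        path = match.group("path").split("?", 1)[0]
        counts[(match.group("method"), path)] += 1
    return counts


# Hypothetical log excerpt, for illustration only.
sample_log = [
    '10.0.0.1 - - [01/Mar/2024:09:00:01 +0000] "GET /search?q=shoes HTTP/1.1" 200 512',
    '10.0.0.2 - - [01/Mar/2024:09:00:02 +0000] "GET /search?q=hats HTTP/1.1" 200 498',
    '10.0.0.1 - - [01/Mar/2024:09:00:05 +0000] "POST /checkout HTTP/1.1" 302 0',
    "malformed line",
]

counts = count_requests(sample_log)
for (method, path), hits in counts.most_common():
    print(f"{hits:>4}  {method} {path}")
```

The resulting frequency table is only a starting point; it still needs to be checked against what call center staff and functional QA teams report about real usage.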

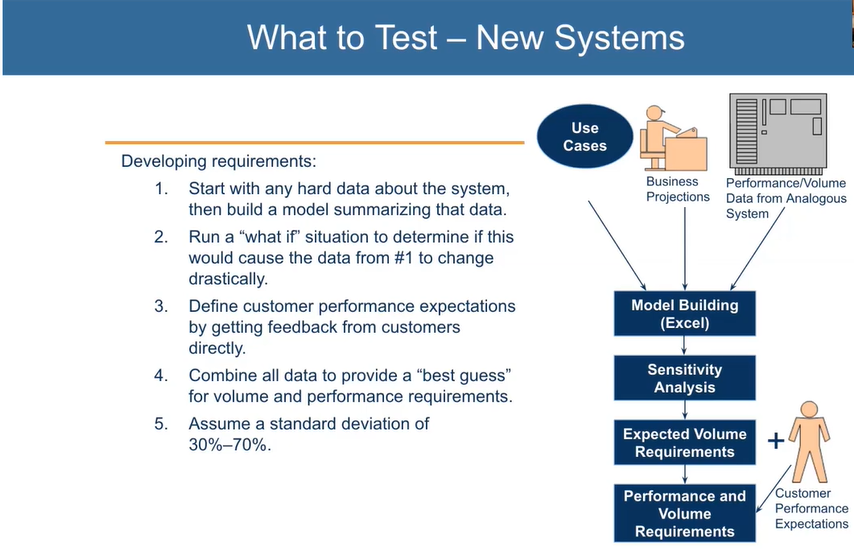

In the absence of existing records, Scott Moore advises starting load testing by leveraging available knowledge about the system and seeking insights from similar systems used by other companies. He encourages communication with business analysts to understand the high-level objectives and expectations for the application.

For systems with limited information, it is recommended to assume a bigger deviation (e.g., 70% instead of the typical 30%) when estimating user loads.
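To show what a wider deviation does to the numbers, here is a small worked example. It assumes the deviation is applied as a plus-or-minus percentage around the estimated user count, which is one possible reading of the advice; the estimate of 1,000 users is hypothetical.

```python
import math


def planning_range(estimated_users, deviation_pct):
    """Return (low, high) user counts for an estimate with +/- deviation_pct."""
    low = math.floor(estimated_users * (100 - deviation_pct) / 100)
    high = math.ceil(estimated_users * (100 + deviation_pct) / 100)
    return low, high


# Hypothetical estimate of 1,000 users, for illustration only.
typical = planning_range(1000, 30)   # well-understood system
cautious = planning_range(1000, 70)  # system with limited information

print("30% deviation:", typical)
print("70% deviation:", cautious)
```

Under this reading, the same estimate means planning for up to 1,300 users in the typical case and up to 1,700 when little is known about the system.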

Business Process Prioritization

Scott Moore suggests a practical approach to streamline load testing by applying the 80-20 rule. Focus on critical business processes that, if impacted under load, pose the most significant risks. By prioritizing tasks based on their impact on transactions and considering backend costs, a prioritized list of essential test scenarios can be generated. The scope of testing should align with available time, allowing for efficient and strategic load testing. This approach aims to identify key scenarios and make informed decisions about what to include or exclude from the testing process.
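The 80-20 selection can be sketched as a simple ranking. The example below ranks processes by transaction volume only and keeps the top ones until roughly 80% of the volume is covered; risk and backend cost, which the approach also weighs, are left out for brevity. The process names and volumes are hypothetical.

```python
def prioritize(processes, coverage_pct=80):
    """Pick the highest-volume processes until coverage_pct of total volume is reached.

    `processes` maps a process name to its transaction volume.
    Returns (selected names, percentage of volume actually covered).
    """
    total = sum(processes.values())
    ranked = sorted(processes.items(), key=lambda item: item[1], reverse=True)
    selected, covered = [], 0
    for name, volume in ranked:
        if covered * 100 >= coverage_pct * total:
            break  # target coverage already reached
        selected.append(name)
        covered += volume
    return selected, covered * 100 / total


# Hypothetical hourly transaction volumes, for illustration only.
volumes = {
    "search": 4500,
    "view_product": 2500,
    "checkout": 1500,
    "update_profile": 1000,
    "export_report": 500,
}

selected, covered_pct = prioritize(volumes)
print(selected, f"{covered_pct:.0f}% of volume")
```

A fuller version would multiply volume by a risk or cost weight before ranking, so that a low-volume but expensive process such as a report export is not dropped automatically.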

Concurrency

Scott Moore discusses three levels of concurrency in load testing: application level, business process level, and transactional level.

Don’t assume you need the maximum user license based solely on the total user count; you must first understand the concurrency levels. Application-level concurrency considers how many users are active on the system at any given time. The business process level looks at how many users engage in specific processes simultaneously. The transactional level involves the exact timing of user actions, like logging in at the same time.
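To illustrate the gap between total users and application-level concurrency, the sketch below computes the peak number of overlapping sessions from session start and end times. The session data is hypothetical, and the times are plain minute offsets chosen for the example.

```python
def peak_concurrency(sessions):
    """Return the maximum number of sessions active at the same moment.

    `sessions` is a list of (start, end) pairs. A session that ends at
    time t is not counted as overlapping one that starts at time t.
    """
    events = []
    for start, end in sessions:
        events.append((start, 1))   # a session opens
        events.append((end, -1))    # a session closes
    # Tuples sort by time first, then delta, so closes (-1) come before
    # opens (+1) at the same timestamp.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


# Hypothetical sessions as (start minute, end minute), for illustration only.
sessions = [(0, 30), (10, 40), (20, 25), (30, 60), (45, 50)]

peak = peak_concurrency(sessions)
print(f"{len(sessions)} users in total, peak concurrency {peak}")
```

Running the same calculation on sessions filtered to one business process gives the business-process-level figure.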

Every Tier is a Potential Bottleneck

In load testing, identifying bottlenecks involves examining both the left-hand side (client perspective) and the right-hand side (server-side infrastructure and application stack). While the left side may have limited control due to diverse user devices, the right side encompasses various components that can be sources of bottlenecks.

At a minimum, monitor both the infrastructure and the application stack during load so that you can correlate them with the test results. Bottlenecks can originate from different sources, such as load balancer misconfigurations or untuned databases. Continuous monitoring is needed to trace transaction times through the entire tech stack and identify where the system spends the most time.
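Once per-tier timings are available, finding where the system spends the most time is a matter of aggregation. The sketch below assumes timings have already been collected as (tier, elapsed milliseconds) pairs; the tier names and numbers are hypothetical and do not come from any particular monitoring tool.

```python
from collections import defaultdict


def time_by_tier(spans):
    """Sum elapsed milliseconds per tier.

    Returns (tier, total_ms, share_of_total) rows, slowest tier first.
    """
    if not spans:
        return []
    totals = defaultdict(int)
    for tier, elapsed_ms in spans:
        totals[tier] += elapsed_ms
    grand_total = sum(totals.values())
    rows = [(tier, ms, ms / grand_total) for tier, ms in totals.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical timings for two transactions, for illustration only.
spans = [
    ("load_balancer", 5), ("web_server", 40), ("app_server", 120), ("database", 310),
    ("load_balancer", 5), ("web_server", 35), ("app_server", 110), ("database", 375),
]

rows = time_by_tier(spans)
for tier, total_ms, share in rows:
    print(f"{tier:<14} {total_ms:>5} ms  {share:.1%}")
```

In this made-up data the database accounts for most of the elapsed time, so it would be the first tier to investigate.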

Monitoring Best Practice

Start your monitoring at a high level and focus initially on key metrics: CPU, disk, memory, and network. Use specialized monitoring tools for database-specific metrics, and take advantage of observability products. It is important to identify patterns in the monitoring data and adjust as needed during the testing process. Collaboration within the team is crucial here, and consolidated access to monitoring data during testing is a powerful and often underutilized practice.

Scott Moore discusses typical monitors for load testing, highlighting perfmon for Windows systems and various tools like iostat and vmstat for Unix/Linux. He notes the availability of observability tools for web servers, database servers, and network traces, suggesting their use in testing environments. Scott emphasizes the importance of shifting observability left in the development process.

Data Issues That Hinder Test Schedule

Test data must be handled carefully in load testing, particularly when third-party data is involved. Common issues include insufficient user accounts, wrong permissions, and errors caused by sporadic data inconsistencies. Verifying data files thoroughly, creating sufficient test data, and aligning the test environment with production are crucial considerations. Data integrity also depends on being able to restore databases to their original state after a load test. Further advice includes protecting sensitive information, employing service virtualization for third-party calls, and accounting for delays to make test scenarios more realistic.
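Data file verification can be automated before the test run. The sketch below checks a CSV of test accounts for blank fields, duplicate usernames, and too few unique accounts for the planned number of virtual users. The column names and sample rows are hypothetical, and line numbers assume one record per line.

```python
import csv
import io


def verify_accounts(csv_text, required_fields, virtual_users):
    """Return a list of human-readable problems found in the account data."""
    reader = csv.DictReader(io.StringIO(csv_text))
    problems = []
    seen = set()
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        for field in required_fields:
            if not (row.get(field) or "").strip():
                problems.append(f"line {line_no}: missing {field}")
        username = (row.get("username") or "").strip()
        if username:
            if username in seen:
                problems.append(f"line {line_no}: duplicate username {username}")
            seen.add(username)
    if len(seen) < virtual_users:
        problems.append(
            f"only {len(seen)} unique accounts for {virtual_users} virtual users"
        )
    return problems


# Hypothetical data file contents, for illustration only.
sample_csv = "username,password,role\nalice,pw1,buyer\nbob,,buyer\nalice,pw3,admin\n"

problems = verify_accounts(sample_csv, ["username", "password", "role"], virtual_users=3)
for problem in problems:
    print(problem)
```

Catching these problems before execution avoids losing a scheduled test window to failures that have nothing to do with performance.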

Types of Load Tests

Scott Moore introduces a comprehensive methodology for load testing, emphasizing the significance of realistic business processes. The methodology includes a baseline test for a single user, a top-time transaction test at 20% capacity, a benchmark test for 100% capacity, a scalability test at 120%, a volume or soak test for 8-10 hours, and a stress test to identify the first point of failure.
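The percentages above translate directly into user counts once a 100% load figure is agreed. The sketch below does that arithmetic for the four test types with a stated load level; the figure of 500 users is hypothetical. The soak and stress tests are left out because the soak test is defined by duration and the stress test by ramping until the first failure.

```python
import math


def build_load_plan(peak_users):
    """Return (test type, virtual users) for the tests with a fixed load level.

    `peak_users` is the agreed 100% load for the benchmark test.
    """
    return [
        ("baseline", 1),
        ("top-time transaction", math.ceil(peak_users * 20 / 100)),
        ("benchmark", peak_users),
        ("scalability", math.ceil(peak_users * 120 / 100)),
    ]


# Hypothetical 100% load of 500 users, for illustration only.
plan = build_load_plan(500)
for test_type, users in plan:
    print(f"{test_type:<22} {users:>4} users")
```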

Reporting is very important here. Scott suggests both agile, dashboard-style reporting and more integrated, actionable analysis for executive understanding. Don’t forget to use the storytelling aspect of performance analysis to communicate the value of load testing effectively. The true value lies in the analysis and not just the creation of scripts or automation. The analysis should be presented in a way that aids business decision-making, acknowledging that results have a lifespan and may need reevaluation with application changes.

About Scott:

With over 30 years of IT experience across various platforms and technologies, Scott is an active writer, speaker, influencer, and the host of multiple online video series. These include “The Performance Tour”, “DevOps Driving”, “The Security Champions”, and the SMC Journal podcast. He helps clients address complex issues concerning software engineering, performance, digital experience, observability, DevSecOps, and AIOps.
