In today's world, whether you're on social media or diving into the tech universe, one term that resonates consistently is AI. It is not just technology; it's the art of empowering machines to think, learn, and innovate—ushering in a new era of boundless possibilities.

In every domain, improving quality is crucial, and to achieve this, we conduct end-to-end testing, addressing all aspects of the testing cycle. In this post, we'll explore how AI can assist us in testing, enhancing product quality with smart strategies. But before we plunge into the exciting exploration of Quality Assurance and analyse how AI transforms End-to-End Testing, let's get a few basics in place.

Basics of AI, ML, and Generative AI

Before we dive into discussing the use of AI in testing, let's explore some complex terms that form the basis of AI. People often mix up terms like AI, ML, Generative AI, Code Generation, and LLMs, causing confusion. So, let's take a moment to clear up this confusion and untangle these concepts.

Each term has its own superpower, playing a unique role in the tech story. Understanding them is like having a treasure map to unlock the wonders of AI in testing. So, grab your curiosity, and let's embark on a journey to demystify these terms and witness the magic they bring to the world of Quality Assurance!

AI (Artificial Intelligence) & ML

AI is a very large field in which we develop intelligent systems that can perform tasks that generally require human intelligence. Machine learning (ML) is a subset of AI that focuses on algorithms and models that enable computers to learn from data and make predictions or decisions based on it.

Generative AI

Generative AI is a cool part of AI that makes new stuff like text, images, or code using smart learning tricks. Our favourite generative AI tool, ChatGPT, is a star we all know about. Generative AI covers different kinds of models, such as Large Language Models (LLMs), Generative Adversarial Networks (GANs), and Variational Autoencoders (VAEs).

Code Generation

These tools are smart programs that use AI, especially generative AI, to produce code snippets, functions, or even whole programs with little effort on your part. They learn from existing code and create new code that follows similar patterns. The cool thing is, they can plug into your development environment (IDE) to make coding even easier.

LLMs (Large Language Models)

These are AI models that are really good at producing human-like text. Think of them as super-smart models trained on tons of text, which we can fine-tune to do different jobs, like creating code. One example of an LLM is GPT-3, which can help write code or make documents sound great.

In summary, AI is the overarching field that encompasses various subfields, including ML and generative AI. Generative AI includes techniques like LLMs and GANs, and LLMs in particular are what code generation tools rely on to create code snippets automatically. All these components are interconnected and contribute to the advancement of AI-driven capabilities.

In the upcoming part, let's explore how AI comes to the rescue at various stages of the testing journey. Whether it's the initial unit testing, the middle-ground integration testing, or the broader system testing, AI steps in to lend a helping hand. We'll uncover the unique ways AI contributes to each testing phase, making the entire testing process more efficient and effective.

Transforming Unit and Integration Testing with AI Powered Code Generation Tools

The current methodology of writing unit and integration tests faces several drawbacks, leading to potential gaps in test coverage. One significant limitation is the challenge of achieving comprehensive coverage, especially when teams are constrained by tight project timelines or limited bandwidth.

The rush to meet deadlines often results in either the omission of certain unit tests or a lack of thorough coverage in integration testing. This, in turn, leaves the software susceptible to undetected defects and vulnerabilities. However, the integration of AI in collaboration with engineers proves to be a game-changer. AI-driven tools, when coupled with coverage analysis tools like SonarQube, work synergistically to identify areas with insufficient test coverage.

By understanding the application's context and behaviour, AI assists engineers in automatically generating relevant unit and integration tests, ensuring a more robust and complete testing suite. This collaboration mitigates the drawbacks of the conventional approach and empowers teams to achieve higher test coverage and, consequently, deliver more reliable and resilient software products.

Creating Unit Tests Using Code Generation Tools

Creating unit tests is a crucial aspect of ensuring the reliability and functionality of software components. Code generation tools, such as GitHub Copilot and CodeWhisperer, revolutionise the creation of unit tests by automating the generation of test code based on contextual understanding. These tools analyse the codebase and context, intelligently suggesting and generating unit test cases that verify the correctness of functions and methods. This not only accelerates the unit testing process but also promotes consistency in test coverage. With the assistance of code generation tools, developers can efficiently create robust unit tests, reducing the likelihood of bugs and ensuring the maintainability of codebases throughout the software development lifecycle.

EXAMPLE:

Let's attempt to write unit tests for a function that calculates the sum of two numbers. To achieve this, you have two options: either utilise Copilot, which can be seamlessly integrated into IDEs like IntelliJ, and then use prompts to generate all the unit tests, or employ the prompt feature in GPT. The recommended approach is to leverage code generation tools for an efficient and convenient process.

The prompt plays a crucial role when using Generative AI. By employing a prompt such as "Write a JUnit test for a function that calculates the sum of two numbers with maximum coverage," we can swiftly generate a comprehensive list of unit tests within seconds. The screenshot below showcases the response to the aforementioned prompt:
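As a rough illustration of the kind of tests such a prompt can yield, here is a hand-written Python `unittest` analogue. It is not actual tool output, the prompt above asks for JUnit, and the `add` function is a hypothetical stand-in for the function under test.

```python
import unittest


def add(a, b):
    """Hypothetical function under test: returns the sum of two numbers."""
    return a + b


class AddTests(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)

    def test_mixed_signs(self):
        self.assertEqual(add(-2, 3), 1)

    def test_zero(self):
        self.assertEqual(add(0, 7), 7)

    def test_floats(self):
        # Floating-point sums need a tolerance-based comparison.
        self.assertAlmostEqual(add(0.1, 0.2), 0.3)

    def test_large_integers(self):
        # Python integers do not overflow; a Java int version would
        # need an explicit overflow case here instead.
        self.assertEqual(add(2**62, 2**62), 2**63)
```

Generated tests still deserve a review: check that each case asserts something meaningful and that edge cases specific to your language (overflow, null inputs) are covered.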

How to use AI to write Integration Tests

AI makes testing easier by helping create integration tests, especially when using mocks. Imagine an e-commerce website with various services like user authentication, inventory management, and payment processing. Writing tests for these services manually is tough and time-consuming. But with AI tools, developers can use smart algorithms to understand how the system works, find possible test points, and create test scenarios automatically. Including mocks makes testing even better by simulating how external services behave. This AI-powered approach not only speeds up testing but also ensures thorough and efficient checks for complex services in a dynamic software setup.
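To make the mocking idea concrete, here is a minimal sketch of the sort of integration test an AI assistant might propose for the e-commerce example. The `CheckoutService` class and its `reserve`, `release`, and `charge` collaborator methods are hypothetical names invented for illustration; only `unittest.mock.Mock` is a real library API.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical service that coordinates inventory and payment."""

    def __init__(self, inventory, payments):
        self.inventory = inventory
        self.payments = payments

    def place_order(self, user_id, item_id, price):
        if not self.inventory.reserve(item_id):
            return "out_of_stock"
        if not self.payments.charge(user_id, price):
            self.inventory.release(item_id)  # roll back the reservation
            return "payment_failed"
        return "confirmed"


def make_service(reserve_ok, charge_ok):
    """Build the service with mocked external dependencies."""
    inventory, payments = Mock(), Mock()
    inventory.reserve.return_value = reserve_ok
    payments.charge.return_value = charge_ok
    return CheckoutService(inventory, payments), inventory, payments


def test_successful_order():
    service, inventory, payments = make_service(True, True)
    assert service.place_order("u1", "sku-1", 25.0) == "confirmed"
    payments.charge.assert_called_once_with("u1", 25.0)
    inventory.release.assert_not_called()


def test_payment_failure_releases_stock():
    service, inventory, _ = make_service(True, False)
    assert service.place_order("u1", "sku-1", 25.0) == "payment_failed"
    inventory.release.assert_called_once_with("sku-1")


def test_out_of_stock_skips_payment():
    service, _, payments = make_service(False, True)
    assert service.place_order("u1", "sku-1", 25.0) == "out_of_stock"
    payments.charge.assert_not_called()
```

The mocks stand in for the real inventory and payment services, so the test checks how the services interact without calling anything external.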

Functional Test Case Generation Using AI

Problem Statement

The bottleneck in traditional test case creation arises from its manual and time-intensive nature. Testers often face challenges in keeping pace with rapidly evolving software, leading to limited test coverage and potentially overlooking critical scenarios. The need for AI intervention becomes evident as it offers a solution to overcome these bottlenecks by automating the test case generation process. With AI's ability to adapt to changing software landscapes and generate diverse test cases efficiently, it becomes a crucial ally in addressing the limitations of the traditional approach.

How to Create Test Cases with Generative AI

Creating test cases with Generative AI is like a big change in how we test software. Using smart machine learning tools, Generative AI can make different and detailed test cases automatically. It looks at how the application works, what the user does, and the main features, using all this to make clever test cases that go beyond the usual ones we write. The cool thing is, it can adjust to changes in the software, so our tests keep working as things evolve. This helps testers cover more ground, find tricky issues, and make testing smoother, making the software better overall.

Test Data Generation with AI

AI-based test data generation makes things easier by automatically creating diverse and realistic datasets for testing. It's like having a smart assistant that understands your software and generates data that covers a wide range of scenarios. This is much better than the traditional way of manually creating data, which takes a lot of time, can have errors, and may not cover all possible situations. With AI, testing becomes more efficient, accurate, and thorough, solving the problems we face with manual data creation in the world of software testing.

Generate Test Data using GPT

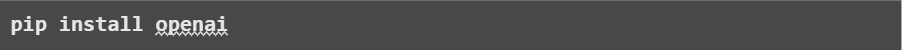

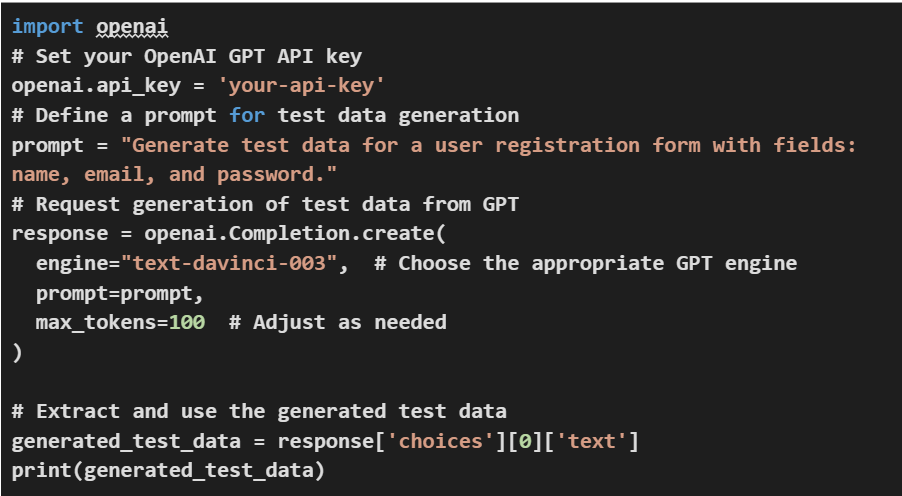

Using GPT (Generative Pre-trained Transformer) programmatically to generate test data at runtime with Python involves several steps. First, you need an OpenAI API key. Once you have the key, you can use the OpenAI Python library to interact with the API. Begin by installing the library with pip install openai.

Then, you can utilise the OpenAI API in your Python script to generate test data dynamically. Below is a simple example (this code is just for reference):
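A minimal sketch of such a script, assuming version 1.x of the `openai` package and its chat completions interface. The model name `gpt-4o-mini`, the `PROMPT` text, and the helper names `make_openai_client` and `generate_test_data` are illustrative assumptions, not part of the original post.

```python
import json

# Illustrative prompt; adjust the fields to match your own test data needs.
PROMPT = "Generate 3 user records as a JSON array with fields name, email, and age."


def make_openai_client():
    """Create a real client; needs `pip install openai` and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported lazily so the rest runs without the package
    return OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable


def generate_test_data(client, prompt, model="gpt-4o-mini"):
    """Ask the model for JSON test data and parse it into Python objects."""
    response = client.chat.completions.create(
        model=model,  # assumed model name; use one your account can access
        messages=[
            {"role": "system", "content": "Reply with valid JSON only."},
            {"role": "user", "content": prompt},
        ],
        temperature=0.7,
    )
    text = response.choices[0].message.content
    return json.loads(text)  # raises ValueError if the reply is not valid JSON
```

A call would look like `users = generate_test_data(make_openai_client(), PROMPT)`. Passing the client in as a parameter keeps the API key out of the function and lets you swap in a stub client when testing the test-data code itself.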

This script sends a prompt to the GPT engine and retrieves the generated text as test data. Adjust the prompt and parameters according to your specific requirements. Make sure to handle the API key securely, and be aware of any usage limitations imposed by the OpenAI platform.

AI-Powered Test Automation Tool

Pain Points in Existing Test Automation Tools:

Traditional test automation tools often struggle with the dynamic nature of modern applications. They face challenges in adapting to frequent changes in the user interface, making test scripts brittle and prone to frequent updates. Maintenance becomes a significant pain point as even minor changes in the application can lead to cascading updates in multiple test scripts. Additionally, handling asynchronous operations, dynamic data, and complex workflows proves challenging, leading to incomplete test coverage and unreliable results.

Solution and Impact with AI-Powered Test Automation:

AI-powered test automation tools offer a transformative solution to these challenges. By leveraging machine learning algorithms, these tools can intelligently adapt to changes in the application's UI, making scripts more resilient.

They excel in handling dynamic elements, asynchronous operations, and evolving workflows, reducing script maintenance efforts. With advanced capabilities such as self-healing tests and intelligent element locators, AI-powered tools significantly enhance the robustness and reliability of automated testing. This not only addresses the pain points of traditional tools but also contributes to increased efficiency, faster test execution, and more accurate results, ultimately improving the overall quality of the software.

One prominent AI-powered codeless automation tool is Testim, which positions itself as a fast way to build resilient end-to-end tests in code, codeless, or both.

AI For Self Healing Tests

Challenges Faced

Test automation maintenance often presents challenges in adapting to evolving application changes. When there are updates or modifications in the application interface, elements, or workflows, existing test scripts can break, requiring manual intervention for adjustments. Additionally, maintaining synchronisation between test scripts and application changes becomes cumbersome, leading to time-consuming efforts in script maintenance. The lack of dynamic adaptability and the need for continuous manual updates contribute to increased maintenance overhead, impacting overall efficiency.

Solution Powered with AI

AI introduces a transformative solution to these maintenance challenges through the concept of self-healing tests. By leveraging AI algorithms and machine learning models, test scripts can autonomously adapt to changes in the application. For instance, an AI-powered testing framework can analyse application updates, identify modifications impacting test scripts, and automatically adjust script elements accordingly. This proactive approach ensures that the test scripts evolve in sync with the application changes, reducing manual intervention and significantly enhancing the resilience of the test suite. The result is a more robust and sustainable test automation framework that can withstand application changes with minimal human intervention.

Illustration Through Scenarios

One widely used open-source self-healing solution is Healenium. It employs machine learning (ML) algorithms to bring self-healing capabilities to test automation. When a test script encounters a failure due to changes in the application, Healenium's ML algorithms analyse the failure patterns and dynamically update the script to adapt to the modifications. Healenium goes beyond mere adaptation to UI changes; it also handles NoSuchElement test failures dynamically.

When a test encounters a failed control due to an element not being found, Healenium steps in and replaces the failed control with a new value that aligns with the best matching element, ensuring a seamless continuation of the test. This intelligent response not only resolves the test failure but also allows the script to perform the intended action successfully with the replaced control. By proactively addressing NoSuchElement errors, Healenium contributes to more resilient and self-healing test automation, providing an efficient solution for common challenges in maintaining test scripts. One point to note: Healenium works only with Java + Selenium-based test automation frameworks.
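The "best matching element" idea can be sketched in a few lines. This is a simplified illustration of the general technique, not Healenium's actual algorithm: it compares a stored snapshot of the element's attributes with candidates found on the changed page and picks the closest one. The attribute names, the plain string-similarity scoring, and the `0.6` threshold are all assumptions made for the example.

```python
from difflib import SequenceMatcher


def similarity(stored, candidate):
    """Average string similarity over the attributes recorded for the element."""
    scores = [
        SequenceMatcher(None, value, candidate.get(key, "")).ratio()
        for key, value in stored.items()
    ]
    return sum(scores) / len(scores)


def heal_locator(stored, candidates, threshold=0.6):
    """Return the candidate most similar to the stored snapshot, or None."""
    best = max(candidates, key=lambda c: similarity(stored, c), default=None)
    if best is None or similarity(stored, best) < threshold:
        return None  # nothing close enough; let the test fail normally
    return best


# Snapshot taken when the locator last worked (hypothetical values).
stored_element = {"id": "checkout-btn", "tag": "button", "text": "Checkout"}

# Elements found on the page after a UI change renamed the id.
page_elements = [
    {"id": "cancel-btn", "tag": "button", "text": "Cancel"},
    {"id": "checkout-button", "tag": "button", "text": "Checkout"},
    {"id": "search", "tag": "input", "text": ""},
]

healed = heal_locator(stored_element, page_elements)
```

The threshold matters: without it, a healer would happily click the wrong element when the real one has been removed, turning a genuine failure into a false pass.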

Effective Debugging in Automation Using LLM Models

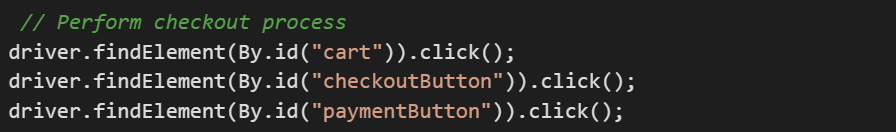

Imagine you encounter a scenario where a Selenium test case fails, and you're having difficulty identifying the root cause. The test involves navigating through an e-commerce application, adding items to the cart, and completing the checkout process. The failure occurs during the checkout, but the specific cause is not evident from the error message.

Using LLM Models for Analysis

In this situation, you can leverage Large Language Models (LLMs) to analyse the error message and gain insights into potential issues. You decide to use GPT (Generative Pre-trained Transformer) to assist in understanding the error and refining your test script.

Example:

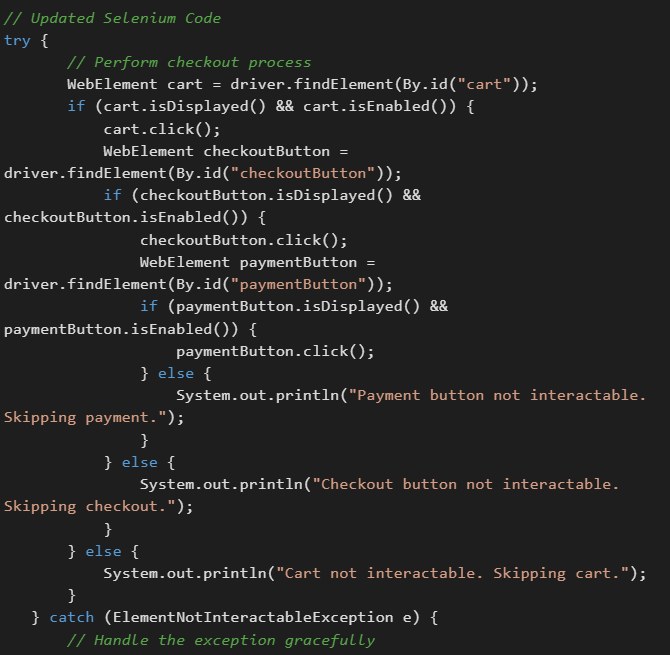

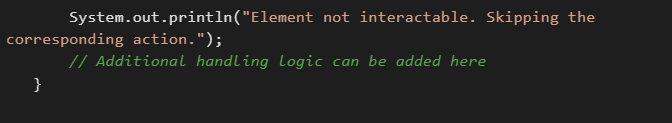

Original Selenium Code:

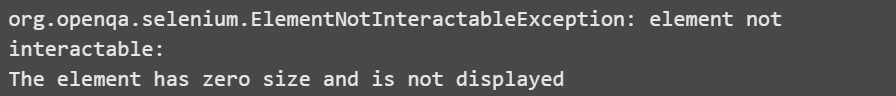

Error Message:

Using LLM for Analysis:

Prompt: "Analyze the error message 'ElementNotInteractableException: element not interactable' in Selenium. Suggest possible reasons for this exception and provide code adjustments to handle the scenario effectively."

Generated Response:

Explanation:

The generated code includes additional checks to ensure the elements are interactable before performing actions. If an element is not interactable, the script gracefully handles the situation, printing a message and skipping the corresponding action. This approach allows for more effective debugging and prevents the test from failing abruptly, providing better insights into the issues encountered during automation.
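The pattern described above can be sketched as a small helper. This is an illustration written for this post, not the actual generated response; `click_if_interactable` is a hypothetical name, and it relies only on the `is_displayed()`, `is_enabled()`, and `click()` methods that Selenium's Python `WebElement` provides.

```python
def click_if_interactable(element, name):
    """Click only when the element is visible and enabled; otherwise report and skip."""
    if not element.is_displayed():
        print(f"Skipping '{name}': element is not displayed")
        return False
    if not element.is_enabled():
        print(f"Skipping '{name}': element is disabled")
        return False
    element.click()
    return True
```

In a real Selenium suite you would usually combine this with an explicit wait, such as `WebDriverWait` with `expected_conditions.element_to_be_clickable`, so that elements which are merely slow to render are not skipped. Skipping also hides failures, so treat the printed message as a debugging aid and keep a final assertion on the checkout outcome.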

Key Precautions When Using AI

While there are numerous benefits to using AI, it's essential to remember that testing has a huge impact on both user experience and data. Therefore, we must be mindful and take extra precautions when employing AI in testing activities.

Data Privacy and Security:

- Precaution: Ensure that sensitive and confidential data used during testing is adequately protected, and compliance with data privacy regulations is maintained.

- Example: If your testing involves real customer data, implement data anonymization techniques to replace sensitive information with dummy data. This minimises the risk of exposing personal details during testing.
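One simple way to apply this precaution is to replace personal fields before the data reaches any test or AI tool. The sketch below is illustrative only: the field names, the salt value, and the helper names are assumptions, and a real project should follow its own compliance requirements.

```python
import hashlib


def pseudonym(value, salt):
    """Derive a stable, hard-to-reverse token from a sensitive value."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def anonymize_customer(record, salt="test-env-salt"):
    """Return a copy of the record with personal fields replaced by dummy data."""
    token = pseudonym(record["email"], salt)
    masked = dict(record)  # copy so the original record is left untouched
    masked["name"] = f"user_{token}"
    masked["email"] = f"{token}@example.com"
    masked["phone"] = "000-000-0000"
    return masked


customer = {
    "name": "Jane Doe",
    "email": "jane.doe@realmail.test",
    "phone": "555-123-4567",
    "order_total": 149.99,
}
safe_customer = anonymize_customer(customer)
```

Because the token is derived from the email, the same customer always maps to the same dummy identity, which keeps relationships between records intact across test runs.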

Scalability and Resource Management:

- Precaution: Assess the scalability of AI-powered testing tools to handle increasing workloads, and manage resources efficiently to prevent bottlenecks or system failures.

- Example: If employing AI for load testing, simulate scenarios with increasing numbers of virtual users to ensure the tool can handle the expected load. Implement resource scaling strategies to accommodate variations in workload.
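The ramp-up idea can be sketched with the standard library alone. Here `fake_request` is a stand-in for a real call to the system under test, and the step sizes are arbitrary assumptions; a dedicated load testing tool would replace this in practice.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(user_id):
    """Stand-in for a real HTTP call to the system under test."""
    time.sleep(0.01)  # simulate network and processing time
    return 200


def run_step(request_fn, virtual_users):
    """Run one request per virtual user concurrently and summarise the step."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        statuses = list(pool.map(request_fn, range(virtual_users)))
    elapsed = time.perf_counter() - start
    errors = sum(1 for status in statuses if status != 200)
    return {"users": virtual_users, "errors": errors, "seconds": round(elapsed, 3)}


def ramp_up(request_fn, steps=(5, 10, 20)):
    """Increase the number of virtual users step by step."""
    return [run_step(request_fn, users) for users in steps]


results = ramp_up(fake_request)
```

Watching how the error count and elapsed time change from step to step shows where the system, or the testing tool itself, starts to struggle.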

Bias in AI Models:

- Precaution: Be vigilant about bias in AI models, especially when using machine learning algorithms, and strive for fairness and inclusivity in testing scenarios.

- Example: In a hiring application testing scenario, verify that the AI-driven resume screening tool does not exhibit biases towards specific demographics. Regularly review and adjust the algorithm to reduce bias.
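A basic fairness check for the screening example is to compare selection rates across groups. The sketch below uses made-up decisions and hypothetical group labels; the ratio it computes is one common screening metric among many, and a low value is a prompt for investigation rather than proof of bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> selection rate per group."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up screening outcomes: 4 of 10 selected in group A, 2 of 10 in group B.
decisions = (
    [("A", True)] * 4 + [("A", False)] * 6
    + [("B", True)] * 2 + [("B", False)] * 8
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
```

A commonly cited rule of thumb flags ratios below 0.8 for closer review, so a test could assert on that threshold and fail the build when a model update pushes the ratio down.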

Conclusion

In the tech world, AI empowers innovation and quality through end-to-end testing. This blog demystified key AI terms and highlighted their role in QA. AI doesn't replace QA jobs but enhances testing precision, helping teams deliver more reliable software. Tools like GitHub Copilot streamline unit and integration testing. AI's impact extends to test automation, with self-healing tests and adaptability. In debugging, Large Language Models like GPT provide valuable insights, fostering resilience in testing.

In conclusion, the synergy between AI and testing is a transformative force, elevating software quality standards. The future promises smarter, more efficient, and more reliable testing practices through seamless integration with AI.

About author:

Sidharth Shukla has 13+ years of experience in the software field, mostly focused on automation, SDET, and DevOps roles. Currently, he is associated with Amazon Canada as an SDET2. Additionally, Sidharth has been awarded the LinkedIn Top Voice badge and featured on a Times Square billboard for his contributions to the QA community regarding career aspects. If you want to know more about Sidharth Shukla, you can check out his LinkedIn and Topmate profiles and explore his Medium articles.