Does your company write an API for its software? If the answer is yes, then you absolutely need to test it — and fortunately for you, this tutorial explains step-by-step how to conduct automated API testing using tools like Postman, Newman, Jenkins and Tricentis qTest.

But first, let’s get the lay of the land. Your software’s API is arguably the most important part of your application to test, because it carries the highest security risk.

For example, the browser or application that houses the client-side software can prevent a lot of bad input, such as 100-character user names or strangely encoded characters, but does your API reject those things too? If someone starts guessing other users’ “unique” tokens, does the API respond with real data? Do its error responses include an Apache error message that reveals the versions of the services running? If your API accepts bad input, returns data for guessed tokens or exposes version details, you have a serious security flaw. And if someone hacks the API, they could steal production data, hold your servers for a Bitcoin ransom or hide on the machine until something interesting happens. The bottom line is that the stakes of an API flaw are much higher than those of a UI bug: your data, and by extension all of your users’ data, could be at risk.

Fortunately, API testing is not only the most vital testing you can do against your application, it is also the easiest and quickest to execute. That means there’s no reason not to have an extensive API test suite (and trust me, having one will help you sleep much better at night). With an API test suite running as part of your continuous integration (CI), you can easily:

- Test all of your endpoints no matter where they are hosted, from AWS Lambda to your local machine.

- Ensure all of your services are running as expected.

- Confirm that all of your endpoints are secured from unauthorized AND unauthenticated users.

- Watch your test executions “magically” populate in your test management tool.

So how do you actually put all of this into action? You’ve come to the right place. Read on for a step-by-step API testing tutorial on how to set up Postman and Newman, how to execute your tests from Jenkins and finally how to integrate all of those test results into a test management tool like qTest Manager.

Let’s get started!

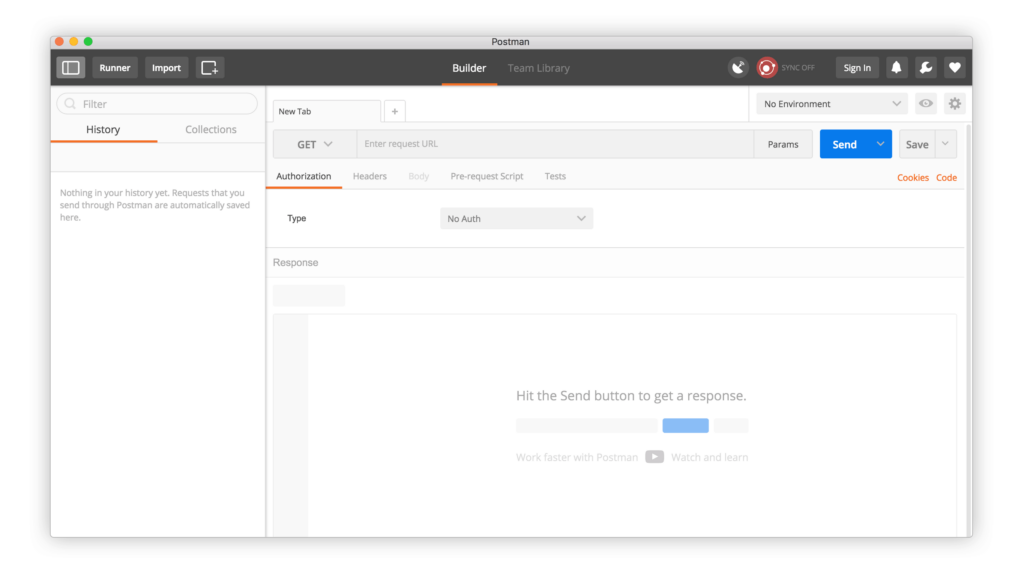

Set up Postman

1) First things first: you need to download Postman. It’s free, it’s fun and it works on Mac, Windows and Linux machines. Note: If you have a larger team and you update your services and tests frequently, you may want to consider Postman Pro (but you can always decide to upgrade later).

2) Make sure you have the API documentation for your application handy. For this demo, I’m going to use the Tricentis qTest Manager API as it’s straightforward and public. If you’d like to try these demos out verbatim, you can get a trial of Tricentis qTest Manager for free here. There are also tons of APIs available online that you can use (I recommend starting with AnyAPI if you’re looking elsewhere).

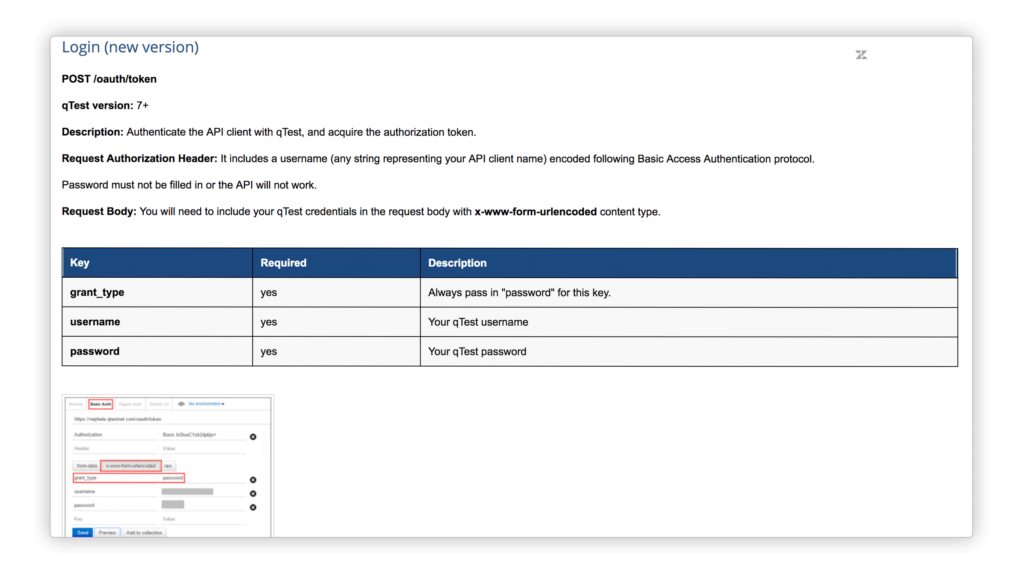

3) Next, pull up the documentation for the login call for the API you’re using (you can find the documentation for Tricentis qTest Manager below). This documentation should include:

- Method (POST/PUT/GET/DELETE/etc)

- URI (/oauth/token, which is appended to the URL of the qTest instance you’re using)

- Headers

- Body

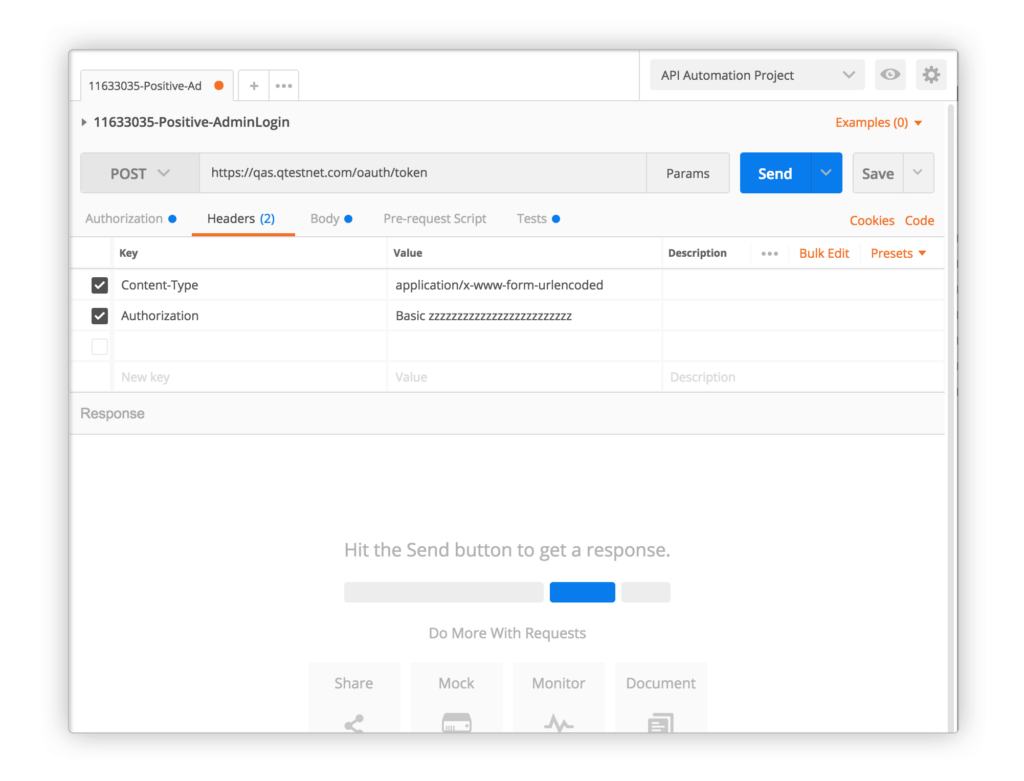

In this example, the login call requires the `Content-Type: application/x-www-form-urlencoded` header. You can simply select this body type in Postman and it will automatically add the appropriate header. Once you select this option, Postman will let you enter name/value pairs for grant_type, username and password. Note: Any time you make a call, make sure the protocol is HTTPS; otherwise, all of the data you pass over the internet is in clear text, and nobody wants that.

4) If you’re using Tricentis qTest Manager, go ahead and structure your tests and write out what it is that you want to test in a test case. When we’re done, we will link the test case to the automated API test by mapping the test case ID. In general, writing out what the test should do first in your test case management tool is a great process for writing automated test cases.

5) Once you structure your tests and write what you want your test cases to do, link that work to your requirements for full traceability and then hook your automated test executions up to that test case. If you’re using a tool like qTest Manager that links to JIRA, you’ll see all your test executions in JIRA for every matching requirement. Cool, eh?

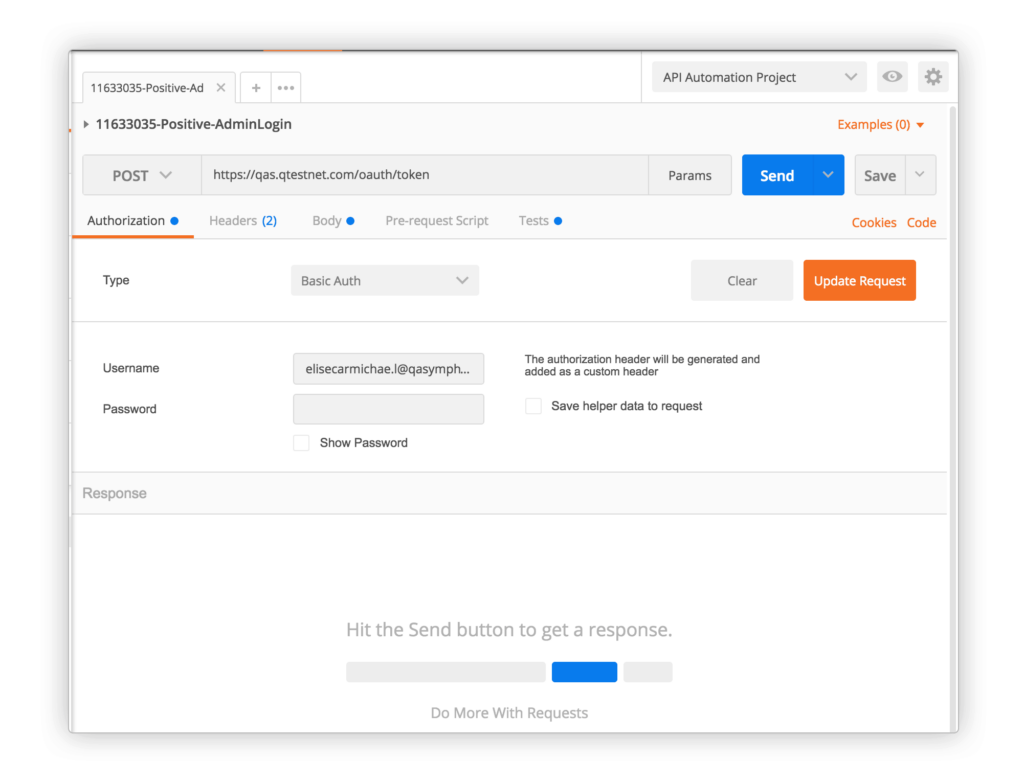

6) Now let’s make our first call to the login endpoint so that we can get a token (we’ll later pass the token to subsequent calls so the API knows that we are logged in). Looking at the login documentation, I see this is a POST request. If it were a GET, you’d be passing your username and password in the URL, where they could end up in browser history and server logs. To make sure everything runs smoothly, use the following settings:

- Method: POST

- URL: https://your.qTestURL/oauth/token

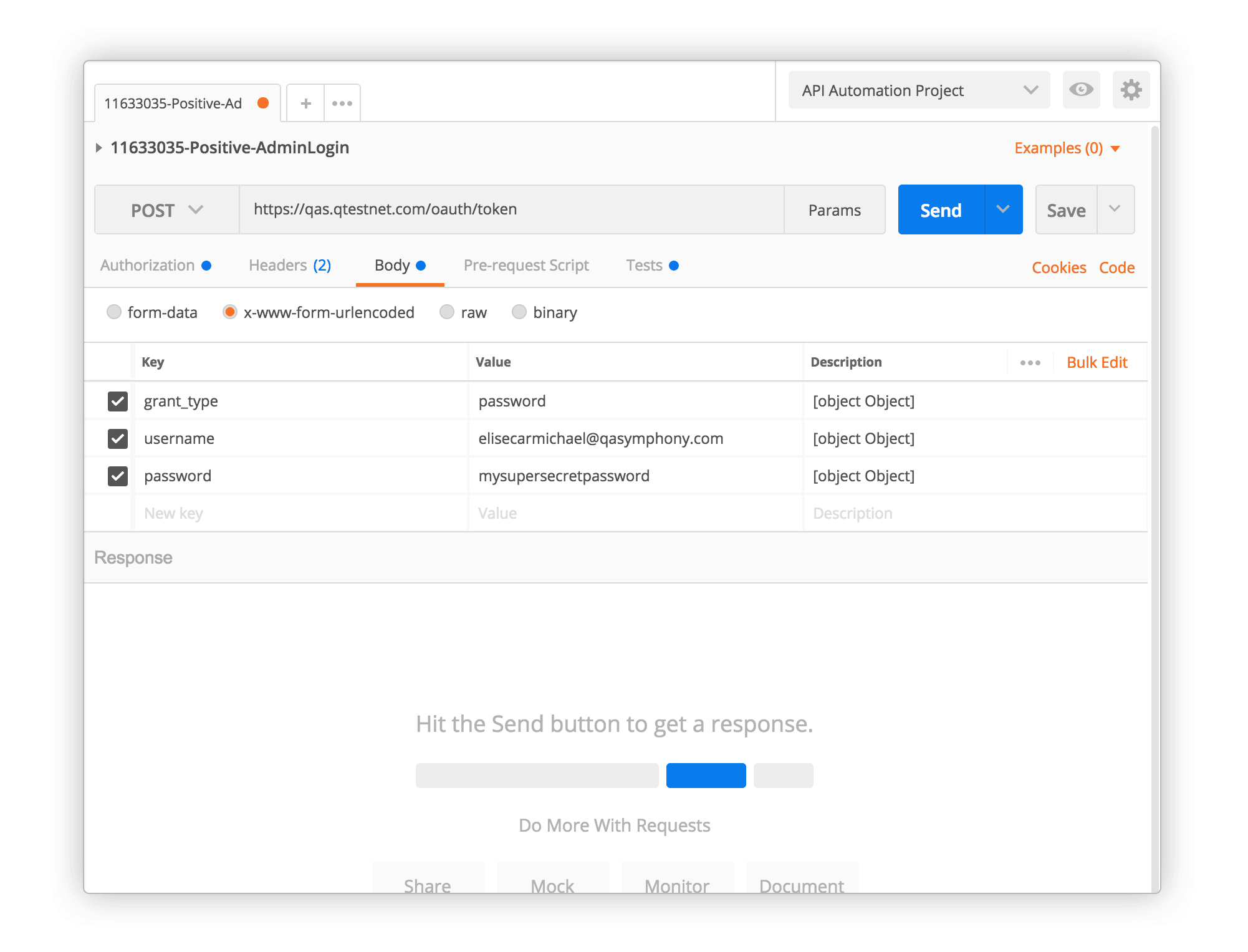

- Click on the Body tab and set the request body to x-www-form-urlencoded (this is one of several standard ways to pass data in the body of an HTTP request). Clicking the radio button simply sets the HTTP header Content-Type to application/x-www-form-urlencoded.

- Now set three name/value pairs in the body:

- grant_type : password

- username : your qTest username

- password : your qTest password

Note the comment in the API documentation stating that the password is NOT filled in within the name/password area of the fields Postman provides.
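If you’re curious what Postman actually sends for this request, here is a minimal Node.js sketch that builds the same login request without sending it. The helper name `buildLoginRequest` and the host `https://example.qtestnet.com` are hypothetical placeholders; `URL` and `URLSearchParams` are standard Node.js globals, and `URLSearchParams` applies the same `application/x-www-form-urlencoded` encoding that the Body tab setting selects.

```javascript
// Hypothetical helper: builds (but does not send) the login request
// described above, so you can inspect exactly what goes over the wire.
function buildLoginRequest(baseUrl, username, password) {
  const url = new URL("/oauth/token", baseUrl);

  // Refuse plain HTTP: the credentials below would travel in clear text.
  if (url.protocol !== "https:") {
    throw new Error(`Refusing to send credentials over ${url.protocol}`);
  }

  // URLSearchParams serializes name/value pairs with the
  // application/x-www-form-urlencoded rules (e.g. "@" becomes "%40").
  const body = new URLSearchParams({
    grant_type: "password",
    username,
    password,
  }).toString();

  return {
    method: "POST",
    url: url.toString(),
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body,
  };
}

const req = buildLoginRequest("https://example.qtestnet.com", "jane@example.com", "s3cret pass");
console.log(req.url); // https://example.qtestnet.com/oauth/token
console.log(req.body); // grant_type=password&username=jane%40example.com&password=s3cret+pass
```

Rejecting anything that isn’t `https:` up front is one simple way to enforce the HTTPS advice from step 3.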

7) Do your settings match? Great! Now you have a working API call. Let’s save it into a Postman Collection so we can reuse it later. To create a new Postman Collection, just click the folder icon with the plus sign in the left panel.

Once you’ve created the collection, you can save your call by clicking the “Save” button on the top right of the screen (your standard OS shortcut works as well). I named my API call with the test case ID from qTest. This will later allow me to map my test case so that I can track every time I run this API call along with the rest of my tests.

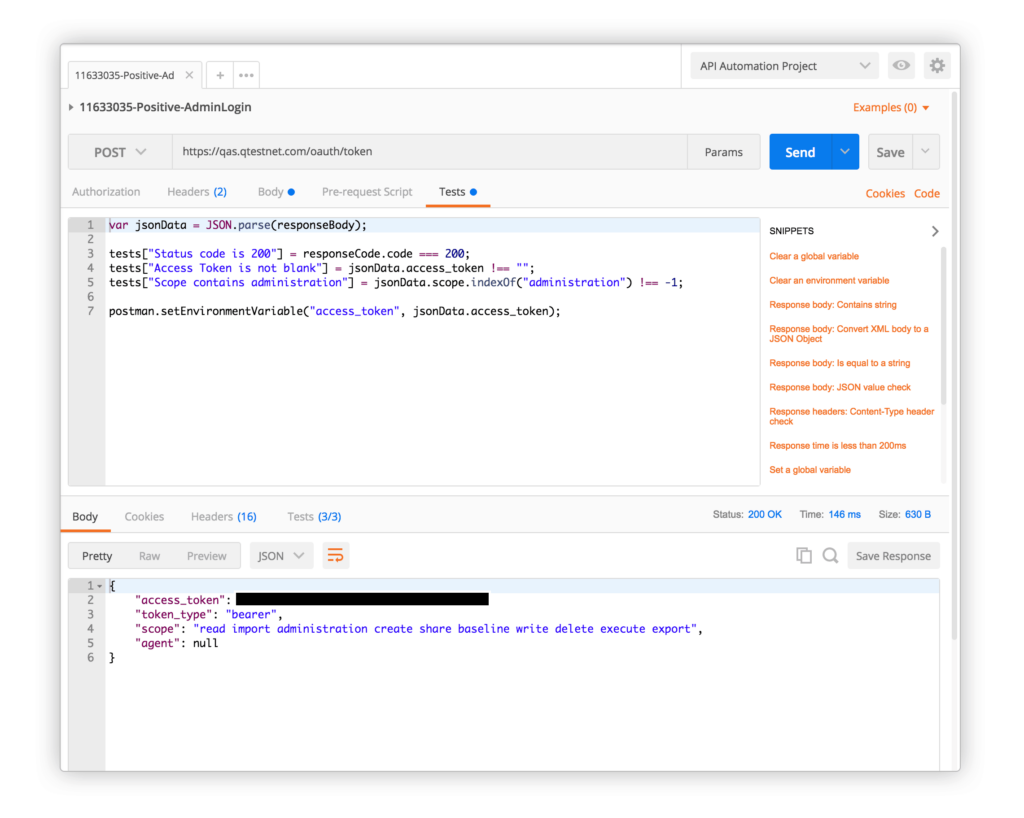

8) There is just one more step before we actually write a test: deciding what to check in the HTTP response. We need to:

- Verify the status code is 200 (OK)

- Verify that you get back a non-empty access token

- Verify that your scope is accurate

Note that we don’t care about the other fields; they don’t matter for verifying that you’re logged in.

9) Now it’s time to write the first tests! Be careful not to make your tests brittle – be smart about what you’re testing and why you’re testing it.

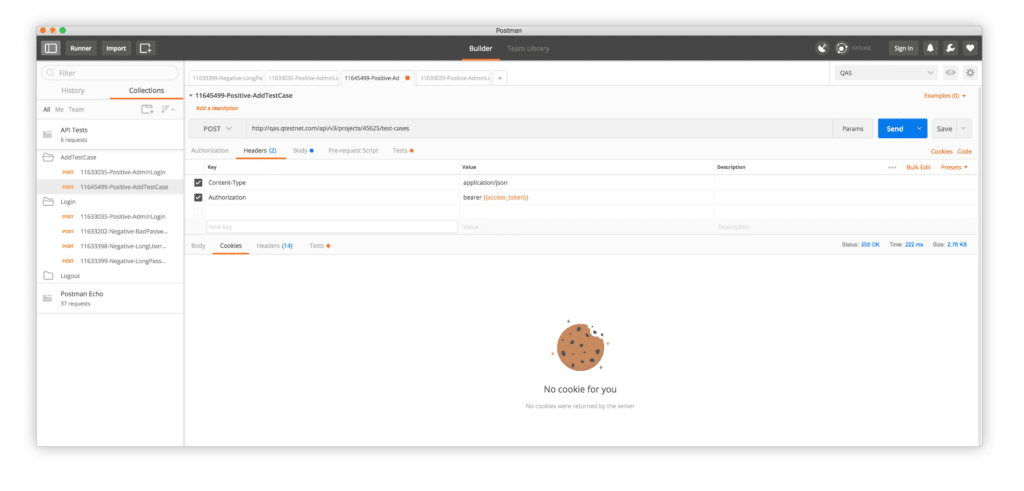
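Here is one way the three checks from step 8 could look in the login request’s Tests tab. It’s a sketch, not the only approach: the checks live in a plain function (`checkLoginResponse`, a name I made up) so the logic can also be exercised outside Postman, and the Postman wiring only runs where the sandbox’s `pm` object exists. The expected scope is read from an environment variable called `expected_scope`, which is an assumption; set it to whatever scope your account should receive. A `pm.test` callback that throws is reported as a failed test.

```javascript
// Hypothetical helper: evaluates the three login checks and returns one
// { name, error } entry per check (error is null when the check passes).
function checkLoginResponse(statusCode, body, expectedScope) {
  const token = body && body.access_token;
  const scope = body && body.scope;
  return [
    {
      name: "Status code is 200",
      error: statusCode === 200 ? null : `expected 200, got ${statusCode}`,
    },
    {
      name: "Access token is non-empty",
      error: typeof token === "string" && token.length > 0 ? null : "access_token is missing or empty",
    },
    {
      name: "Scope is as expected",
      error:
        typeof scope === "string" && scope === expectedScope
          ? null
          : `expected scope "${expectedScope}", got "${scope}"`,
    },
  ];
}

// Postman wiring: `pm` only exists inside Postman's script sandbox.
if (typeof pm !== "undefined") {
  // "expected_scope" is an assumed environment variable you define yourself.
  const checks = checkLoginResponse(
    pm.response.code,
    pm.response.json(),
    pm.environment.get("expected_scope")
  );
  checks.forEach(function (check) {
    // pm.test marks the test as failed if its callback throws.
    pm.test(check.name, function () {
      if (check.error) throw new Error(check.error);
    });
  });
}
```

Checking only these three things keeps the test from breaking when unrelated fields in the response change.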

10) Next up, let’s write another test to add a test case into our existing project. First, we need to log in and store our token. We’ll create an environment variable and call it “access_token”:

The beauty of storing this access token is that you can now use it in your subsequent calls. This means you can automate your tests and you don’t need to manually get your login token every time. Excellent! In the next call, you will see the token used with double curly braces {{access_token}}.

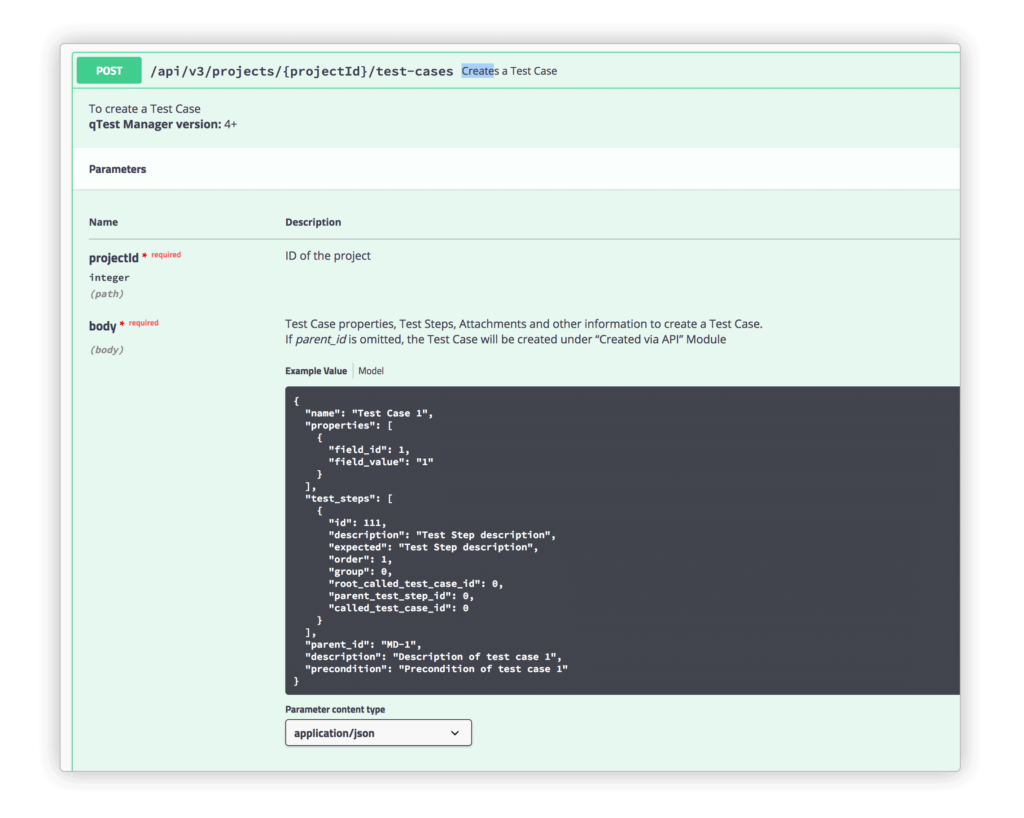
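Storing the token takes one line in the login request’s Tests tab: `pm.environment.set` writes a value into the currently selected environment. The sketch below adds a small guard (the hypothetical `extractAccessToken` helper) so a failed login produces a clear error instead of silently saving an empty token.

```javascript
// Hypothetical helper: returns the token from a parsed login response, or
// throws so a failed login is obvious instead of saving an empty value.
function extractAccessToken(body) {
  if (!body || typeof body.access_token !== "string" || body.access_token === "") {
    throw new Error("Login response did not contain an access_token");
  }
  return body.access_token;
}

// Postman wiring (login request, Tests tab): save the token into the selected
// environment so later requests can use it as {{access_token}}.
if (typeof pm !== "undefined") {
  pm.environment.set("access_token", extractAccessToken(pm.response.json()));
}
```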

11) Let’s take a look at the documentation for adding a test case, which you can find here:

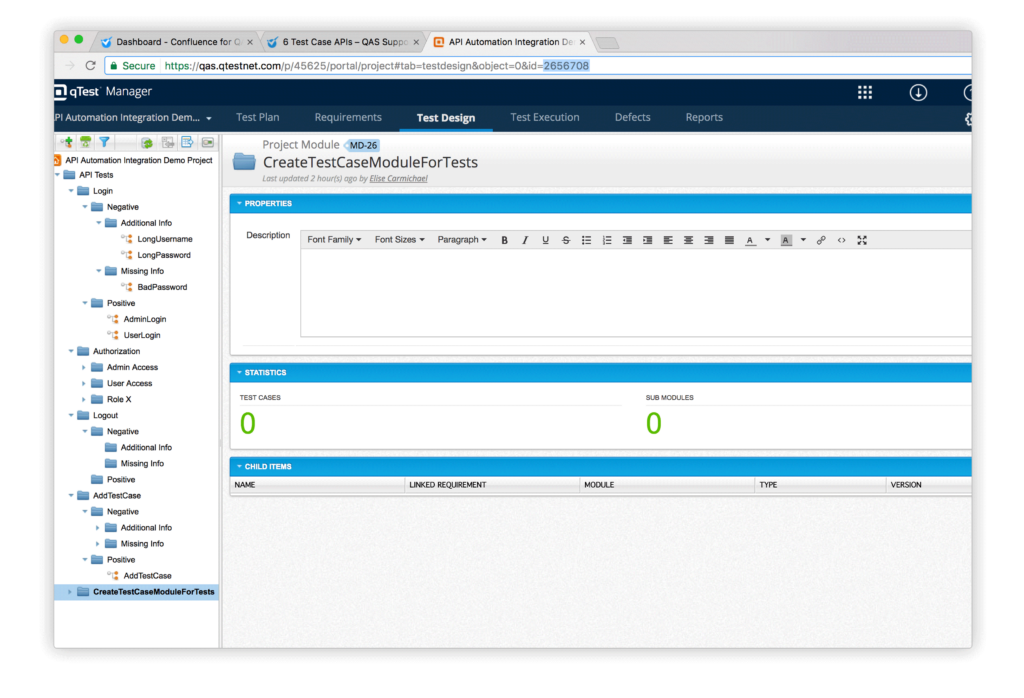

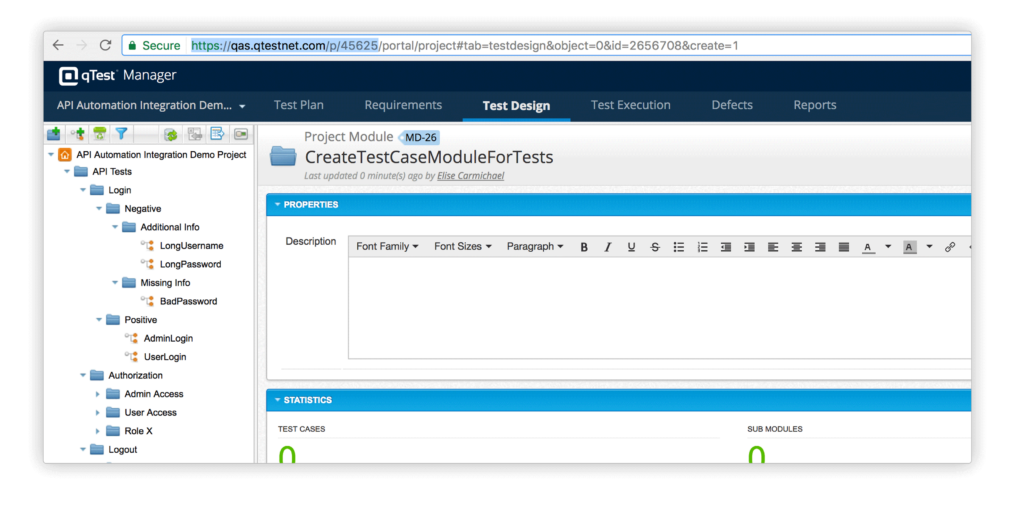

Then go ahead and create a place for your new test case to go:

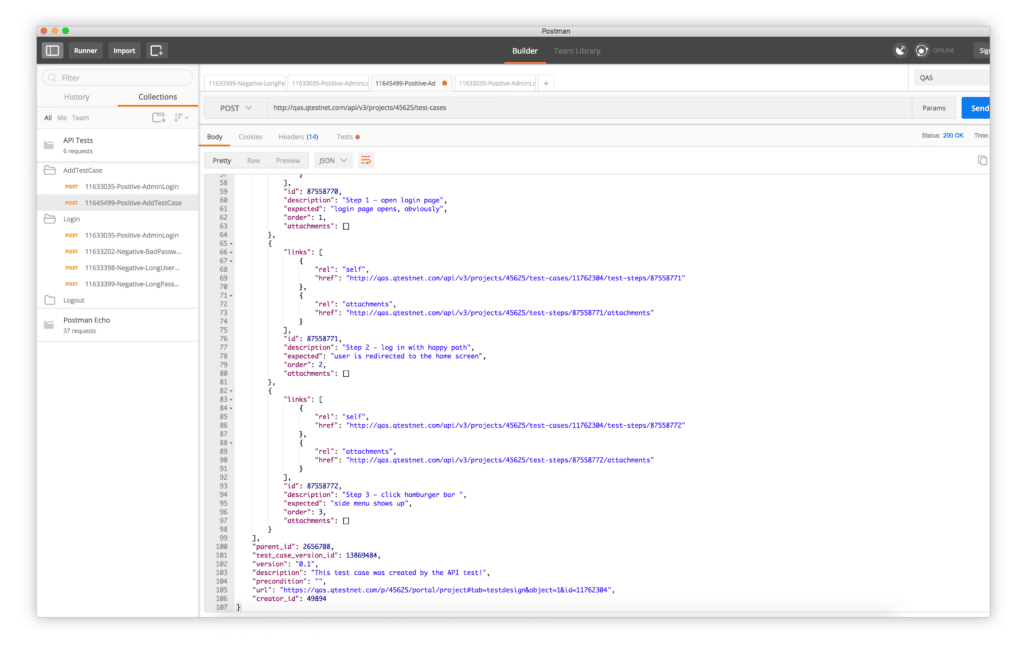

12) Now let’s create the new POST request for adding a test case. There is a variable in the URL (path) called {project}. To fill in this variable, we need to get the ID of the project in qTest, which we can get from the qTest URL. In this case, you can see it’s 45625:

We also need to fill in these fields:

- name: Testing Create Test Case

- description: This test case was created by the API test

- parent_id: 2656708

Note that the parent_id is the ID of the folder/module we just created to hold these tests. You can find the ID in the URL of that module’s page.

13) Next, we have to turn our attention to the two array properties we have. test_steps is a JSON array: a comma-separated list of JSON objects between two square brackets. Each object is a step, and every key and string value inside it must be wrapped in straight double quotes. Be careful not to copy in “pretty quotes” from a Microsoft Word document or any other source that beautifies your text.

In the request body, test_steps looks like this:

```json
"test_steps": [
  {
    "description": "Step 1 – open login page",
    "expected": "login page opens, obviously",
    "attachments": []
  },
  {
    "description": "Step 2 – log in with happy path",
    "expected": "user is redirected to the home screen",
    "attachments": []
  },
  {
    "description": "Step 3 – click hamburger bar",
    "expected": "side menu shows up",
    "attachments": []
  }
]
```
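Typing JSON by hand is where stray “pretty quotes” usually creep in. Here is a minimal Node.js sketch that builds the same body programmatically instead: `JSON.stringify` always emits straight double quotes and valid syntax. The helper names are hypothetical, and the `/api/v3/projects/{project}/test-cases` path is my assumption about qTest’s v3 API; confirm it against the documentation from step 11.

```javascript
// Hypothetical helper: builds the JSON body for the "create test case" call.
// JSON.stringify always produces straight double quotes and valid syntax.
function buildCreateTestCaseBody(name, description, parentId, steps) {
  return JSON.stringify({
    name,
    description,
    parent_id: parentId, // ID of the folder/module that will hold the test case
    test_steps: steps.map(([stepDescription, expected]) => ({
      description: stepDescription,
      expected,
      attachments: [],
    })),
  });
}

// Assumed URL shape for qTest's v3 API; {project} is the project ID.
function createTestCaseUrl(baseUrl, projectId) {
  return new URL(`/api/v3/projects/${projectId}/test-cases`, baseUrl).toString();
}

const body = buildCreateTestCaseBody(
  "Testing Create Test Case",
  "This test case was created by the API test",
  2656708,
  [
    ["Step 1 – open login page", "login page opens, obviously"],
    ["Step 2 – log in with happy path", "user is redirected to the home screen"],
    ["Step 3 – click hamburger bar", "side menu shows up"],
  ]
);

console.log(createTestCaseUrl("https://example.qtestnet.com", 45625));
console.log(body);
```

Keeping steps as simple [description, expected] pairs also makes it easy to generate many test cases from a spreadsheet or CSV later.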

14) The final request’s headers use the token from the first call. As mentioned previously, we can reference a saved variable with the double curly brace notation, in this case {{access_token}}:

Then add the request body:

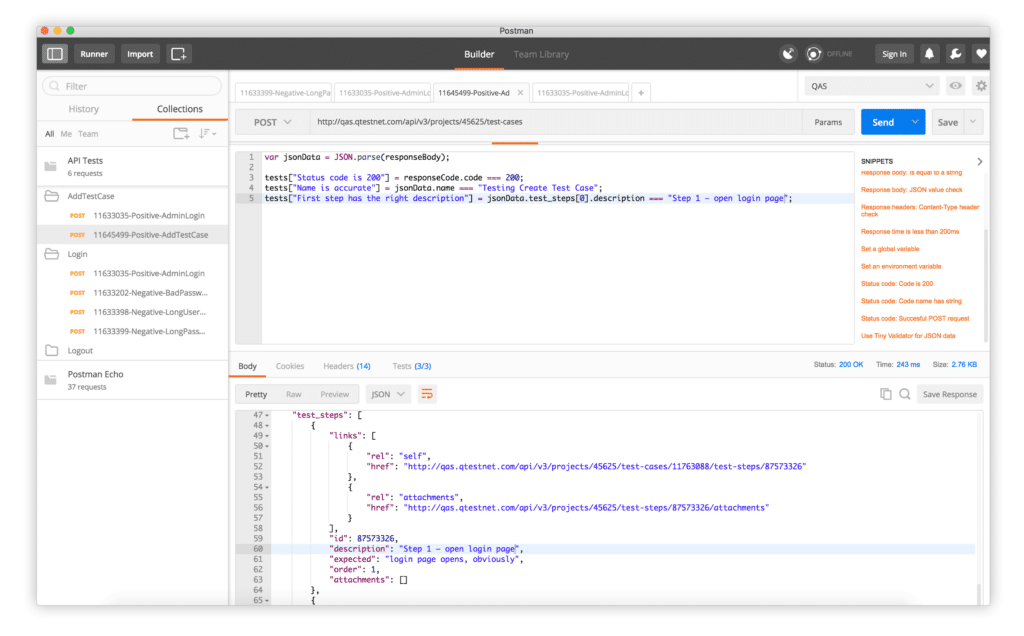
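To make the `{{access_token}}` notation in the headers less magical, here is a simplified model of what happens when the request is sent: every `{{name}}` placeholder is replaced with the matching variable’s value. This is an illustration only, not Postman’s implementation (which also handles globals, collection variables and dynamic variables), and the `Bearer` header value and sample token are assumptions.

```javascript
// Simplified model of Postman's {{variable}} substitution, for illustration
// only. Unknown placeholders are left as-is so they are easy to spot.
function resolveVariables(template, variables) {
  return template.replace(/\{\{([\w.-]+)\}\}/g, (placeholder, name) =>
    Object.prototype.hasOwnProperty.call(variables, name) ? String(variables[name]) : placeholder
  );
}

// Assumed values: in Postman, access_token is set by the login request.
const environment = { access_token: "abc123" };

// Assumed header layout for the create-test-case request
const headers = {
  "Content-Type": "application/json",
  Authorization: "Bearer {{access_token}}",
};

const resolvedHeaders = Object.fromEntries(
  Object.entries(headers).map(([key, value]) => [key, resolveVariables(value, environment)])
);

console.log(resolvedHeaders.Authorization); // Bearer abc123
```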

Finally, add a few tests. To verify the response, go to the Tests tab and make sure you get back the correct data:

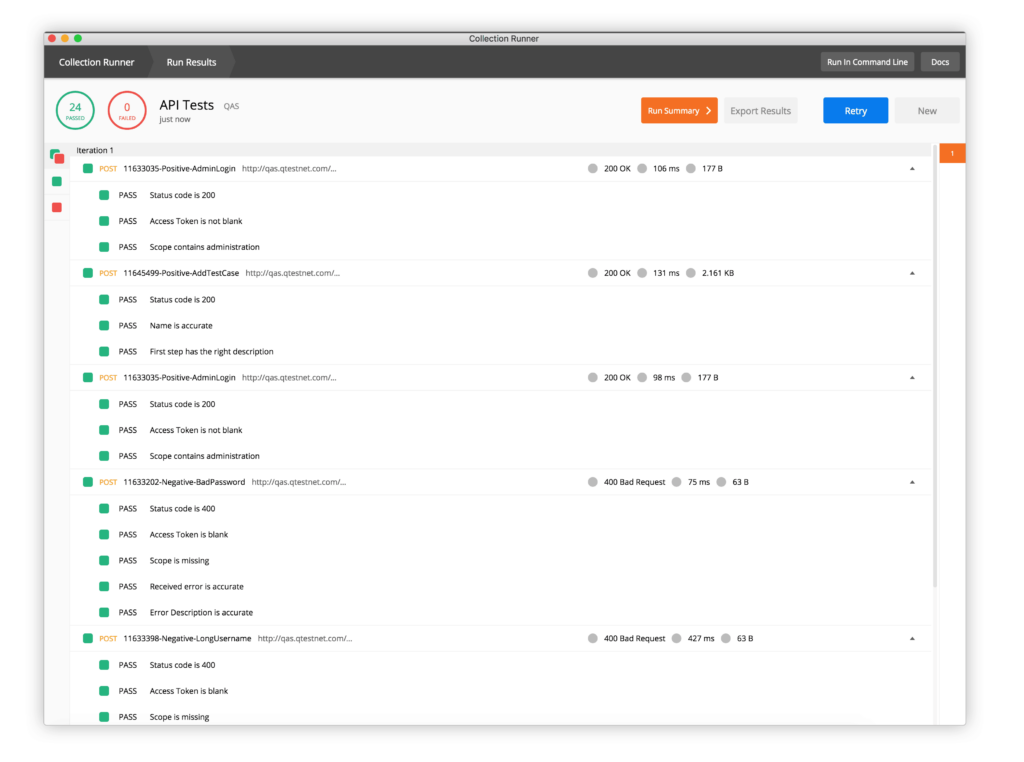
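For the create call, “correct data” can be as simple as checking that the status code signals success and that the response echoes back what we sent. The sketch below assumes the response contains the created test case’s `name` and `test_steps`, and it accepts either 200 or 201 rather than assuming which one the API returns; `checkCreatedTestCase` is a hypothetical helper name.

```javascript
// Hypothetical helper: checks the response to the "create test case" call.
// Assumes the body echoes back the created test case's name and test_steps.
function checkCreatedTestCase(statusCode, received, expectedName, expectedStepCount) {
  const steps = received && received.test_steps;
  return [
    {
      name: "Test case was created",
      // Accept either success code rather than assuming which one is returned
      error: statusCode === 200 || statusCode === 201 ? null : `unexpected status ${statusCode}`,
    },
    {
      name: "Name matches the request",
      error: received && received.name === expectedName ? null : "name in response differs from request",
    },
    {
      name: "All test steps were saved",
      error:
        Array.isArray(steps) && steps.length === expectedStepCount
          ? null
          : "test step count differs from request",
    },
  ];
}

// Postman wiring: only runs inside the Tests tab, where `pm` exists.
if (typeof pm !== "undefined") {
  const checks = checkCreatedTestCase(pm.response.code, pm.response.json(), "Testing Create Test Case", 3);
  checks.forEach(function (check) {
    pm.test(check.name, function () {
      if (check.error) throw new Error(check.error);
    });
  });
}
```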

15) Now you can run the entire collection, or just selected folders, at once using the Collection Runner.

These are mostly happy paths, but there are quite a few things that could go wrong with these calls and dozens or hundreds of tests that you can do, including quite a few security tests. What if the project belongs to another customer? What if the module ID doesn’t exist? What if you upload a file that is massive? Write once, test every time!
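As a taste of those security tests, here is a sketch of a negative test for a request deliberately sent without a token (or with an invalid one). It assumes the API should answer with 401 or 403, and it also checks that the error body doesn’t leak an Apache version banner, one of the warning signs mentioned at the start of this tutorial. `checkRejected` is a hypothetical helper name.

```javascript
// Hypothetical helper: checks a request that was deliberately sent without
// a valid token. Assumes the API should reject it with 401 or 403.
function checkRejected(statusCode, responseText) {
  const problems = [];
  if (statusCode !== 401 && statusCode !== 403) {
    problems.push(`expected 401 or 403, got ${statusCode}`);
  }
  // Error pages should not advertise server software versions
  if (/Apache\/\d/.test(responseText)) {
    problems.push("response reveals the Apache version");
  }
  return problems;
}

// Postman wiring: only runs inside the Tests tab, where `pm` exists.
if (typeof pm !== "undefined") {
  pm.test("Request without a token is rejected without leaking details", function () {
    const problems = checkRejected(pm.response.code, pm.response.text());
    if (problems.length > 0) throw new Error(problems.join("; "));
  });
}
```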

One big item we didn’t touch on was storing variables for different environments. If you want to test on your development, QA, staging or production environments, you probably have different test data or logins for each one. You can set this up and select the environment when running the tests through the GUI (as we have been) or from the command line with Newman. Let’s do that next.

Set up Newman

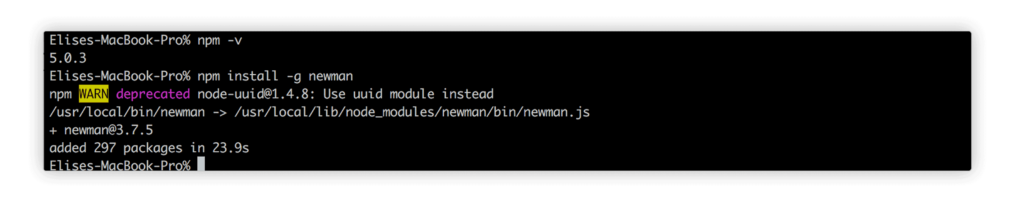

Now that you have a collection that you want to execute, and perhaps a corresponding environment configuration, you’ll want to run it from the command line. You must be able to do this in order to run it from Jenkins or any other continuous integration scheduler. For this, I recommend Newman, a command-line runner for Postman collections that’s written in JavaScript and can be installed with npm, the Node.js package manager. It takes just a few short steps:

1) Open your terminal/command line application of choice: https://www.davidbaumgold.com/tutorials/command-line/

2) Install Node.js, which includes npm: https://www.npmjs.com/get-npm

3) Install Newman globally on your machine with `npm install -g newman`: https://www.npmjs.com/package/newman/tutorial

4) Export your collection from Postman (just right-click the collection you want to export in the left pane) and export your environment (go to “Manage Environments” and hit the download button). Save both files in the directory your terminal is currently in.

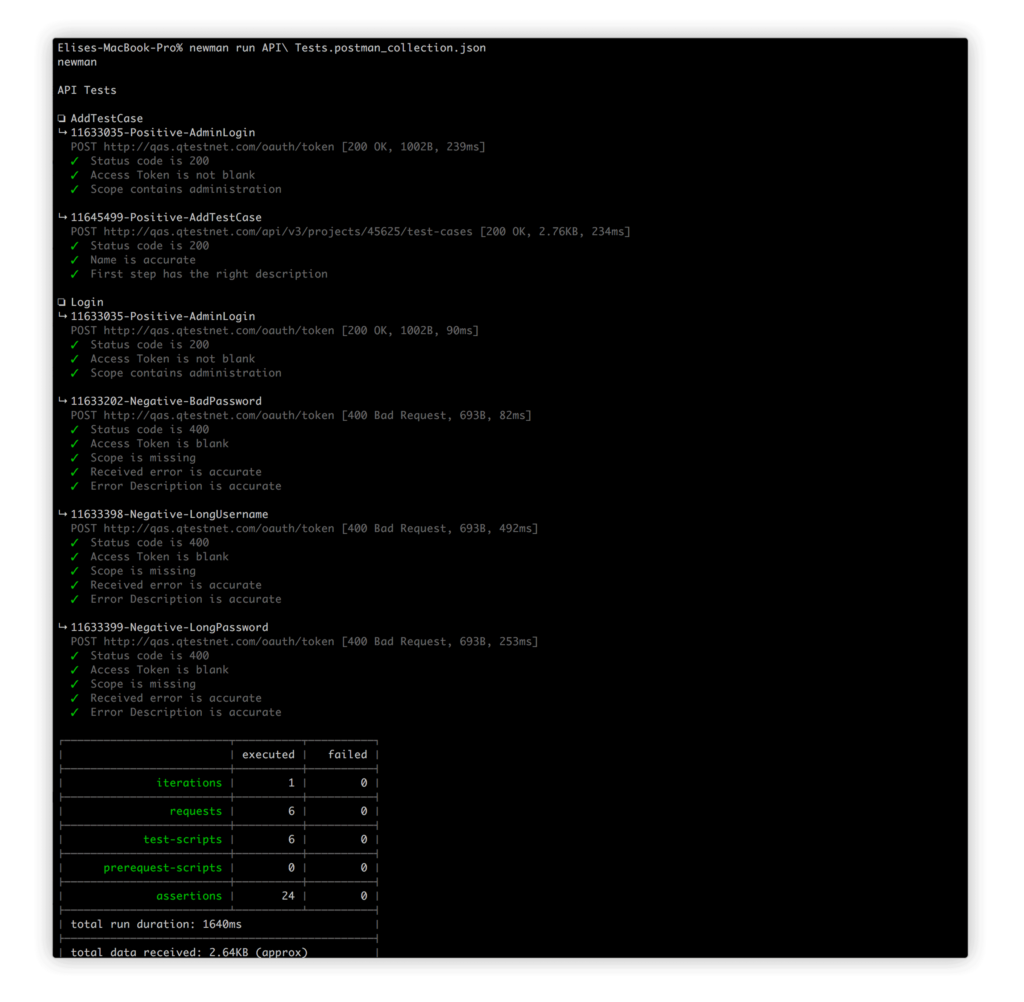

5) Once you’re in your terminal, there’s nothing left to do but run your tests! In this case, you don’t need any options or environment variables, so the command is simply:

`newman run path/to/my/exported/json/postman/collection.json`

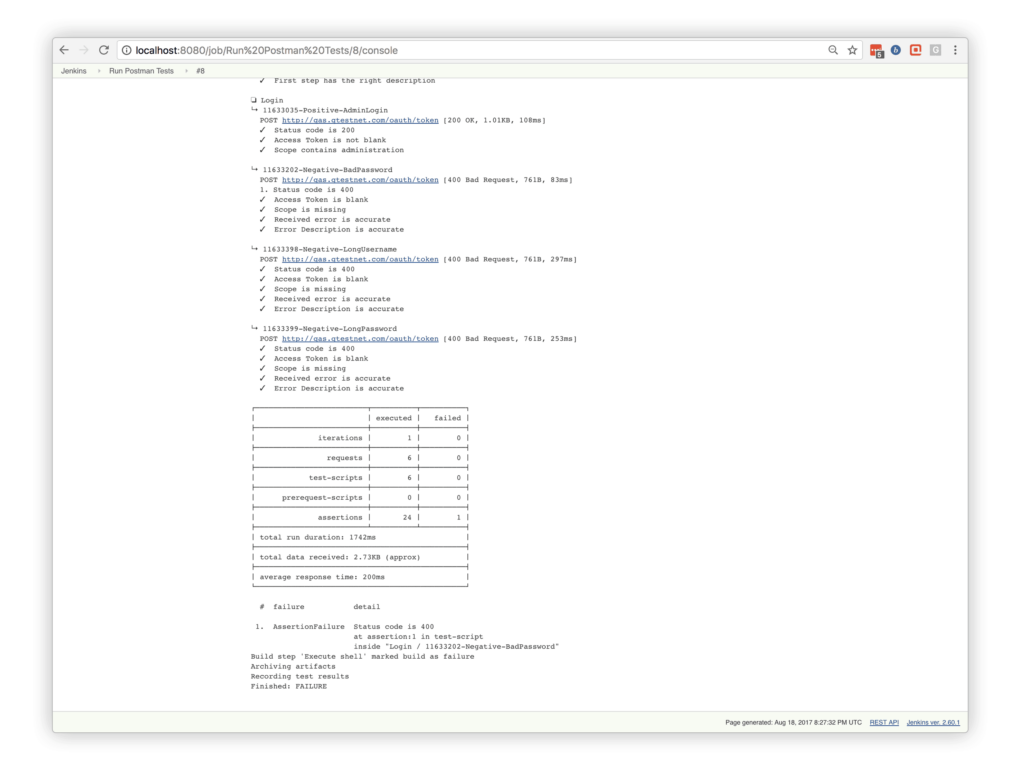

Looks pretty, right? Pretty is great, but not when you’re using Jenkins! Let’s use a more typical JUnit output that Jenkins can understand. Something like:

`newman run --reporters junit,json path/to/my/exported/json/postman/collection.json`

This command actually produces two types of output: a standard, less descriptive JUnit XML report and a highly descriptive JSON report. Take a look at both; they should be created under a folder called “newman” in your working directory (the directory from which you ran the Newman command).
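Newman can also be driven from Node.js through its `newman.run(options, callback)` API, which is handy if you’d rather keep the run configuration in code. A minimal sketch, assuming `npm install newman` has been run in the project; the helper names and the fixed export paths under `newman/` are hypothetical choices made here instead of relying on Newman’s default file names.

```javascript
// Hypothetical helpers: run a collection through Newman's Node.js API,
// roughly equivalent to
//   newman run <collection> -e <environment> --reporters cli,junit,json
function buildNewmanOptions(collectionPath, environmentPath) {
  const options = {
    collection: collectionPath, // a file path (Newman also accepts a parsed object)
    reporters: ["cli", "junit", "json"],
    reporter: {
      // Fixed file names are an assumption made here for easier CI wiring
      junit: { export: "newman/results.xml" },
      json: { export: "newman/results.json" },
    },
  };
  if (environmentPath) {
    options.environment = environmentPath;
  }
  return options;
}

// Resolves with the number of failures so a CI script can decide the exit code.
function runCollection(collectionPath, environmentPath) {
  const newman = require("newman"); // required lazily: only needed when running
  return new Promise((resolve, reject) => {
    newman.run(buildNewmanOptions(collectionPath, environmentPath), (err, summary) => {
      if (err) {
        reject(err);
        return;
      }
      resolve(summary.run.failures.length);
    });
  });
}
```

For example, a small CI script could call `runCollection("collection.json", "environment.json").then((failures) => process.exit(failures > 0 ? 1 : 0))`, so any failing test fails the build.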

Soon we’ll use a script to upload the test results to qTest. Meanwhile, the JUnit output lets Jenkins show its built-in graphs and pass or fail the build without any additional help.

While you can also upload results directly to qTest Manager using the JUnit results and the automation content, using the API provides more flexibility for how and where the test results appear within the tool.

Now that we can run the tests from the command line, it’s time to put them into a Jenkins job so they are included in continuous integration. I recommend running them against your dev environment every time the developers push to the working branch.

Execute your tests from Jenkins

I won’t go into the setup of Jenkins, just the configuration of a job, but here is the download page if you want to try it locally.

If you don’t want to install Jenkins directly on your machine, you can run it with Docker. In that case, you can get started by downloading the official Jenkins Docker image and extending its Dockerfile to install Node.js and Newman, using installation code like the following, adapted from the Docker/Jenkins repository:

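```dockerfile
# Install Node.js and Newman. The official Jenkins image runs as the
# "jenkins" user, so switch to root for the installation and back afterwards.
USER root
# Pick a Node.js version supported by the Newman release you install
RUN curl -fsSL https://deb.nodesource.com/setup_20.x | bash -
RUN apt-get install -y nodejs
RUN node -v && npm -v
RUN npm install -g newman
USER jenkins
```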

From here, you will need to rebuild the Docker image and then start the container with the same instructions as in this GitHub ReadMe.

You should now have a fully working Jenkins instance installed locally. Great! Now back to the task at hand using the newly-installed instance of Jenkins:

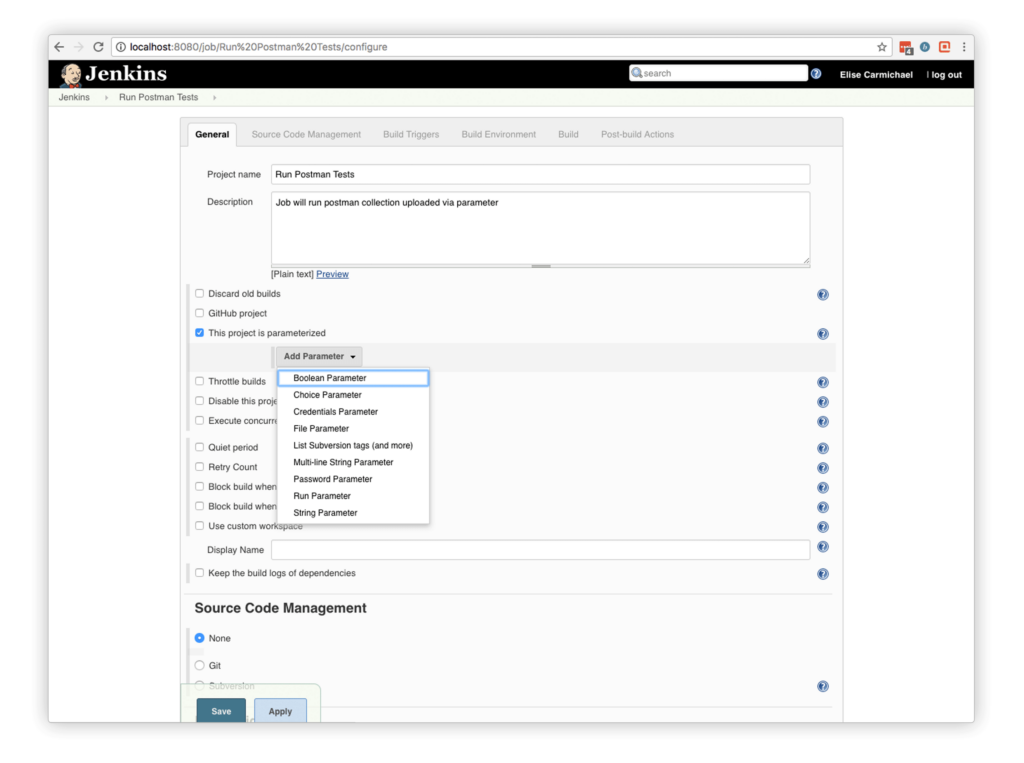

1) Create a new “Freestyle” type job in Jenkins.

In this case, we’ll set up the job so you can upload the collection as a parameter: select “This project is parameterized,” then choose “Add Parameter” and pick “File Parameter.” When you do this with your own projects, you should instead commit the Postman collection to whatever repository you’re using and have the build pull it directly from that repository.

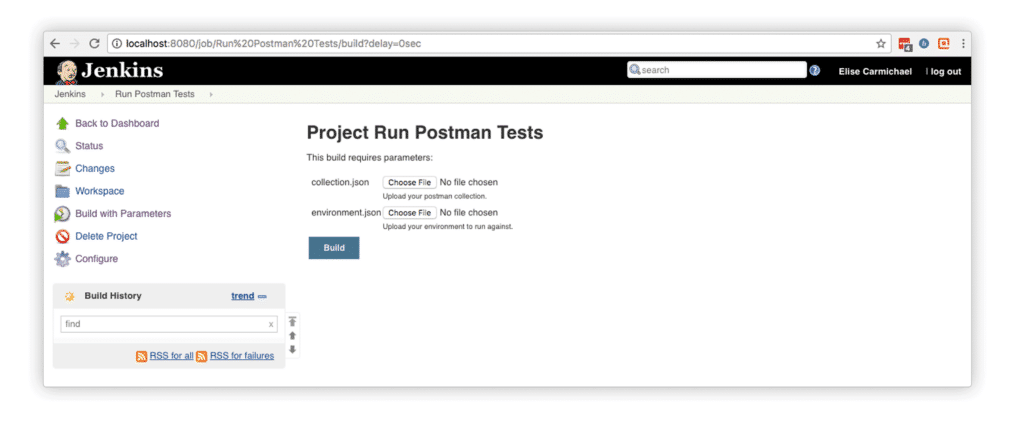

2) Add two File Parameters: one for the collection and one for the environment.

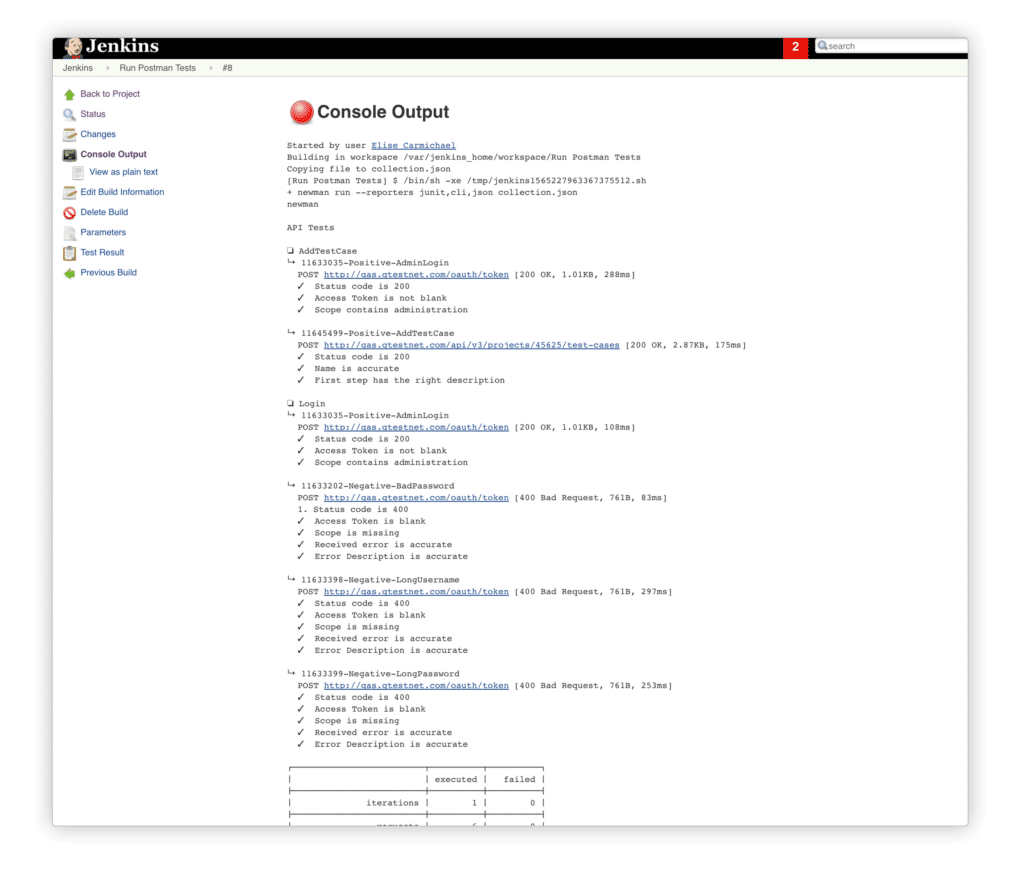

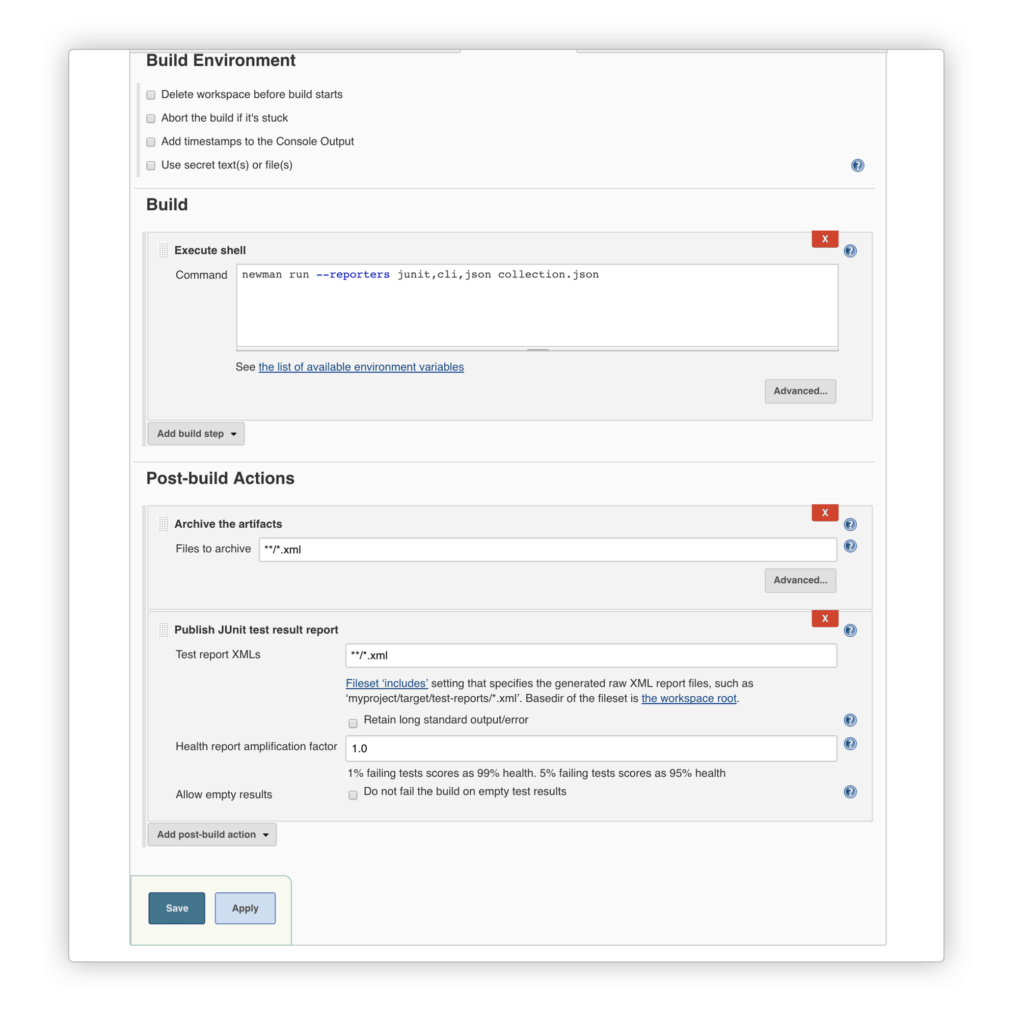

3) Add a build step with “Execute shell” (or “Execute Windows batch command” if your Jenkins is running on Windows). Use the same command you used earlier on your own command line (assuming the same OS), except that the path is now just collection.json, because that is the name you entered in the File Parameter’s name field: `newman run collection.json`

4) Now save the job and run a build. I only uploaded collection.json since I’m not using the environment file yet, but you can add it to the command line with:

`newman run collection.json -e environment.json`

To make sure everything worked as it should, check what the tests did: they should have added some new test cases to your project.

Furthermore, if you want to use Jenkins’ built-in JUnit viewer, you can archive the JUnit XML result file and point Jenkins’ test reporting at it. Here is a sample of how you might archive and use the JUnit test results. If you’re using Tricentis qTest Manager, you can also download the Jenkins qTest Manager plugin here.

Upload results to qTest via the API

At this point, we have successfully written tests that run with our CI job. We could fail the build here if the tests fail (great idea for API tests!), but I think we should also upload the test results to Tricentis qTest to give evidence of these tests passing or failing. To do so, we can use a script that I wrote, which you can find here.

1) This script works from Newman’s JSON report, so make sure you run Newman with the json reporter.

In that folder, you should find your sample Newman test results. If you want to try out this Node script without setting up tests in Postman, you can, but you’ll need to modify the .json test result file so its data matches your own project. In the sample results, you’ll want to change the test case IDs to match test case IDs from your own project.

2) Now we’ll run the script with the following command:

`node uploadNewmanToQTest.js -f newman-json-result.json -c creds.json -i true -r "([0-9]+)-*?"`

The part after the -r option looks a bit scary. It’s a JavaScript regular expression that tells the script where to find the test case ID in each test name (or the test case name, if -i false is passed). This one captures the first run of digits and uses it as the test case ID. If no regular expression is provided, the script uses the entire test case name from the results. For example, if the test case name is “Verify Successful Login” and you pass -i false (matching on test case name instead of ID), the script will look for a test case named “Verify Successful Login.” Of course, if that name appears twice, it will update the associated test runs for both test cases. This script has a lot of options, and not all of them are finished yet. If there is something you’d like to see, don’t hesitate to comment or drop a line to Tricentis.
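To see what that regular expression does, here is an illustrative sketch (not the uploadNewmanToQTest.js script itself) that walks a Newman JSON report and pairs each request with a test case ID and a pass/fail status. It assumes the report lists one entry per request under `run.executions`, with the request name at `item.name` and failed assertions carrying an `error` field; check that against your own report file. The function and the sample data are hypothetical.

```javascript
// Illustrative sketch, not the uploadNewmanToQTest.js script: pair each
// request in a Newman JSON report with a test case ID and a status.
// Assumed report shape: run.executions[] with item.name and assertions[].error.
function extractResults(report, idPattern) {
  const regex = new RegExp(idPattern);
  return report.run.executions.map((execution) => {
    const name = execution.item.name;
    const match = regex.exec(name);
    const failed = (execution.assertions || []).some((a) => Boolean(a.error));
    return {
      name,
      // First capture group = the run of digits matched by ([0-9]+)
      testCaseId: match ? match[1] : null,
      status: failed ? "FAILED" : "PASSED",
    };
  });
}

// Hand-written sample in the assumed shape (IDs are made up)
const sampleReport = {
  run: {
    executions: [
      { item: { name: "12345 Login" }, assertions: [{ assertion: "Status code is 200" }] },
      {
        item: { name: "12346-Create test case" },
        assertions: [{ assertion: "Name matches the request", error: { message: "name differs" } }],
      },
    ],
  },
};

console.log(extractResults(sampleReport, "([0-9]+)-*?"));
```

Note that this pattern matches the first run of digits anywhere in the name, so a request named “Login v2 12345” would yield 2 rather than 12345; putting the ID at the start of the name, as in step 7, avoids that.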

And that’s it: you should see a successful output!

Of course, this is just one of many ways to approach API testing. You can also check out this Postman tutorial and this Postman & Jenkins introduction for even more great information.

If you have any specific requests, please comment below and I will do my best to respond. Otherwise, happy testing!