Objective:

The goal of this activity is to help you recognize the characteristics of a well-structured prompt and to practice refining prompts so that they are clear, specific, and actionable for testing tasks.

Steps:

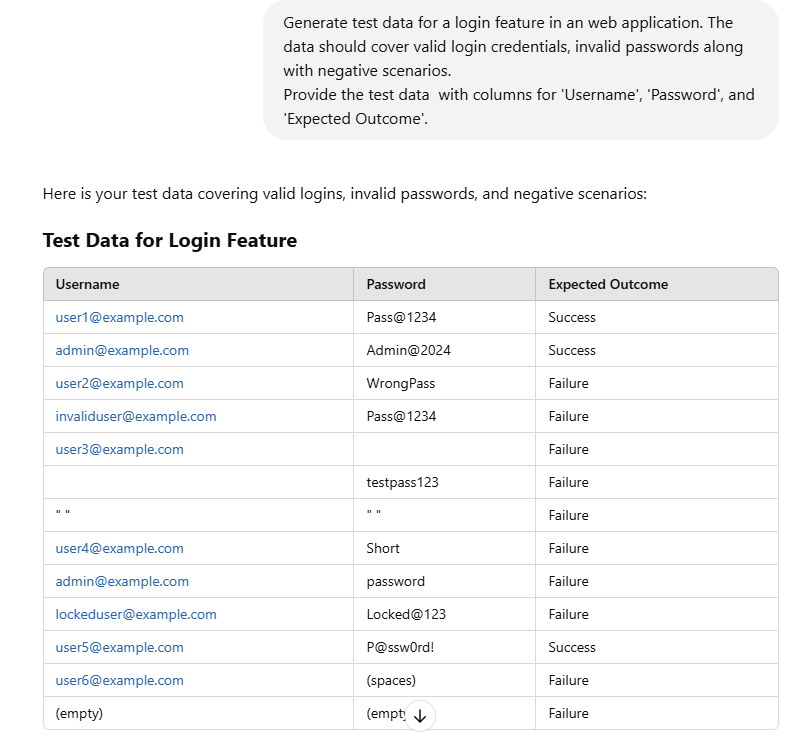

- Review the Given Prompt: Here’s a poor prompt for a testing task:

"Give me some test data for this app."

- Identify Issues: Consider why this prompt might not yield useful results. Think about missing details such as the type of app, the testing scope, the tests expected, how the output will be used, the output format, and any specific testing techniques to apply.

- Improve the Prompt: Rewrite the prompt to make it more precise and useful.

- Share and Discuss: Share your refined prompt with others and discuss how different refinements impacted AI responses.
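
One way to approach the "Improve the Prompt" step is to treat each missing detail from "Identify Issues" as a field that must be filled in before the prompt is sent. The Python sketch below is only an illustration of that idea: the `PromptSpec` class, its field names, and all example values (the flight booking app, the form fields, the CSV columns) are assumptions made up for this example, not part of the activity.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Details the vague prompt leaves out (field names are illustrative)."""
    app_type: str
    testing_scope: str
    technique: str
    output_format: str
    intended_use: str

    def render(self) -> str:
        # Assemble one explicit request from the individual details.
        return (
            f"Generate test data for {self.app_type}. "
            f"Scope: {self.testing_scope}. "
            f"Apply {self.technique}. "
            f"Return the data as {self.output_format}. "
            f"The data will be used for {self.intended_use}."
        )


# Hypothetical example values, not taken from the activity.
refined = PromptSpec(
    app_type="a web-based flight booking application",
    testing_scope="the passenger details form (name, date of birth, passport number)",
    technique="boundary value analysis and equivalence partitioning",
    output_format="a CSV table with columns: field, value, valid/invalid, reason",
    intended_use="manual functional testing",
)
print(refined.render())
```

Because every field is required, leaving one out raises an error when the object is created, which mirrors the checklist in "Identify Issues". Your own refined prompt may need different or additional details.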