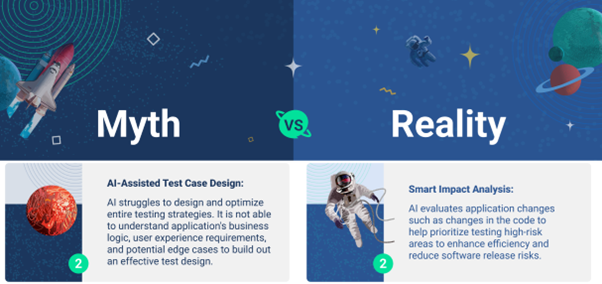

🔍 Let's dive into Day 2 of our AI Testing Myths vs. Realities Challenge!

Time for a deeper discussion! Share your thoughts on how well AI can optimize testing strategies.

Join the conversation: tell us which one you think is the reality and which is the myth, and explain your reasoning in the comments below for a chance to win a ShiftSync gift box!

Click here to check the rest of the questions.