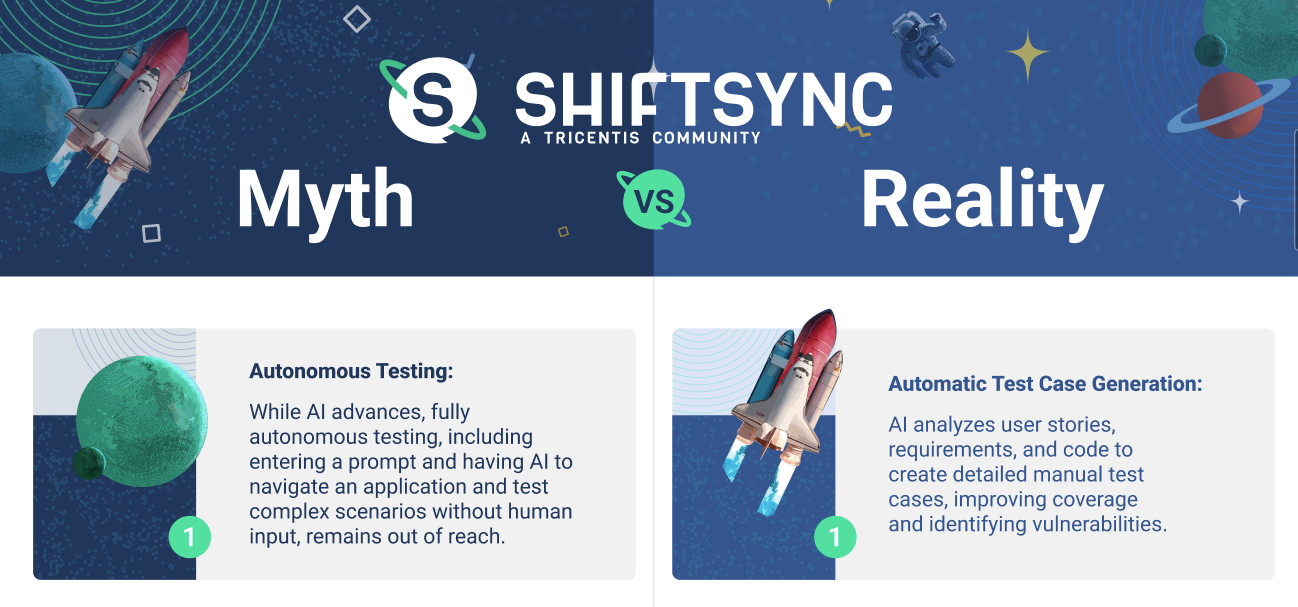

Day 1 of our AI Testing Myths vs. Realities Challenge kicks off today!

The challenge is to decide which option is a myth and which is a reality, then explain your reasoning.

We’re starting by exploring the capabilities of AI in testing.

Join the conversation in the comments below: tell us which option you think is the reality and which is the myth, and share your insights for a chance to win a ShiftSync gift box!

Click here to check the rest of the questions.