We're thrilled to announce our first blogathon, and we warmly invite you to participate and showcase your exceptional technical and writing skills!

This is not your average competition, folks. It's a chance to connect with like-minded individuals, flaunt your expertise, give your article the spotlight it deserves, and who knows, you might just snag some prizes along the way!

To encourage more people to write blogs, ShiftSync is launching a one-month Blogathon contest from July 19, 2023, to August 18, 2023.

The top 3 winners will receive monetary prizes, badges, and certificates of recognition.

- 🥇 First prize – 300 USD + Swag
- 🥈 Second prize – 150 USD + Swag
- 🥉 Third prize – 50 USD + a book by an industry expert

In addition, other outstanding entries will receive certificates of participation and ShiftSync badges (based on your points).

If you have any concerns or need assistance, feel free to reach out to shiftsync@tricentis.com or message

Keep an eye on these key dates:

- Last date for blog submission – July 30, 2023
- ShiftSync assessment – August 16, 2023
- Winner announcement – August 18, 2023

After submitting your blog, you can vote for your fellow users’ blogs. The most popular blogs, measured by likes and comments, will be shortlisted. Public voting on each blog is open for 14 days (2 weeks) from its submission date. For example, if you submit your blog on July 19, votes will be counted for 14 days, from July 19 through August 1. If you submit your blog on July 30, you still get 14 days of voting, so votes will be counted from July 30 through August 12.

Please make sure that you submit your blog on or before July 30, 2023. Blogs submitted after July 30 won’t be accepted.

ShiftSync will finalize the top 3 winners based on public voting.

This is an opportunity for all of you to create blogs in the new community and win prizes. We encourage you to create meaningful, engaging, and concise content. Get ready to awaken your creative muse, and seize the chance to educate your fellow users and encourage them to take part too.

How can you take part in this Blogathon?

-

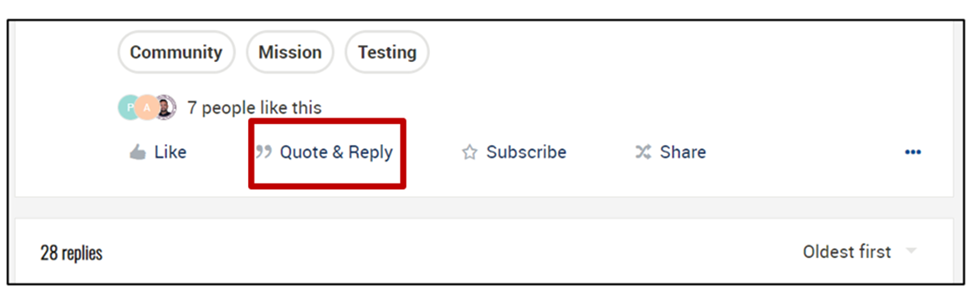

Submit your blog to the ShiftSync community. Please reply to the blogathon topic. Screenshot attached.

- Create an original blog post (between 300 and 1,000 words) that covers ONE of the following topics:

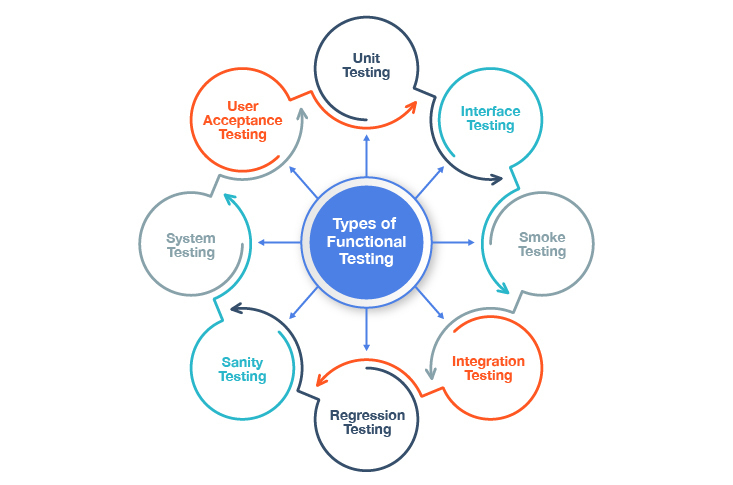

- 📃 Test Automation for Mobile Applications – This is a broad topic, so feel free to choose your own angle: for example, how it contributes to company success, or examples from your own experience (both successes and challenges, and what you learned from them).

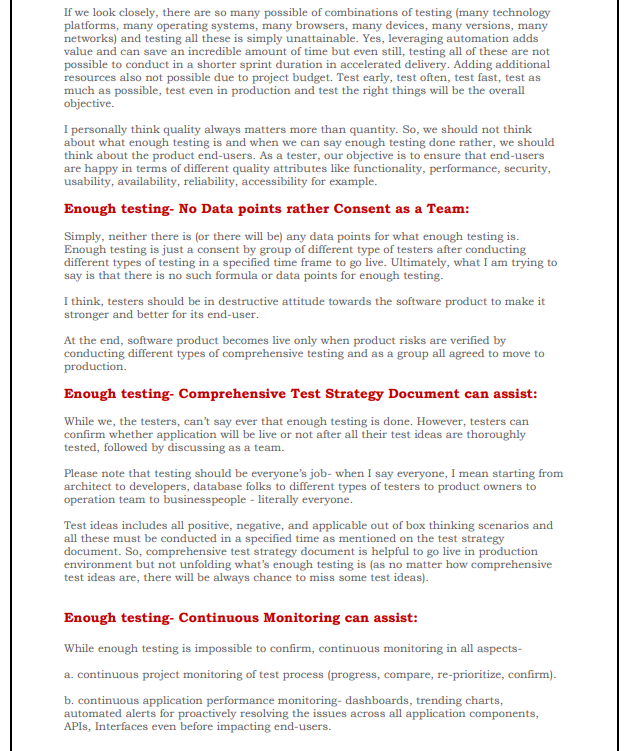

- 📃 What's enough testing?

- 📃 Integrating OAuth 2.0 in C# Microservices: A Step-by-Step Guide

- Follow these rules:

- An author can submit multiple blogs; however, each one must cover one of the topics listed above

- The entry should be in English

- The entry should not have multiple authors

- Don't forget to mention the title of the blog that you chose

- Please include images (no more than 5) to make your blog visually appealing. You can use images from Pexels or Unsplash

Who can vote?

- Anyone

- You cannot vote for your own blog, but go ahead and rally your network and friends: have them vote for you and get your name and article to the top of the list!